Sigmodal

Description

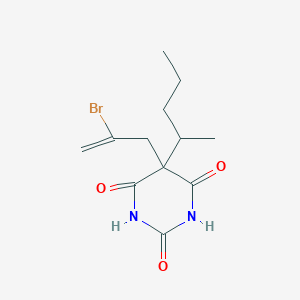

Properties

| Property | Value |
|---|---|
| CAS No. | 1216-40-6 |
| Molecular Formula | C12H17BrN2O3 |
| Molecular Weight | 317.18 g/mol |
| IUPAC Name | 5-(2-bromoprop-2-enyl)-5-pentan-2-yl-1,3-diazinane-2,4,6-trione |
| InChI | InChI=1S/C12H17BrN2O3/c1-4-5-7(2)12(6-8(3)13)9(16)14-11(18)15-10(12)17/h7H,3-6H2,1-2H3,(H2,14,15,16,17,18) |
| InChI Key | ZGVCLZRQOUEZHG-UHFFFAOYSA-N |
| SMILES | CCCC(C)C1(C(=O)NC(=O)NC1=O)CC(=C)Br |
| Canonical SMILES | CCCC(C)C1(C(=O)NC(=O)NC1=O)CC(=C)Br |
| Other CAS No. | 1216-40-6 |
| Origin of Product | United States |

The Sigmoid Function: A Technical Guide for Scientific Research

An In-depth Guide for Researchers, Scientists, and Drug Development Professionals

The sigmoid function, characterized by its distinctive "S"-shaped curve, is a cornerstone of data analysis in various scientific disciplines. Its ability to model systems that transition between two states makes it invaluable for researchers in biology, pharmacology, and drug development. This guide provides a technical overview of the sigmoid function, its mathematical underpinnings, and its practical applications in scientific research, complete with data presentation standards, experimental protocols, and visualizations.

The Mathematics of Sigmoidal Behavior

A sigmoid function is a mathematical function that produces a characteristic S-shaped curve.[1][2] The most common form is the logistic function, which maps any real-valued input to a value between 0 and 1.[3][4] This property is ideal for modeling processes that have a minimum and maximum value, such as the response of a biological system to increasing concentrations of a drug.

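The general equation for a four-parameter logistic (4PL) function, frequently used in dose-response analysis, is given below. Written this way, a positive HillSlope gives a response that rises from Bottom to Top as X increases, and a negative HillSlope gives a falling (inhibitory) curve:

Y = Bottom + (Top - Bottom) / (1 + (EC₅₀ / X) ^ HillSlope)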

Where:

- Y is the measured response.
- X is the concentration or dose of the stimulus.
- Bottom is the minimum response value (the lower plateau of the curve).[5]
- Top is the maximum response value (the upper plateau of the curve).[5]
- EC₅₀ (or IC₅₀) is the concentration that produces a response halfway between the Bottom and Top. It is a key measure of a drug's potency.[5]
- Hill Slope (or Hill Coefficient, nH) describes the steepness of the curve.[6][7] A Hill Slope of 1 is consistent with non-cooperative binding, a value greater than 1 suggests positive cooperativity, and a value less than 1 suggests negative cooperativity.[6][8]

The logistic function is widely used in fields like biology, chemistry, and statistics due to its ability to model growth rates, chemical reactions, and probabilities.[9][10]
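As a minimal sketch of how the 4PL model can be evaluated, the Python function below uses the rising-response convention Y = Bottom + (Top - Bottom) / (1 + (EC₅₀ / X) ^ HillSlope). The function name `four_pl` and the example parameter values are illustrative assumptions, not taken from any particular software package.

```python
def four_pl(x, bottom, top, ec50, hill_slope):
    """Four-parameter logistic response at concentration x (x > 0).

    Convention assumed here: a positive hill_slope gives a curve that
    rises from `bottom` to `top` as x increases.
    """
    if x <= 0:
        raise ValueError("concentration must be positive")
    return bottom + (top - bottom) / (1.0 + (ec50 / x) ** hill_slope)


# Hypothetical parameters: 0-100 % response, EC50 = 15 nM, Hill slope = 1.
for conc_nm in (1.5, 15.0, 150.0):
    print(conc_nm, round(four_pl(conc_nm, 0.0, 100.0, 15.0, 1.0), 1))
```

At X = EC₅₀ the function returns the midpoint between Bottom and Top, whatever the Hill slope.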

Core Applications in Scientific Research

The sigmoid curve is fundamental to analyzing data in several key areas of scientific and pharmaceutical research.

- Dose-Response Analysis: In pharmacology, dose-response curves are essential for characterizing the effect of a drug.[7] These curves are typically sigmoidal and are used to determine a drug's potency (EC₅₀ or IC₅₀) and efficacy (the maximum effect).[11] Nonlinear regression is the preferred method for fitting these curves to experimental data.[12][13]
- Enzyme Kinetics and Cooperativity: The Hill equation, a specific form of the sigmoid function, is used to describe the binding of ligands to macromolecules like enzymes or receptors.[8][14] The shape of the resulting curve and the value of the Hill coefficient can reveal whether the binding is cooperative.[6][15] Positive cooperativity, where the binding of one ligand increases the affinity for subsequent ligands, results in a steep sigmoidal curve (nH > 1).[6][8]
- Logistic Regression: In biomedical research and statistics, logistic regression is a powerful tool for predicting a binary outcome (e.g., disease presence/absence) from a set of independent variables.[16][17] The model uses the sigmoid function to transform a linear combination of predictors into a probability, which is always between 0 and 1.[4][17]
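The logistic regression step described above can be sketched in a few lines of Python. The intercept, coefficients, and predictor values below are hypothetical and chosen only to show how a linear combination is mapped to a probability; a real model would estimate them from data.

```python
import math


def sigmoid(z):
    """Standard logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_probability(intercept, coefficients, predictors):
    """Probability of the positive outcome for one observation."""
    linear = intercept + sum(b * x for b, x in zip(coefficients, predictors))
    return sigmoid(linear)


# Hypothetical model with two predictors (e.g., age in decades, a biomarker level).
p = predict_probability(-4.0, [0.5, 1.2], [6.0, 1.5])
print(round(p, 3))
```

Whatever the predictor values, the output stays strictly between 0 and 1, which is what makes the sigmoid suitable as a probability link.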

Quantitative Data Presentation

Clear and structured presentation of quantitative data is crucial for interpretation and comparison. When reporting results derived from sigmoid curve fitting, a tabular format is highly recommended.

Table 1: Comparative Potency of Two Investigational Drugs

| Parameter | Drug A | Drug B |
|---|---|---|
| Target | Receptor X | Receptor X |
| EC₅₀ (nM) | 15.2 | 89.5 |
| Hill Slope | 1.1 | 0.9 |
| Max Response (%) | 98.1 | 75.3 |
| R² of Fit | 0.992 | 0.985 |

This table provides a clear comparison of the key parameters for two hypothetical drugs, allowing for a quick assessment of their relative potency and efficacy.

Experimental Protocols

Accurate data for sigmoidal analysis relies on robust experimental design. Below is a generalized protocol for a common dose-response experiment.

Protocol: In Vitro Cytotoxicity Assay using a Resazurin-Based Reagent

This protocol aims to determine the IC₅₀ value of a test compound on a cancer cell line.

- Cell Seeding:
  - Culture cells to their logarithmic growth phase.
  - Trypsinize, count, and resuspend cells to a predetermined optimal density (e.g., 5,000-10,000 cells/well).
  - Seed 100 µL of the cell suspension into each well of a 96-well plate.
  - Incubate the plate for 24 hours at 37°C and 5% CO₂ to allow for cell attachment.[18]
- Compound Preparation and Dosing:
  - Prepare a high-concentration stock solution of the test compound (e.g., 10 mM in DMSO).
  - Perform a serial dilution (e.g., 1:3) in culture media to create a range of at least 7 concentrations.[18]
  - Include vehicle-only controls (negative control) and a maximum kill control (e.g., a known cytotoxic agent) on each plate.
  - Remove the old media from the cells and add 100 µL of the media containing the various compound concentrations.
- Incubation and Viability Assay:
  - Incubate the plate for a predetermined duration (e.g., 48 or 72 hours).
  - Add 20 µL of a resazurin-based viability reagent to each well.
  - Incubate for 1-4 hours, protected from light, until a color change is apparent.[18]
  - Measure fluorescence (e.g., 560 nm excitation / 590 nm emission) using a plate reader.[18]
- Data Analysis:
  - Subtract the average background signal from media-only wells.
  - Normalize the data by setting the average fluorescence of the vehicle control wells to 100% viability and the maximum kill control wells to 0% viability.[18]
  - Plot the normalized viability (%) against the logarithm of the compound concentration.
  - Fit the data using a four-parameter logistic (4PL) non-linear regression model to determine the IC₅₀ value.[18]
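The normalization step of the data analysis can be sketched as follows. All readings are hypothetical. The IC₅₀ here is estimated by log-linear interpolation between the two concentrations that bracket 50% viability, which is a cruder stand-in for the 4PL fit named in the protocol and is mainly useful as a sanity check or a starting value.

```python
import math


def normalize_viability(signal, vehicle_mean, max_kill_mean):
    """Scale a background-subtracted signal so vehicle = 100 % and max kill = 0 %."""
    return 100.0 * (signal - max_kill_mean) / (vehicle_mean - max_kill_mean)


def interpolate_ic50(concentrations, viabilities):
    """Log-linear interpolation of the concentration giving 50 % viability."""
    pairs = sorted(zip(concentrations, viabilities))
    for (c_lo, v_lo), (c_hi, v_hi) in zip(pairs, pairs[1:]):
        if v_lo >= 50.0 > v_hi:
            frac = (v_lo - 50.0) / (v_lo - v_hi)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10.0 ** log_ic50
    return None  # the data never cross 50 %


# Hypothetical background-subtracted fluorescence readings (arbitrary units).
vehicle_mean, max_kill_mean = 10000.0, 1000.0
conc_um = [0.01, 0.03, 0.1, 0.3, 1.0, 3.0, 10.0]
signal = [9900.0, 9500.0, 8200.0, 6000.0, 2800.0, 1500.0, 1100.0]

viability = [normalize_viability(s, vehicle_mean, max_kill_mean) for s in signal]
ic50_um = interpolate_ic50(conc_um, viability)
print(f"Interpolated IC50 ~ {ic50_um:.2f} uM")
```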

Signaling Pathways and Cooperativity

Sigmoidal responses are often the result of underlying cooperative mechanisms in biological signaling pathways. A classic example is the binding of an allosteric activator to a multi-subunit enzyme, which can switch the enzyme from a low-activity to a high-activity state in a highly sensitive, switch-like manner.
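One way to quantify how switch-like a response is uses the fold change in stimulus needed to move from 10% to 90% of the maximum response. For a Hill-type curve y = x^n / (K^n + x^n), that ratio is 81^(1/n). The sketch below assumes this idealized Hill form; the function names are illustrative.

```python
import math


def response_range_ratio(hill_coefficient):
    """Fold change in stimulus needed to go from 10 % to 90 % response
    for a Hill-type curve y = x**n / (K**n + x**n)."""
    return 81.0 ** (1.0 / hill_coefficient)


def effective_hill_coefficient(ec10, ec90):
    """Steepness estimate from the concentrations giving 10 % and 90 % response."""
    return math.log(81.0) / math.log(ec90 / ec10)


for n in (1, 2, 4):
    print(n, round(response_range_ratio(n), 2))
```

A non-cooperative system (n = 1) needs an 81-fold change in stimulus to cover this range, whereas a system with n = 4 needs only a 3-fold change.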

References

- 1. Sigmoid function | Formula, Derivative, & Machine Learning | Britannica [britannica.com]

- 2. surface.syr.edu [surface.syr.edu]

- 3. Sigmoid function - Wikipedia [en.wikipedia.org]

- 4. The Sigmoid Function: A Key Component in Data Science | DataCamp [datacamp.com]

- 5. graphpad.com [graphpad.com]

- 6. Khan Academy [khanacademy.org]

- 7. How Do I Perform a Dose-Response Experiment? - FAQ 2188 - GraphPad [graphpad.com]

- 8. Hill equation (biochemistry) - Wikipedia [en.wikipedia.org]

- 9. Logistic function - Wikipedia [en.wikipedia.org]

- 10. Logistic function | Formula, Definition, & Facts | Britannica [britannica.com]

- 11. fiveable.me [fiveable.me]

- 12. Analysis and comparison of sigmoidal curves: application to dose-response data. | Semantic Scholar [semanticscholar.org]

- 13. Dose Response Relationships/Sigmoidal Curve Fitting/Enzyme Kinetics [originlab.com]

- 14. chem.libretexts.org [chem.libretexts.org]

- 15. Hill kinetics - Mathematics of Reaction Networks [reaction-networks.net]

- 16. Logistic Regression in Medical Research - PMC [pmc.ncbi.nlm.nih.gov]

- 17. A Review of the Logistic Regression Model with Emphasis on Medical Research [scirp.org]

- 18. benchchem.com [benchchem.com]

The Sigmoid Curve: A Cornerstone of Biological Modeling

An In-depth Technical Guide on the History, Application, and Experimental Basis of the Sigmoid Function in Biological Sciences

For Researchers, Scientists, and Drug Development Professionals

Executive Summary

The sigmoid function, with its characteristic "S"-shaped curve, is an indispensable mathematical tool in biological modeling. Its utility spans a wide array of applications, from describing population growth dynamics to characterizing dose-response relationships in pharmacology and modeling the cooperative binding of enzymes. This technical guide provides a comprehensive overview of the history of the sigmoid function in biological modeling, details key experimental protocols that generate sigmoidal data, and presents visualizations of complex signaling pathways that exhibit sigmoidal behavior. By understanding the origins and diverse applications of this fundamental concept, researchers and drug development professionals can better leverage its power in their own work.

A Historical Perspective on the Sigmoid Function in Biology

The application of the sigmoid function in biology has a rich history, with several key developments shaping its use. The two primary forms of the sigmoid function that have found widespread use in biological modeling are the logistic function and the Gompertz function.

The Logistic Function and Population Dynamics

The logistic function was first introduced in a series of papers between 1838 and 1847 by the Belgian mathematician Pierre François Verhulst.[1] Verhulst developed the function to model population growth, offering a refinement of the exponential growth model by incorporating the concept of a "carrying capacity" – a limit to the population size that an environment can sustain.[2] This self-limiting growth model produces a characteristic S-shaped curve, where the initial growth is slow, followed by a rapid acceleration, and finally a plateau as the carrying capacity is approached.[3] The logistic function was later rediscovered and popularized in the early 20th century by researchers like Raymond Pearl and Lowell Reed, who applied it to model population growth in the United States.[4]

The Gompertz Function and Mortality

Another important sigmoid function, the Gompertz function, was introduced by the British actuary Benjamin Gompertz in 1825.[5] Originally, Gompertz designed the function to describe human mortality, based on the assumption that a person's resistance to death decreases as they age.[5] While similar to the logistic function in its sigmoidal shape, the Gompertz curve is asymmetrical, with a more gradual approach to its asymptote on one side.[5] This characteristic has made it useful for modeling various biological phenomena, including tumor growth and the growth of animal and plant populations.[6][7]
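The asymmetry can be illustrated numerically. The sketch below assumes the common parametrization f(t) = a * exp(-b * exp(-c * t)) for the Gompertz curve; the parameter values are hypothetical. Growth is fastest at about 37% of the asymptote (a / e), whereas a logistic curve grows fastest at exactly half of its asymptote.

```python
import math


def gompertz(t, a, b, c):
    """Gompertz curve a * exp(-b * exp(-c * t)) with upper asymptote a."""
    return a * math.exp(-b * math.exp(-c * t))


def logistic(t, L, k, t0):
    """Logistic curve with upper asymptote L and midpoint t0."""
    return L / (1.0 + math.exp(-k * (t - t0)))


a, b, c = 1.0, 5.0, 0.5          # hypothetical parameters
t_inflection = math.log(b) / c   # time of fastest Gompertz growth
print(gompertz(t_inflection, a, b, c))   # equals a / e
print(logistic(3.0, 1.0, 0.5, 3.0))      # equals L / 2 at the logistic midpoint
```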

Dose-Response Relationships and the Hill Equation

The concept of the dose-response relationship, a cornerstone of pharmacology and toxicology, also relies heavily on the sigmoid curve. The history of understanding this relationship can be traced back to Paracelsus in the 16th century, who recognized the importance of dose in determining toxicity.[8][9] However, it was in the "Classical Era" of pharmacology, from around 1900 to 1965, that significant progress was made in mathematically modeling this relationship.[8][9]

A pivotal development was the formulation of the Hill equation by Archibald Vivian Hill in 1910.[10][11] Hill originally developed this equation to describe the sigmoidal binding curve of oxygen to hemoglobin, a phenomenon known as cooperative binding.[10][12] The Hill equation is now widely used to model dose-response relationships, where the effect of a drug is plotted against its concentration.[11] The sigmoidal nature of this curve reflects the fact that as the concentration of a drug increases, its effect typically increases until it reaches a maximum, at which point further increases in concentration produce no greater effect.[13]

Enzyme Kinetics and Allostery

In the realm of enzyme kinetics, the standard Michaelis-Menten model describes a hyperbolic relationship between substrate concentration and reaction velocity.[14] However, for allosteric enzymes, which have multiple binding sites and exhibit cooperativity, the relationship is often sigmoidal.[14][15] The binding of a substrate molecule to one active site can increase the affinity of the other active sites for the substrate, leading to a much steeper increase in reaction velocity over a narrow range of substrate concentrations.[15] This sigmoidal response allows for more sensitive regulation of metabolic pathways.[16]
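The contrast between hyperbolic and sigmoidal kinetics can be sketched with the Hill form of the rate equation, v = Vmax * [S]^n / (K^n + [S]^n), which reduces to the Michaelis-Menten equation when n = 1. The values of Vmax, K, and n below are hypothetical.

```python
def hill_velocity(s, vmax, k_half, n):
    """Reaction velocity from the Hill equation; n = 1 reduces to Michaelis-Menten."""
    return vmax * s ** n / (k_half ** n + s ** n)


vmax, k_half = 1.0, 10.0   # hypothetical units
for s in (5.0, 10.0, 20.0):
    mm = hill_velocity(s, vmax, k_half, 1)
    coop = hill_velocity(s, vmax, k_half, 4)
    print(f"[S]={s:5.1f}  hyperbolic={mm:.3f}  cooperative(n=4)={coop:.3f}")
```

Both curves pass through half of Vmax at [S] = K, but the cooperative curve moves from a low to a high velocity over a much narrower range of substrate concentrations.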

Experimental Protocols for Generating Sigmoidal Data

The theoretical application of the sigmoid function is grounded in experimental observations. The following sections detail the methodologies for key experiments that typically yield data best modeled by a sigmoid curve.

Bacterial Growth Curve

Objective: To measure the growth of a bacterial population over time and demonstrate the phases of bacterial growth, which can be modeled by a logistic function.

Methodology:

- Inoculation: A sterile liquid growth medium is inoculated with a small number of bacteria from a pure culture.[2]
- Incubation: The culture is incubated under optimal conditions of temperature, pH, and aeration.
- Sampling: At regular time intervals, a small aliquot of the culture is aseptically removed.[2]
- Measurement of Bacterial Growth: The growth of the bacterial population is typically measured by one of two methods:
  - Turbidity Measurement (Optical Density): The turbidity of the culture, which is proportional to the bacterial cell mass, is measured using a spectrophotometer at a wavelength of 600 nm (OD600).[2] This is a rapid and non-destructive method.
  - Viable Cell Count: The number of viable bacteria is determined by performing serial dilutions of the culture aliquot and plating them on a solid agar medium. After incubation, the number of colonies is counted, and the concentration of viable cells in the original culture is calculated.
- Data Plotting: The logarithm of the number of viable cells or the optical density is plotted against time.[2]

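Expected Outcome: The resulting plot will show the characteristic four phases of bacterial growth: the lag phase, the exponential (log) phase, the stationary phase, and the death phase. The first three phases together form the sigmoidal portion of the curve that a logistic function can model; the death phase appears as a subsequent decline.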
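For the viable cell count method, the concentration in the original culture follows from the colony count, the total dilution, and the volume plated. The sketch below shows that arithmetic; the function name and the example plate are hypothetical.

```python
def cfu_per_ml(colony_count, dilution, volume_plated_ml):
    """Viable cells per mL of the original culture.

    `dilution` is the total dilution of the plated sample as a fraction
    (e.g., 1e-6 for a one-in-a-million dilution).
    """
    return colony_count / (dilution * volume_plated_ml)


# Hypothetical plate: 150 colonies from 0.1 mL of a 10^-6 dilution.
print(f"{cfu_per_ml(150, 1e-6, 0.1):.2e} CFU/mL")
```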

Table 1: Representative Data for a Bacterial Growth Curve

| Time (hours) | Optical Density (OD600) |
|---|---|
| 0 | 0.05 |
| 2 | 0.06 |
| 4 | 0.12 |
| 6 | 0.25 |
| 8 | 0.50 |
| 10 | 0.80 |
| 12 | 1.10 |
| 14 | 1.25 |
| 16 | 1.30 |
| 18 | 1.30 |
| 20 | 1.28 |
| 22 | 1.25 |
| 24 | 1.20 |

Dose-Response Curve in Pharmacology

Objective: To determine the relationship between the concentration of a drug and its biological effect.

Methodology:

- Cell Culture or Tissue Preparation: A suitable biological system, such as a cell line or an isolated tissue, is prepared.
- Drug Application: A range of concentrations of the drug are applied to the biological system. A control group with no drug is also included.
- Incubation: The biological system is incubated with the drug for a predetermined period to allow for a response.
- Response Measurement: The biological response is measured. This can be a variety of endpoints, such as cell viability, enzyme activity, or muscle contraction.
- Data Normalization: The response at each drug concentration is often normalized to the maximum possible response to express the data as a percentage of the maximum effect.
- Data Plotting: The response is plotted against the logarithm of the drug concentration.[13]

Expected Outcome: The resulting plot is typically a sigmoidal curve, from which key pharmacological parameters such as the EC50 (the concentration of the drug that produces 50% of the maximum effect) and the Hill slope can be determined.

Table 2: Representative Data for a Dose-Response Curve of an Agonist

| Log [Agonist] (M) | % Response |
|---|---|
| -10 | 2 |
| -9.5 | 5 |
| -9 | 15 |
| -8.5 | 35 |
| -8 | 50 |
| -7.5 | 65 |
| -7 | 85 |
| -6.5 | 95 |
| -6 | 98 |
| -5.5 | 100 |
| -5 | 100 |

Hemoglobin-Oxygen Dissociation Curve

Objective: To determine the affinity of hemoglobin for oxygen by measuring the percentage of hemoglobin saturated with oxygen at various partial pressures of oxygen.

Methodology:

- Blood Sample Preparation: A sample of whole blood or a purified hemoglobin solution is prepared.[15]
- Tonometer Setup: The blood sample is placed in a tonometer, a device that allows for the equilibration of the blood with gases at known partial pressures.
- Gas Equilibration: The blood sample is exposed to a series of gas mixtures with varying partial pressures of oxygen (pO2). The partial pressure of carbon dioxide and the pH are typically held constant.[15]
- Measurement of Oxygen Saturation: After equilibration at each pO2, the percentage of hemoglobin saturated with oxygen (SO2) is measured using a co-oximeter, which is a specialized spectrophotometer.
- Data Plotting: The percentage of oxygen saturation is plotted against the partial pressure of oxygen.

Expected Outcome: The resulting plot is a characteristic sigmoidal curve, demonstrating the cooperative binding of oxygen to hemoglobin. The P50, the partial pressure of oxygen at which hemoglobin is 50% saturated, can be determined from this curve.

Table 3: Representative Data for a Hemoglobin-Oxygen Dissociation Curve

| pO2 (mmHg) | % O2 Saturation |
|---|---|
| 10 | 10 |
| 20 | 35 |
| 27 | 50 |
| 30 | 57 |
| 40 | 75 |
| 50 | 85 |
| 60 | 90 |
| 70 | 94 |
| 80 | 96 |
| 90 | 97 |
| 100 | 98 |
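As a rough illustration of how cooperativity can be read from such data, the sketch below applies a Hill plot to the representative values in Table 3: log10(Y / (1 - Y)) is regressed on log10(pO2), the slope estimates the Hill coefficient, and the x-intercept gives the P50. Fitting a straight line across the whole saturation range is a simplifying assumption, since real Hill plots flatten at the extremes.

```python
import math

# Representative values from Table 3 (pO2 in mmHg, saturation in %).
po2 = [10, 20, 27, 30, 40, 50, 60, 70, 80, 90, 100]
sat = [10, 35, 50, 57, 75, 85, 90, 94, 96, 97, 98]


def hill_plot_fit(pressures, saturations_percent):
    """Least-squares line through log10(Y / (1 - Y)) versus log10(pO2).

    Returns (hill_coefficient, p50): the slope is the Hill coefficient and
    the x-intercept is log10(P50).
    """
    xs = [math.log10(p) for p in pressures]
    ys = [math.log10((s / 100.0) / (1.0 - s / 100.0)) for s in saturations_percent]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, 10.0 ** (-intercept / slope)


n_h, p50 = hill_plot_fit(po2, sat)
print(f"Hill coefficient ~ {n_h:.1f}, P50 ~ {p50:.0f} mmHg")
```

A slope well above 1 is the quantitative signature of the cooperative oxygen binding described above.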

Visualization of Sigmoidal Behavior in Signaling Pathways

Many intracellular signaling pathways exhibit switch-like, sigmoidal responses to stimuli. This allows cells to convert graded inputs into decisive, all-or-none outputs. The following sections outline two key signaling pathways known for their sigmoidal activation kinetics.

G-Protein Coupled Receptor (GPCR) Signaling Pathway

G-protein coupled receptors (GPCRs) are a large family of transmembrane receptors that play a crucial role in cellular communication.[9] Upon binding of a ligand, GPCRs activate intracellular G-proteins, which in turn trigger a cascade of downstream signaling events.[5] The amplification inherent in this cascade often leads to a sigmoidal response.

Caption: A simplified G-protein coupled receptor (GPCR) signaling pathway.

Mitogen-Activated Protein Kinase (MAPK) Signaling Cascade

The Mitogen-Activated Protein Kinase (MAPK) cascade is a highly conserved signaling module that regulates a wide range of cellular processes, including cell proliferation, differentiation, and apoptosis. This three-tiered kinase cascade (MAPKKK -> MAPKK -> MAPK) provides multiple levels of signal amplification and regulation, often resulting in an ultrasensitive, switch-like, sigmoidal response to stimuli.

Caption: The core logic of a Mitogen-Activated Protein Kinase (MAPK) cascade.

Conclusion

The sigmoid function is a powerful and versatile tool that has been fundamental to the advancement of quantitative biology. From its early applications in modeling population growth and mortality to its current widespread use in pharmacology, enzyme kinetics, and systems biology, the S-shaped curve has provided a mathematical framework for understanding a diverse range of biological processes. For researchers, scientists, and drug development professionals, a thorough understanding of the historical context, experimental underpinnings, and practical applications of the sigmoid function is essential for the rigorous analysis and interpretation of biological data. As our ability to generate large and complex datasets continues to grow, the principles of sigmoidal modeling will undoubtedly remain a cornerstone of biological inquiry.

References

- 1. analyticalscience.wiley.com [analyticalscience.wiley.com]

- 2. Estimating microbial population data from optical density - PMC [pmc.ncbi.nlm.nih.gov]

- 3. harvardapparatus.com [harvardapparatus.com]

- 4. How to Interpret Dose-Response Curves [sigmaaldrich.com]

- 5. derangedphysiology.com [derangedphysiology.com]

- 6. graphpad.com [graphpad.com]

- 7. Oxygen Saturation - StatPearls - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 8. 2.1 Dose-Response Modeling | The inTelligence And Machine lEarning (TAME) Toolkit for Introductory Data Science, Chemical-Biological Analyses, Predictive Modeling, and Database Mining for Environmental Health Research [uncsrp.github.io]

- 9. researchgate.net [researchgate.net]

- 10. Estimating microbial population data from optical density | PLOS One [journals.plos.org]

- 11. researchgate.net [researchgate.net]

- 12. study.com [study.com]

- 13. Quantitative Measure of Receptor Agonist and Modulator Equi-Response and Equi-Occupancy Selectivity - PMC [pmc.ncbi.nlm.nih.gov]

- 14. graphpad.com [graphpad.com]

- 15. journals.plos.org [journals.plos.org]

- 16. mobile.fpnotebook.com [mobile.fpnotebook.com]

Understanding the S-shaped Curve in Scientific Data: An In-depth Technical Guide

The S-shaped, or sigmoidal, curve is a fundamental pattern observed across numerous scientific disciplines, from molecular biology to pharmacology and population dynamics. This guide provides a technical overview of the S-shaped curve, its mathematical underpinnings, and its practical applications for researchers, scientists, and drug development professionals. We will delve into the experimental methodologies that generate this characteristic curve and explore the biological signaling pathways that exhibit this behavior.

The Core Concept: A Tale of Three Phases

The S-shaped curve graphically represents a process that begins with a slow initial phase, transitions into a period of rapid, exponential growth, and finally concludes with a plateau phase where the rate of increase slows and approaches a maximum. This pattern is often modeled by logistic functions.[1][2] The three distinct phases can be characterized as follows:

- Lag Phase: The initial phase where the process starts slowly. In a biological context, this could represent a period of adaptation, such as bacteria adjusting to a new environment or a low initial binding of a ligand to a receptor.
- Logarithmic (Exponential) Phase: A period of rapid acceleration where the rate of the process is at its highest. This is characteristic of phenomena like rapid cell proliferation or the steep part of a dose-response curve.
- Stationary (Plateau) Phase: The final phase where the process slows down and approaches a natural limit or carrying capacity. This can be due to factors like resource depletion, saturation of binding sites, or the maximum achievable effect of a drug.

In sequence, a sigmoidal progression runs: lag phase -> logarithmic (exponential) phase -> stationary (plateau) phase.

Quantitative Data Presentation

To illustrate the S-shaped curve in a practical context, we present quantitative data from three common experimental scenarios: a dose-response assay, a bacterial growth curve, and the cooperative binding of oxygen to hemoglobin.

Dose-Response Relationship

The following table shows a typical dataset from a dose-response experiment, where the response of a biological system is measured at increasing concentrations of a drug.

| Concentration (nM) | Response (% Inhibition) |
|---|---|
| 0.1 | 2.5 |
| 1 | 5.1 |
| 10 | 25.3 |
| 50 | 50.2 |
| 100 | 75.8 |
| 500 | 95.1 |
| 1000 | 98.9 |
| 5000 | 99.5 |

Bacterial Growth Curve

This table represents the change in optical density (OD) at 600 nm over time as a bacterial culture grows, a common method to monitor microbial population dynamics.

| Time (minutes) | Optical Density (OD600) |
|---|---|
| 0 | 0.05 |
| 30 | 0.08 |
| 60 | 0.15 |
| 90 | 0.32 |
| 120 | 0.65 |
| 150 | 0.98 |
| 180 | 1.25 |
| 210 | 1.35 |
| 240 | 1.38 |

Oxygen-Hemoglobin Dissociation

The sigmoidal relationship between the partial pressure of oxygen and the saturation of hemoglobin is a classic example of cooperative binding.[3][4]

| Partial Pressure of O2 (mmHg) | Hemoglobin Saturation (%) |
|---|---|
| 10 | 10 |
| 20 | 25 |
| 30 | 50 |
| 40 | 75 |
| 50 | 85 |
| 60 | 90 |
| 70 | 94 |
| 80 | 96 |
| 90 | 97 |
| 100 | 98 |

Experimental Protocols

Detailed methodologies are crucial for reproducing experimental results that yield sigmoidal data. Below are protocols for two key experiments.

Dose-Response Assay Protocol

This protocol outlines the steps for a typical in vitro dose-response experiment to determine the potency of a compound.

- Cell Culture: Plate cells at a desired density in a multi-well plate (e.g., 96-well) and incubate under appropriate conditions to allow for cell attachment and growth.
- Compound Preparation: Prepare a serial dilution of the test compound in the appropriate vehicle (e.g., DMSO). A typical dilution series might span several orders of magnitude.
- Treatment: Add the diluted compounds to the corresponding wells of the cell plate. Include vehicle-only controls (negative control) and a positive control (a compound with a known effect).
- Incubation: Incubate the treated plates for a predetermined period, which can range from hours to days depending on the assay endpoint.
- Assay Endpoint Measurement: Measure the biological response of interest. This could be cell viability (e.g., using an MTT or CellTiter-Glo assay), enzyme activity, or the expression of a specific marker.
- Data Analysis: Plot the response as a function of the log of the compound concentration. Fit the data to a four-parameter logistic equation to determine key parameters like the EC50 or IC50.
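To make the fitting step concrete, the sketch below estimates the half-maximal concentration and Hill slope for the dose-response values tabulated earlier in this guide. It swaps in a brute-force grid search for proper non-linear regression and fixes the plateaus at 0% and 100%; both are simplifying assumptions, and dedicated curve-fitting software would normally be used instead.

```python
# Dose-response values from the Dose-Response Relationship table in this guide.
conc_nm = [0.1, 1, 10, 50, 100, 500, 1000, 5000]
inhibition = [2.5, 5.1, 25.3, 50.2, 75.8, 95.1, 98.9, 99.5]


def model(x, ic50, hill, bottom=0.0, top=100.0):
    """4PL curve with the plateaus fixed (an assumption for this sketch)."""
    return bottom + (top - bottom) / (1.0 + (ic50 / x) ** hill)


def sum_squared_error(ic50, hill):
    """Sum of squared residuals between the data and the model."""
    return sum((y - model(x, ic50, hill)) ** 2 for x, y in zip(conc_nm, inhibition))


def grid_search_fit():
    """Brute-force least squares over a coarse grid of IC50 and Hill slope."""
    best = None
    for i in range(0, 151):              # IC50 from 1 to 1000 nM, log-spaced
        ic50 = 10.0 ** (i / 50.0)
        for j in range(6, 61):           # Hill slope from 0.30 to 3.00
            hill = j * 0.05
            sse = sum_squared_error(ic50, hill)
            if best is None or sse < best[0]:
                best = (sse, ic50, hill)
    return best[1], best[2]


ic50_fit, hill_fit = grid_search_fit()
print(f"IC50 ~ {ic50_fit:.0f} nM, Hill slope ~ {hill_fit:.2f}")
```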

In outline, the workflow for a dose-response experiment runs: cell culture -> compound preparation -> treatment -> incubation -> endpoint measurement -> data analysis.

Bacterial Growth Curve Protocol

This protocol describes how to measure a bacterial growth curve using spectrophotometry.[5][6][7]

- Inoculum Preparation: Inoculate a single bacterial colony into a small volume of sterile growth medium and incubate overnight to obtain a saturated starter culture.
- Culture Inoculation: Dilute the overnight culture into a larger volume of fresh, pre-warmed sterile medium to a low starting optical density (e.g., OD600 of 0.05).
- Incubation and Sampling: Incubate the culture under optimal conditions (e.g., 37°C with shaking). At regular time intervals (e.g., every 30 minutes), aseptically remove a small aliquot of the culture.
- OD Measurement: Measure the optical density of each aliquot at a wavelength of 600 nm (OD600) using a spectrophotometer. Use sterile medium as a blank.
- Data Plotting: Plot the OD600 values against time to generate the bacterial growth curve. The data can also be plotted on a semi-logarithmic scale (log OD600 vs. time) to linearize the exponential growth phase.
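Because the exponential phase is linear on a semi-logarithmic scale, its slope gives the specific growth rate, and the doubling time follows as ln(2) divided by that rate. The sketch below applies this to four points from the bacterial growth table earlier in this guide; treating 30-120 minutes as the exponential phase is a judgment call made for illustration.

```python
import math

# Exponential-phase points from the bacterial growth table in this guide
# (time in minutes, OD600); the choice of window is an assumption.
times_min = [30, 60, 90, 120]
od600 = [0.08, 0.15, 0.32, 0.65]


def growth_rate(times, ods):
    """Slope of ln(OD600) versus time by ordinary least squares (per minute)."""
    ys = [math.log(od) for od in ods]
    n = len(times)
    mean_t, mean_y = sum(times) / n, sum(ys) / n
    stt = sum((t - mean_t) ** 2 for t in times)
    sty = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, ys))
    return sty / stt


mu = growth_rate(times_min, od600)
doubling_time_min = math.log(2) / mu
print(f"growth rate ~ {mu:.4f} per min, doubling time ~ {doubling_time_min:.0f} min")
```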

Signaling Pathways with Sigmoidal Responses

Many biological signaling pathways exhibit switch-like, sigmoidal behavior in response to a stimulus. This "ultrasensitivity" allows a cell to convert a graded input into a more decisive, all-or-none output.[8] A classic example is the Mitogen-Activated Protein Kinase (MAPK) cascade.

The MAPK/ERK Signaling Pathway

The MAPK/ERK pathway is a highly conserved signaling cascade that regulates a wide range of cellular processes, including proliferation, differentiation, and survival. The pathway consists of a series of protein kinases that sequentially phosphorylate and activate one another. This multi-step activation process contributes to the sigmoidal nature of the response. A small initial signal can be amplified at each step, leading to a sharp increase in the activation of the final kinase, ERK, once a certain threshold of the initial stimulus is reached.

In simplified form, the core MAPK/ERK signaling cascade runs: stimulus -> Raf (MAPKKK) -> MEK (MAPKK) -> ERK (MAPK) -> cellular response.

References

- 1. derangedphysiology.com [derangedphysiology.com]

- 2. cdn-links.lww.com [cdn-links.lww.com]

- 3. litfl.com [litfl.com]

- 4. edodanilyan.com [edodanilyan.com]

- 5. creative-diagnostics.com [creative-diagnostics.com]

- 6. Oxygen–hemoglobin dissociation curve - Wikipedia [en.wikipedia.org]

- 7. documents.thermofisher.com [documents.thermofisher.com]

- 8. Item - Bacterial bioindicators growth curves - figshare - Figshare [figshare.com]

The Logistic Function in Science: A Conceptual and Technical Overview

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

The logistic function, a sigmoid or "S"-shaped curve, is a fundamental mathematical concept with wide-ranging applications across numerous scientific disciplines. Its ability to model growth processes that are initially exponential and then level off due to limiting factors makes it an invaluable tool in fields such as biology, ecology, epidemiology, and pharmacology. This technical guide provides a comprehensive overview of the logistic function, its mathematical underpinnings, and its practical applications in scientific research, with a focus on quantitative data, experimental methodologies, and visual representations of underlying processes.

Core Concepts of the Logistic Function

The logistic function describes a common pattern of growth where the rate of increase is proportional to both the current size and the remaining available "space" for growth.[1] This self-limiting growth is in contrast to purely exponential models, which assume unlimited resources.[2]

The general logistic function is defined by the following equation:

f(x) = L / (1 + e^(-k(x - x₀)))[1]

Where:

- L (Carrying Capacity): The maximum value that the function can reach. In biological contexts, this often represents the maximum population size an environment can sustain.[1]
- k (Logistic Growth Rate): The steepness of the curve, indicating how quickly the growth occurs.[1]
- x₀ (Midpoint): The x-value of the sigmoid's midpoint, where the growth rate is at its maximum.[1]
- e: The base of the natural logarithm.

A simplified and commonly used form is the standard sigmoid function, where L=1, k=1, and x₀=0:

σ(x) = 1 / (1 + e^(-x))

This form is particularly prevalent in machine learning and statistics, where it is used to convert a real-valued number into a probability between 0 and 1.
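The two forms above can be expressed as a single Python function whose defaults give the standard sigmoid. The sketch also includes the derivative, df/dx = k * f * (1 - f / L), which peaks at the midpoint with value k * L / 4. The parameter values in the example are hypothetical.

```python
import math


def logistic(x, L=1.0, k=1.0, x0=0.0):
    """General logistic function; the defaults give the standard sigmoid."""
    return L / (1.0 + math.exp(-k * (x - x0)))


def logistic_slope(x, L=1.0, k=1.0, x0=0.0):
    """Derivative df/dx = k * f * (1 - f / L)."""
    f = logistic(x, L, k, x0)
    return k * f * (1.0 - f / L)


# Hypothetical growth parameters: L = 1.4 (OD units), k = 0.5 per hour, x0 = 7 h.
print(logistic(7.0, 1.4, 0.5, 7.0))        # half of L at the midpoint
print(logistic_slope(7.0, 1.4, 0.5, 7.0))  # maximum slope, k * L / 4
print(logistic(0.0))                       # standard sigmoid at zero
```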

Applications in Scientific Disciplines

The logistic function's versatility allows it to model a wide array of phenomena. Below are key applications in various scientific fields, complete with illustrative data and experimental considerations.

Population Ecology: Modeling Microbial Growth

In ecology, the logistic function is a cornerstone for modeling population growth when resources are limited.[1] A classic example is the growth of a microbial population in a nutrient-rich medium. Initially, with ample resources, the population grows exponentially. As the population increases, resources become scarce, and waste products accumulate, leading to a decrease in the growth rate until the population reaches the carrying capacity of the environment (often written K in ecology, and corresponding to L in the equation above).[3]

Quantitative Data: Bacterial Growth Curve

The following table presents a hypothetical dataset representing the growth of a bacterial culture over time, measured by its optical density (OD) at 600 nm. This data follows a logistic pattern.

| Time (Hours) | Optical Density (OD600) |
|---|---|
| 0 | 0.05 |
| 2 | 0.12 |
| 4 | 0.28 |
| 6 | 0.55 |
| 8 | 0.85 |
| 10 | 1.10 |
| 12 | 1.25 |
| 14 | 1.32 |
| 16 | 1.35 |
| 18 | 1.36 |

Experimental Protocol: Generating a Bacterial Growth Curve

This protocol outlines the steps to generate data for a bacterial growth curve, which can then be fitted to a logistic model.

- Inoculum Preparation:
  - Aseptically transfer a single colony of the bacterium from an agar plate into a sterile test tube containing a small volume of nutrient broth.
  - Incubate the culture overnight at the optimal temperature for the specific bacterium (e.g., 37°C for E. coli). This creates a starter culture.
- Growth Experiment Setup:
  - In a larger sterile flask containing a larger volume of fresh, pre-warmed nutrient broth, inoculate with a small volume of the overnight starter culture to achieve a low initial optical density (e.g., OD600 of ~0.05).
  - Place the flask in a shaking incubator set to the optimal temperature and agitation speed to ensure aeration and prevent cell settling.
- Data Collection:
  - At regular time intervals (e.g., every 2 hours), aseptically remove a small aliquot of the culture.
  - Measure the optical density of the aliquot at a wavelength of 600 nm (OD600) using a spectrophotometer. Use sterile nutrient broth as a blank.
  - Continue measurements until the OD600 readings plateau, indicating that the population has reached its stationary phase.[4]
- Data Analysis:
  - Plot the OD600 values against time.
  - Fit the data to the logistic growth equation using non-linear regression software to estimate the carrying capacity (L), growth rate (k), and midpoint (x₀).
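
The fitting step can be approximated without dedicated software. The sketch below is not a substitute for non-linear regression: it assumes the carrying capacity L is fixed in advance (here a guessed plateau of 1.37, slightly above the highest reading) and uses the logit transform ln(y / (L − y)) = k(t − x₀), which is linear in t, to estimate k and x₀ by ordinary least squares. The function names are hypothetical.

```python
import math

def logistic_growth(t, L, k, x0):
    """Logistic growth curve: L / (1 + e^(-k (t - x0)))."""
    return L / (1.0 + math.exp(-k * (t - x0)))

def fit_logistic_linearized(times, values, L):
    """Estimate k and x0 with L held fixed, via least squares on the logit."""
    pts = [(t, math.log(y / (L - y))) for t, y in zip(times, values) if 0 < y < L]
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_z = sum(z for _, z in pts) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in pts)
    sxz = sum((t - mean_t) * (z - mean_z) for t, z in pts)
    k = sxz / sxx                 # slope of the transformed line = growth rate
    x0 = mean_t - mean_z / k      # intercept of the line equals -k * x0
    return k, x0

hours = [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
od600 = [0.05, 0.12, 0.28, 0.55, 0.85, 1.10, 1.25, 1.32, 1.35, 1.36]

if __name__ == "__main__":
    k, x0 = fit_logistic_linearized(hours, od600, L=1.37)  # L is an assumed plateau
    print(f"k ~ {k:.3f} per hour, midpoint ~ {x0:.2f} h")
```

The transform amplifies noise in readings close to 0 or to L, so estimates from this shortcut are best treated as starting values for a proper non-linear fit.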

Logical Workflow for Bacterial Growth Experiment

Caption: Workflow for a typical bacterial growth curve experiment.

Pharmacology and Drug Development: Dose-Response Relationships

In pharmacology, the logistic function is essential for modeling dose-response relationships.[5] It is used to describe how the effect of a drug changes with its concentration. A common application is the determination of the half-maximal inhibitory concentration (IC50) or half-maximal effective concentration (EC50), which represent the concentration of a drug that elicits 50% of its maximal effect.[6] The four-parameter logistic (4PL) model is widely used for this purpose.[7]

Quantitative Data: Dose-Response Curve for an Inhibitor

The following table shows hypothetical data from a dose-response experiment, where the response is the percentage of enzyme activity inhibited by a drug at various concentrations.

| Drug Concentration (nM) | log(Concentration) | % Inhibition |
|---|---|---|
| 0.1 | -1.00 | 2.5 |
| 1 | 0.00 | 8.1 |
| 10 | 1.00 | 25.3 |
| 100 | 2.00 | 50.1 |
| 1000 | 3.00 | 75.2 |
| 10000 | 4.00 | 91.8 |
| 100000 | 5.00 | 97.3 |
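
To make the shape of such data concrete, the sketch below evaluates a four-parameter logistic curve in one common parameterization, Y = Bottom + (Top − Bottom) / (1 + (X / EC50)^HillSlope). With this sign convention a response that rises with concentration corresponds to a negative HillSlope. The parameter values are hand-picked for illustration and are not the result of a fit.

```python
def four_pl(x, bottom, top, ec50, hill_slope):
    """4PL model: bottom + (top - bottom) / (1 + (x / ec50) ** hill_slope)."""
    return bottom + (top - bottom) / (1.0 + (x / ec50) ** hill_slope)

concentrations_nm = [0.1, 1, 10, 100, 1000, 10000, 100000]
observed = [2.5, 8.1, 25.3, 50.1, 75.2, 91.8, 97.3]

if __name__ == "__main__":
    # Illustrative, hand-picked parameters (assumptions, not fitted values).
    for c, obs in zip(concentrations_nm, observed):
        pred = four_pl(c, bottom=0.0, top=100.0, ec50=100.0, hill_slope=-0.5)
        print(f"{c:>8} nM  observed {obs:5.1f}  model {pred:5.1f}")
```

Whatever the slope, the model returns the midpoint between Bottom and Top when X equals EC50, which is why that parameter is read as the half-maximal concentration.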

Experimental Protocol: IC50 Determination via a Cell-Based Assay

This protocol describes a general method for determining the IC50 of a compound using a cell viability assay.

- Cell Culture and Seeding:
  - Culture a relevant cell line under standard conditions.
  - Harvest the cells and seed them into a 96-well plate at a predetermined density. Allow the cells to adhere and resume growth overnight.
- Compound Treatment:
  - Prepare a serial dilution of the test compound in the appropriate cell culture medium.
  - Remove the old medium from the 96-well plate and add the medium containing the different concentrations of the compound. Include vehicle-only controls (0% inhibition) and a positive control for maximal inhibition if available.
- Incubation:
  - Incubate the plate for a specific period (e.g., 48 or 72 hours) to allow the compound to exert its effect.
- Viability Assay:
  - Add a viability reagent (e.g., MTT, resazurin, or a luciferase-based reagent) to each well according to the manufacturer's instructions.
  - Incubate for the required time to allow for the colorimetric or luminescent reaction to occur.
  - Measure the absorbance or luminescence using a plate reader.
- Data Analysis:
  - Normalize the data to the controls, expressing the results as a percentage of inhibition.
  - Plot the percentage of inhibition against the logarithm of the compound concentration.
  - Fit the data to a four-parameter logistic model to determine the IC50 value.[8]
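
Before running a full four-parameter fit, a rough IC50 can be read off the data by interpolating on the log-concentration axis between the two points that bracket 50% inhibition. The sketch below applies this to the hypothetical dose-response table above; the function name is illustrative, and the approach is a swapped-in shortcut rather than the 4PL fit named in the protocol.

```python
import math

def ic50_by_interpolation(concentrations, inhibitions, level=50.0):
    """Interpolate on a log10 axis where inhibition crosses `level`.

    Assumes positive concentrations sorted in increasing order.
    Returns None if the data never cross `level`.
    """
    points = list(zip(concentrations, inhibitions))
    for (c_lo, y_lo), (c_hi, y_hi) in zip(points, points[1:]):
        if y_lo <= level <= y_hi and y_hi != y_lo:
            frac = (level - y_lo) / (y_hi - y_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    return None

conc_nm = [0.1, 1, 10, 100, 1000, 10000, 100000]
inhibition = [2.5, 8.1, 25.3, 50.1, 75.2, 91.8, 97.3]
ic50_estimate = ic50_by_interpolation(conc_nm, inhibition)  # roughly 99 nM
```

The estimate depends only on the two bracketing points, so it is sensitive to noise in either of them; a fitted model uses the whole curve.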

Signaling Pathway Inhibition by a Drug

References

- 1. Logistic function - Wikipedia [en.wikipedia.org]

- 2. researchgate.net [researchgate.net]

- 3. researchgate.net [researchgate.net]

- 4. repligen.com [repligen.com]

- 5. nag.com [nag.com]

- 6. Star Republic: Guide for Biologists [sciencegateway.org]

- 7. Dose-Response Curve Fitting for Ill-Behaved Data (2020-US-30MP-641) - JMP User Community [community.jmp.com]

- 8. Guidelines for accurate EC50/IC50 estimation - PubMed [pubmed.ncbi.nlm.nih.gov]

The Ubiquitous Sigmoid: A Technical Guide to its Role in Nature

Authored for Researchers, Scientists, and Drug Development Professionals

The sigmoid function, characterized by its distinctive "S"-shaped curve, is a fundamental mathematical concept that elegantly describes a multitude of processes in nature. Its gradual start, rapid acceleration, and eventual plateau make it an ideal model for phenomena constrained by limiting factors. This technical guide provides an in-depth exploration of the sigmoid function's role in key biological and pharmacological processes, offering detailed experimental protocols, quantitative data summaries, and visual representations of the underlying mechanisms.

Dose-Response Relationships in Pharmacology

The relationship between the dose of a drug and the magnitude of its biological effect is a cornerstone of pharmacology and is frequently described by a sigmoidal curve.[1] This relationship is critical for determining a drug's efficacy and potency. The position of the curve along the dose axis indicates the potency: a curve shifted toward lower doses (a lower EC50 or IC50) means that a lower dose is required to achieve a given effect.[1] The steepness of the curve describes how sharply the response changes as the dose increases. The plateau of the curve represents the maximum achievable response, indicating the drug's efficacy.[1]

Quantitative Data: Dose-Response Analysis of a Kinase Inhibitor

The following table summarizes the results of an in vitro experiment to determine the half-maximal inhibitory concentration (IC50) of a novel kinase inhibitor. The percentage inhibition of enzyme activity was measured at various inhibitor concentrations.

| Inhibitor Concentration (nM) | % Inhibition |
|---|---|
| 0.1 | 2.5 |
| 1 | 10.2 |
| 10 | 48.9 |
| 50 | 85.1 |
| 100 | 95.3 |
| 500 | 99.1 |
| 1000 | 99.5 |

Experimental Protocol: Determination of IC50 using the MTT Assay

The half-maximal inhibitory concentration (IC50) is a measure of the potency of a substance in inhibiting a specific biological or biochemical function. The MTT (3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide) assay is a colorimetric method used to assess cell viability and is a reliable technique for determining the IC50 value of cytotoxic compounds.[2]

Materials:

- Adherent cancer cell line of interest
- Complete cell culture medium (e.g., DMEM with 10% FBS)
- Test compound (e.g., Edotecarin) dissolved in DMSO[2]
- MTT solution (5 mg/mL in PBS)
- Solubilization solution (e.g., DMSO or a solution of 20% SDS in 50% DMF)
- 96-well cell culture plates
- Multichannel pipette
- Plate reader (spectrophotometer)

Procedure:

- Cell Seeding:
  - Harvest and count cells from a logarithmic growth phase culture.
  - Seed the cells in a 96-well plate at a predetermined optimal density (e.g., 5,000-10,000 cells/well) in 100 µL of complete culture medium.
  - Incubate the plate at 37°C in a humidified 5% CO2 incubator for 24 hours to allow for cell attachment.[1]
- Compound Treatment:
  - Prepare a serial dilution of the test compound in culture medium. A common starting concentration might be 10 mM, with 10-point, 3-fold serial dilutions.[2]
  - Carefully remove the medium from the wells and add 100 µL of the prepared compound dilutions to the respective wells. Include vehicle control (medium with DMSO) and blank (medium only) wells.[2] Each treatment should be performed in triplicate.
  - Incubate the plate for a specified exposure time (e.g., 48 or 72 hours).
- MTT Assay:
  - After the incubation period, add 10-20 µL of MTT solution (5 mg/mL) to each well.[2]
  - Incubate the plate for 2-4 hours at 37°C, allowing viable cells to reduce the yellow MTT to purple formazan crystals.[2]
  - Carefully aspirate the medium containing MTT without disturbing the formazan crystals.[2]
  - Add 100-150 µL of solubilization solution (e.g., DMSO) to each well to dissolve the formazan crystals.[2]
  - Wrap the plate in foil and place it on an orbital shaker for 15 minutes to ensure complete dissolution.
- Data Acquisition and Analysis:
  - Measure the absorbance of each well at a wavelength of 570-590 nm using a plate reader.
  - Subtract the average absorbance of the blank wells from the absorbance of all other wells.
  - Calculate the percentage of inhibition for each concentration using the following formula: % Inhibition = 100 * (1 - (Absorbance of treated well / Absorbance of vehicle control well))
  - Plot the % inhibition against the logarithm of the inhibitor concentration.
  - Fit the data to a sigmoidal dose-response curve using non-linear regression analysis to determine the IC50 value, which is the concentration of the inhibitor that causes 50% inhibition of cell growth.[3]
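
The blank subtraction and % inhibition formula from the data analysis step can be sketched as follows. The absorbance readings are hypothetical triplicates chosen for illustration, and the helper names are not from any library.

```python
def mean(values):
    """Arithmetic mean of replicate readings."""
    return sum(values) / len(values)

def percent_inhibition(treated_abs, vehicle_abs, blank_abs):
    """% inhibition = 100 * (1 - treated / vehicle), after blank subtraction."""
    treated = treated_abs - blank_abs
    vehicle = vehicle_abs - blank_abs
    if vehicle <= 0:
        raise ValueError("vehicle control must exceed the blank")
    return 100.0 * (1.0 - treated / vehicle)

# Hypothetical triplicate absorbance readings (illustrative only).
blank = mean([0.05, 0.05, 0.05])
vehicle = mean([1.05, 1.04, 1.06])
treated = mean([0.56, 0.54, 0.55])
result = percent_inhibition(treated, vehicle, blank)  # about 50 % inhibition
```

Averaging the replicates before normalizing, as done here, is one convention; normalizing each well and then averaging is another, and the two can differ slightly when the controls are noisy.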

Signaling Pathway: Receptor-Ligand Binding

The interaction between a ligand (e.g., a drug) and its cellular receptor is the initial step in many signaling pathways that ultimately lead to a biological response. This binding event can be modeled as a dynamic equilibrium.
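
A minimal sketch of that equilibrium, assuming the simplest case of one ligand binding reversibly to one site (an assumption not stated in the text): the fraction of receptors occupied is [L] / ([L] + Kd), where Kd is the dissociation constant. The Kd value below is hypothetical.

```python
def fractional_occupancy(ligand_conc, kd):
    """Fraction of receptors bound at equilibrium: [L] / ([L] + Kd)."""
    if ligand_conc < 0 or kd <= 0:
        raise ValueError("ligand_conc must be >= 0 and kd must be > 0")
    return ligand_conc / (ligand_conc + kd)

if __name__ == "__main__":
    kd_nm = 10.0  # hypothetical dissociation constant
    for conc in (0.1, 1.0, 10.0, 100.0, 1000.0):
        print(f"{conc:7.1f} nM -> {fractional_occupancy(conc, kd_nm):.3f} occupied")
```

Plotted against the logarithm of the ligand concentration, this occupancy traces a sigmoid whose midpoint sits at [L] = Kd.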


Decoding the Dose: An In-depth Guide to Dose-Response Relationships

For Researchers, Scientists, and Drug Development Professionals

The fundamental principle that "the dose makes the poison" is a cornerstone of pharmacology and toxicology. Understanding the intricate relationship between the dose of a compound and the biological response it elicits is paramount in the development of safe and effective therapeutics. This technical guide provides an in-depth exploration of the foundational concepts of dose-response relationships, offering detailed experimental protocols, quantitative data summaries, and visual representations of key pathways and workflows to empower researchers in their quest for novel drug discoveries.

Core Principles of Dose-Response Relationships

A dose-response relationship describes the magnitude of the response of an organism to different doses of a stimulus, such as a drug or toxin.[1] This relationship is typically visualized as a dose-response curve, a graphical representation that plots the dose or concentration of a compound against the observed biological effect.[2] These curves are essential tools in pharmacology for characterizing the potency and efficacy of a drug.

Key Parameters of Dose-Response Curves

Several key parameters are derived from dose-response curves to quantify a drug's activity:

- EC₅₀ (Half Maximal Effective Concentration): The concentration of a drug that produces 50% of the maximal possible effect.[3] A lower EC₅₀ indicates a higher potency.
- ED₅₀ (Median Effective Dose): The dose of a drug that produces a therapeutic effect in 50% of the population.[4]
- IC₅₀ (Half Maximal Inhibitory Concentration): The concentration of an inhibitor that is required to inhibit a biological process by 50%.[5]
- Potency: The amount of a drug needed to produce a defined effect.[6] It is a comparative measure, with a more potent drug requiring a lower concentration to achieve the same effect as a less potent one.[7]
- Efficacy (Emax): The maximum response achievable from a drug.[6] It reflects the intrinsic activity of the drug once it is bound to its receptor.[8]
- Hill Slope: The steepness of the dose-response curve, which can provide insights into the binding cooperativity of the drug.[4]
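
One way to see what the Hill slope means in practice is to ask how large a concentration change is needed to move from 10% to 90% of the maximal effect. The sketch below assumes the Hill model E = Emax · Cⁿ / (EC₅₀ⁿ + Cⁿ), which is an assumption introduced here for illustration; under it the ratio EC₉₀/EC₁₀ equals 81^(1/n) and does not depend on EC₅₀.

```python
def concentration_for_fraction(fraction, ec50, hill):
    """Concentration giving `fraction` of the maximal effect in the Hill model."""
    return ec50 * (fraction / (1.0 - fraction)) ** (1.0 / hill)

def ec90_over_ec10(hill):
    """Fold change in concentration needed to go from 10% to 90% of Emax."""
    return concentration_for_fraction(0.9, 1.0, hill) / concentration_for_fraction(0.1, 1.0, hill)

if __name__ == "__main__":
    for n in (0.5, 1.0, 2.0, 4.0):
        print(f"Hill slope {n}: EC90/EC10 ~ {ec90_over_ec10(n):.1f}")
```

A slope of 1 therefore spans an 81-fold concentration range between 10% and 90% effect, while a slope of 2 compresses the same transition into a 9-fold range.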

Types of Dose-Response Relationships

Dose-response relationships can be broadly categorized into two main types:

- Graded Dose-Response: Describes a continuous increase in the intensity of a response as the dose increases.[9] This is typically observed in an individual subject or an in vitro system.
- Quantal Dose-Response: Describes an "all-or-none" response, where the effect is either present or absent.[9] These relationships are studied in populations and are used to determine the frequency of a specific outcome at different doses.

Experimental Design and Protocols

The generation of reliable dose-response data hinges on robust experimental design and meticulous execution of protocols. The choice of experimental model, whether in vitro or in vivo, depends on the specific research question and the stage of drug development.

In Vitro Dose-Response Studies

In vitro assays are fundamental for the initial screening and characterization of compounds. They offer a controlled environment to study the direct effects of a drug on cells or specific molecular targets.

Detailed Protocol: Determining IC₅₀ using the MTT Assay

The MTT (3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide) assay is a colorimetric assay for assessing cell metabolic activity, which is often used to infer cell viability and cytotoxicity.[1][4]

Materials:

- Adherent cells in logarithmic growth phase
- Complete culture medium
- Test compound (drug)
- MTT solution (5 mg/mL in sterile PBS)[10]
- Solubilization solution (e.g., DMSO or 0.04 N HCl in isopropanol)[10]
- 96-well flat-bottom sterile cell culture plates
- Multichannel pipette
- Microplate reader

Procedure:

- Cell Seeding:
- Compound Treatment:
  - Prepare a serial dilution of the test compound in complete culture medium. A common approach is to use a 10-point, 3-fold serial dilution.
  - Remove the old medium from the wells and add 100 µL of the medium containing the different concentrations of the test compound. Include vehicle-only controls.
  - Incubate the plate for a specified exposure time (e.g., 48 or 72 hours).[11]
- MTT Addition and Incubation:
- Solubilization and Absorbance Reading:
  - Carefully aspirate the medium containing MTT.
  - Add 100-150 µL of solubilization solution to each well to dissolve the formazan crystals.[10]
  - Gently shake the plate on an orbital shaker for 15 minutes to ensure complete dissolution.[1]
  - Measure the absorbance at 570 nm using a microplate reader. A reference wavelength of 630 nm can be used to reduce background noise.[10]
- Data Analysis:
  - Subtract the average absorbance of the blank wells (medium only) from all other wells.
  - Calculate the percentage of cell viability for each concentration relative to the vehicle control.
  - Plot the percentage of cell viability against the logarithm of the compound concentration and fit a sigmoidal dose-response curve using non-linear regression analysis to determine the IC₅₀ value.[10]
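
The 10-point, 3-fold serial dilution mentioned in the Compound Treatment step can be laid out ahead of time with a short helper. The 100 µM top concentration below is a hypothetical value chosen for illustration; the protocol does not specify one.

```python
def serial_dilution(top_concentration, fold=3.0, points=10):
    """Return `points` concentrations, each `fold` times lower than the previous one."""
    return [top_concentration / fold ** i for i in range(points)]

series_um = serial_dilution(100.0)  # hypothetical 100 µM top concentration

if __name__ == "__main__":
    for well, conc in enumerate(series_um, start=1):
        print(f"point {well:2d}: {conc:10.4f} µM")
```

Ten 3-fold steps cover a 3⁹ (about 20,000-fold) concentration range, which is usually wide enough to capture both plateaus of a sigmoidal curve.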

In Vivo Dose-Response Studies

In vivo studies are essential for evaluating the efficacy and safety of a drug in a whole living organism.[12] These studies are more complex and require careful consideration of factors such as animal model selection, route of administration, and dosing regimen.[13]

General Protocol for an In Vivo Dose-Response Study in Mice:

This protocol provides a general framework. Specific details will vary depending on the drug, disease model, and endpoints being measured.

Materials:

- Appropriate mouse model for the disease under investigation
- Test compound formulated for the chosen route of administration
- Vehicle control
- Calipers for tumor measurement (if applicable)
- Equipment for blood collection and analysis (if applicable)

Procedure:

- Animal Acclimation and Model Induction:
- Randomization and Grouping:
  - Once the disease model is established (e.g., tumors reach a palpable size), randomize the animals into different treatment groups (typically 5-10 animals per group).[15]
  - Groups should include a vehicle control and at least 3-4 dose levels of the test compound.
- Dosing:
- Monitoring and Endpoint Measurement:
  - Monitor the animals regularly for clinical signs of toxicity and general well-being.
  - Measure the primary efficacy endpoint at specified time points. For example, in a cancer model, tumor volume would be measured with calipers two to three times a week.[15]
  - At the end of the study, collect tissues and/or blood for pharmacokinetic and pharmacodynamic analysis.
- Data Analysis:
  - Plot the mean response (e.g., tumor growth inhibition, reduction in blood pressure) against the dose of the compound.
  - Fit the data using appropriate statistical methods to determine the dose-response relationship and identify an effective dose range.
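
As an illustration of the first analysis step, the sketch below computes a mean response per dose group, using tumor growth inhibition as the example endpoint. It assumes one common convention, TGI = 100 × (1 − mean treated volume / mean vehicle volume) at the end of the study; other definitions exist. All tumor volumes and dose levels are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a group's measurements."""
    return sum(values) / len(values)

def tumor_growth_inhibition(treated_volumes, control_volumes):
    """Percent TGI = 100 * (1 - mean treated / mean control)."""
    return 100.0 * (1.0 - mean(treated_volumes) / mean(control_volumes))

# Hypothetical end-of-study tumor volumes in mm^3, keyed by dose in mg/kg.
vehicle = [1000.0, 1100.0, 900.0]
groups = {10: [800.0, 750.0, 850.0], 30: [500.0, 450.0, 550.0], 100: [200.0, 250.0, 150.0]}
tgi_by_dose = {dose: tumor_growth_inhibition(v, vehicle) for dose, v in groups.items()}

if __name__ == "__main__":
    for dose, tgi in sorted(tgi_by_dose.items()):
        print(f"{dose:4d} mg/kg: TGI ~ {tgi:.0f} %")
```

The resulting dose and TGI pairs are what would be plotted in the dose-response graph described above.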

Quantitative Data Summary

The following tables provide examples of dose-response data for different classes of drugs in various experimental systems.

Table 1: In Vitro Potency of Kinase Inhibitors in Cancer Cell Lines

| Compound | Target | Cell Line | Assay | IC₅₀ (nM) |
|---|---|---|---|---|
| Gefitinib | EGFR | SK-BR-3 | Proliferation | ~100 |
| AG825 | ERBB2 | SK-BR-3 | Proliferation | >10,000 |
| GW583340 | EGFR/ERBB2 | SK-BR-3 | Proliferation | ~50 |
| Gefitinib | EGFR | BT474 | Proliferation | ~5,000 |
| AG825 | ERBB2 | BT474 | Proliferation | ~2,000 |
| GW583340 | EGFR/ERBB2 | BT474 | Proliferation | ~1,000 |

Data adapted from a study on tyrosine kinase inhibitors in breast cancer cell lines.[18]

Table 2: Potency of Dopamine Agonists at the D2 Receptor

| Agonist | Assay | EC₅₀ (µM) |
|---|---|---|
| Dopamine | Adenylyl Cyclase Inhibition | 2.0 |
| Bromocriptine | Adenylyl Cyclase Inhibition | ~0.01 |
| l-Sulpiride | Adenylyl Cyclase Inhibition | 0.21 |
| (+)-Butaclamol | Adenylyl Cyclase Inhibition | 0.13 |

Data compiled from studies on D2 dopamine receptor pharmacology.[2][19]

Table 3: In Vivo Efficacy of Antihypertensive Drugs in Animal Models

| Drug Class | Animal Model | Endpoint | Typical Effective Dose Range |
|---|---|---|---|
| ACE Inhibitors | Spontaneously Hypertensive Rat (SHR) | Reduction in Blood Pressure | 1-10 mg/kg/day |
| Angiotensin II Receptor Blockers | Renal Artery Stenosis Model | Reduction in Blood Pressure | 1-10 mg/kg/day |
| Calcium Channel Blockers | Dahl Salt-Sensitive Rat | Reduction in Blood Pressure | 3-30 mg/kg/day |
| Beta-Blockers | Spontaneously Hypertensive Rat (SHR) | Reduction in Blood Pressure | 1-20 mg/kg/day |

This table provides a general overview; specific doses can vary significantly based on the compound and experimental conditions.[20]

Visualizing Core Concepts

Diagrams are powerful tools for illustrating complex biological pathways, experimental workflows, and logical relationships. The following diagrams are generated using the Graphviz DOT language.

Caption: G-protein coupled receptor (GPCR) signaling pathway.

Caption: Mitogen-Activated Protein Kinase (MAPK) signaling pathway.

References

- 1. MTT assay protocol | Abcam [abcam.com]

- 2. Characterization of dopamine receptors mediating inhibition of adenylate cyclase activity in rat striatum - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. G-Protein-Coupled Receptors Signaling to MAPK/Erk | Cell Signaling Technology [cellsignal.com]

- 4. Protocol for Determining the IC50 of Drugs on Adherent Cells Using MTT Assay - Creative Bioarray | Creative Bioarray [creative-bioarray.com]

- 5. mdpi.com [mdpi.com]

- 6. Dose-Response Data Analysis Workflow [cran.r-project.org]

- 7. storage.imrpress.com [storage.imrpress.com]

- 8. MAPK signaling pathway | Abcam [abcam.com]

- 9. researchgate.net [researchgate.net]

- 10. benchchem.com [benchchem.com]

- 11. texaschildrens.org [texaschildrens.org]

- 12. benchchem.com [benchchem.com]

- 13. researchgate.net [researchgate.net]

- 14. researchgate.net [researchgate.net]

- 15. Bridging the gap between in vitro and in vivo: Dose and schedule predictions for the ATR inhibitor AZD6738 - PMC [pmc.ncbi.nlm.nih.gov]

- 16. ichor.bio [ichor.bio]

- 17. researchgate.net [researchgate.net]

- 18. researchgate.net [researchgate.net]

- 19. Agonist-induced desensitization of D2 dopamine receptors in human Y-79 retinoblastoma cells - PubMed [pubmed.ncbi.nlm.nih.gov]

- 20. Animal Models of Hypertension: A Scientific Statement From the American Heart Association - PMC [pmc.ncbi.nlm.nih.gov]

The Theoretical Bedrock of the Sigmoid Activation Function: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Introduction

In the landscape of neural networks and machine learning, the sigmoid activation function holds a place of historical and foundational importance. Its characteristic 'S'-shaped curve was a pivotal element in the development of early neural networks, enabling them to learn complex patterns through the revolutionary backpropagation algorithm.[1] This technical guide provides an in-depth exploration of the theoretical underpinnings of the sigmoid function, its mathematical properties, probabilistic interpretations, and its role in seminal experiments that paved the way for modern deep learning.

Mathematical and Theoretical Foundations

The sigmoid function, in its most common form, is the logistic function, defined by the formula:

σ(z) = 1 / (1 + e^(-z)) [2]

where 'z' is the input to the function. This elegant mathematical formulation gives rise to several key properties that made it an attractive choice for early neural network researchers.

Core Mathematical Properties

The utility of the sigmoid function in neural networks stems from a combination of its mathematical characteristics:

- Range: The output of the sigmoid function is always between 0 and 1, regardless of the input value.[3] This property is particularly useful for interpreting the output of a neuron as a probability.
- Monotonicity: The function is monotonically increasing; as the input increases, the output also increases.[3]
- Differentiability: The sigmoid function is differentiable everywhere, a crucial requirement for the gradient-based learning methods used in backpropagation.[4] Its derivative is conveniently expressed in terms of the function itself: σ'(z) = σ(z)(1 - σ(z)).[5]
- Non-linearity: The sigmoid function is non-linear, which allows multi-layer networks to learn complex, non-linear decision boundaries.[4] A network composed solely of linear activation functions would be equivalent to a single linear model, severely limiting its learning capacity.[6]
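
The derivative identity σ'(z) = σ(z)(1 − σ(z)) can be checked numerically. The sketch below compares the analytic form with a central finite difference; the helper names are illustrative, and the finite-difference step size is an arbitrary choice.

```python
import math

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    """Analytic derivative: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

def numeric_derivative(f, z, h=1e-6):
    """Central finite difference, used only as an independent check."""
    return (f(z + h) - f(z - h)) / (2.0 * h)

if __name__ == "__main__":
    for z in (-3.0, 0.0, 3.0):
        print(z, sigmoid_prime(z), numeric_derivative(sigmoid, z))
```

The derivative peaks at z = 0, where it equals 0.25, and shrinks toward zero in both tails.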

Probabilistic Interpretation: A Bridge from Statistics

The sigmoid function's output can be interpreted as a probability, a concept deeply rooted in statistical theory, particularly logistic regression.[7] Logistic regression is a statistical model used for binary classification problems, where the goal is to predict the probability of a binary outcome (e.g., an event occurring or not).

The core of logistic regression is the assumption that the log-odds of the positive class is a linear function of the input features. The log-odds, or logit function, is the logarithm of the odds (p / (1-p)), where 'p' is the probability of the positive class. By solving for 'p', we arrive at the sigmoid function. This derivation provides a strong theoretical justification for using the sigmoid function to model probabilities in classification tasks.[8]
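
The step from the log-odds to the sigmoid can be written out explicitly. In the derivation below, z stands for the linear function of the input features; the symbols w and b for the weights and bias are notation introduced here for illustration.

```latex
\begin{align}
\ln\frac{p}{1-p} &= z, \qquad z = \mathbf{w}^{\top}\mathbf{x} + b \\
\frac{p}{1-p} &= e^{z} \\
p &= e^{z}\,(1-p) \\
p\,(1 + e^{z}) &= e^{z} \\
p &= \frac{e^{z}}{1+e^{z}} = \frac{1}{1+e^{-z}} = \sigma(z)
\end{align}
```

The sigmoid is therefore the inverse of the logit function, which is why it appears whenever a linear score has to be mapped to a probability.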

This probabilistic framing is a key reason for the sigmoid function's prevalence in the output layer of neural networks for binary classification problems. The network's output can be directly interpreted as the predicted probability of the positive class.[9]

The Biological Analogy: A Glimpse into Neural Inspiration

While artificial neural networks are a significant abstraction of their biological counterparts, the initial inspiration was drawn from the functioning of neurons in the brain.[10] A biological neuron fires an action potential only when the cumulative input signal surpasses a certain threshold.[11] The sigmoid function, with its gradual transition from a non-firing state (close to 0) to a firing state (close to 1), was seen as a smooth, differentiable approximation of this thresholding behavior.[7] This continuous nature was essential for the backpropagation algorithm, which relies on gradients to update the network's weights.

Seminal Experiments and Methodologies

The theoretical appeal of the sigmoid function was solidified by its successful application in early, groundbreaking experiments in neural networks. These experiments demonstrated the power of multi-layer networks trained with backpropagation to learn complex tasks.

The Backpropagation Revolution: Rumelhart, Hinton & Williams (1986)

The 1986 paper "Learning representations by back-propagating errors" by David Rumelhart, Geoffrey Hinton, and Ronald Williams is a landmark publication that was instrumental in popularizing the backpropagation algorithm.[12] This work showed how neural networks with hidden layers and non-linear activation functions, like the sigmoid, could learn internal representations of data.

Experimental Protocol:

One of the key problems tackled in this paper to illustrate the power of backpropagation was the XOR (exclusive OR) problem. This was a significant challenge because XOR is not linearly separable, meaning a single-layer perceptron cannot solve it.

- Network Architecture: A multi-layer perceptron with an input layer, a hidden layer with sigmoid activation units, and an output layer with a sigmoid activation unit. A common architecture was 2 input neurons, 2 hidden neurons, and 1 output neuron.
- Dataset: The training data consisted of the four possible input patterns for the XOR function: (0,0), (0,1), (1,0), and (1,1), with their corresponding target outputs (0, 1, 1, 0).
- Learning Algorithm: The backpropagation algorithm was used to iteratively adjust the weights of the network to minimize the squared error between the network's output and the target output.
- Activation Function: The sigmoid function was used in the hidden and output layers to introduce non-linearity and allow the network to learn the non-linear decision boundary of the XOR problem.
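
To show that a 2-2-1 sigmoid network can represent XOR at all, the sketch below uses hand-picked weights rather than weights learned by backpropagation. These values are an illustrative assumption and are not taken from the 1986 paper: one hidden unit approximates OR, the other approximates NAND, and the output unit approximates AND of the two.

```python
import math

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def xor_network(x1, x2):
    """Forward pass of a 2-2-1 sigmoid network with hand-picked weights."""
    h_or = sigmoid(20.0 * x1 + 20.0 * x2 - 10.0)        # close to 1 if either input is 1
    h_nand = sigmoid(-20.0 * x1 - 20.0 * x2 + 30.0)     # close to 0 only if both inputs are 1
    return sigmoid(20.0 * h_or + 20.0 * h_nand - 30.0)  # close to 1 only if both hidden units fire

# Round each output to the nearest class label.
predictions = {(a, b): round(xor_network(a, b)) for a in (0, 1) for b in (0, 1)}

if __name__ == "__main__":
    for inputs, label in predictions.items():
        print(inputs, "->", label)
```

Backpropagation's contribution was finding weights of this kind automatically from the four training patterns, instead of requiring them to be designed by hand.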

Quantitative Data Summary:

The success of the experiment was not measured in terms of a single accuracy metric but rather by the network's ability to converge to a solution that correctly classified all four input patterns. The paper demonstrated that through backpropagation, the network could learn the appropriate weights to solve the XOR problem, something that was not possible with simpler models.

Handwritten Digit Recognition: LeCun et al. (1989)

A pivotal practical application of neural networks with sigmoid activations was demonstrated by Yann LeCun and his colleagues in their 1989 paper on handwritten digit recognition.[13] This work laid the groundwork for many modern applications of neural networks in image recognition.

Experimental Protocol:

- Network Architecture: A multi-layer neural network with a more complex, constrained architecture specifically designed for image recognition. It featured layers of feature extraction followed by classification layers. Sigmoid activation functions were used throughout the network.
- Dataset: The experiments were conducted on a database of handwritten digits obtained from the U.S. Postal Service. The images were preprocessed and normalized to a fixed size.
- Learning Algorithm: The backpropagation algorithm was used to train the network.
- Performance Metrics: The performance of the network was evaluated based on its error rate on a test set of digits that were not used during training.

Quantitative Data Summary:

| Metric | Value |
|---|---|
| Error Rate | 1% |
| Rejection Rate | ~9% |

This level of performance on a real-world task was a significant achievement at the time and showcased the potential of neural networks with sigmoid activations for practical applications.

Experimental and Logical Workflows

The process of training a neural network with a sigmoid activation function using backpropagation follows a clear, logical workflow.
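
The workflow can be sketched on the smallest possible case: a single sigmoid unit trained by full-batch gradient descent on the OR function with a squared-error loss. This is a simplification chosen for illustration (one unit, no hidden layer, zero initial weights, an arbitrary learning rate), but the four numbered steps are the same ones repeated layer by layer in a deeper network.

```python
import math

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=0.5, epochs=2000):
    """Full-batch gradient descent on one sigmoid unit with squared error."""
    w1 = w2 = b = 0.0
    losses = []
    for _ in range(epochs):
        g1 = g2 = gb = loss = 0.0
        for (x1, x2), target in data:
            out = sigmoid(w1 * x1 + w2 * x2 + b)   # 1. forward pass
            err = out - target
            loss += 0.5 * err ** 2                  # 2. squared-error loss
            delta = err * out * (1.0 - out)         # 3. backward pass via the chain rule
            g1 += delta * x1
            g2 += delta * x2
            gb += delta
        w1, w2, b = w1 - lr * g1, w2 - lr * g2, b - lr * gb  # 4. weight update
        losses.append(loss)                         # loss measured before this update
    return (w1, w2, b), losses

or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, losses = train_neuron(or_data)
```

The `delta` term is where the sigmoid's derivative σ(z)(1 − σ(z)) enters the update, which is the reason differentiability matters for this algorithm.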

Limitations and the Rise of Alternatives

Despite its foundational role, the sigmoid function has limitations that have led to the development of alternative activation functions. The most significant of these is the vanishing gradient problem.[14] For inputs of large magnitude, whether positive or negative, the sigmoid function's gradient becomes extremely close to zero. In deep networks, this can cause the gradients to become vanishingly small as they are propagated backward through many layers, effectively halting the learning process for the earlier layers.
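
A rough numerical illustration of the effect: the sigmoid's derivative never exceeds 0.25, so a product of such derivatives across layers shrinks geometrically. The sketch below ignores the weight terms that also multiply the gradient, so it is a simplified best-case bound rather than a full analysis.

```python
import math

def sigmoid_prime(z):
    """Derivative of the sigmoid: sigma(z) * (1 - sigma(z))."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def gradient_upper_bound(num_layers):
    """Largest possible product of sigmoid derivatives across layers (weights ignored)."""
    return sigmoid_prime(0.0) ** num_layers

if __name__ == "__main__":
    print("derivative at z = 10:", sigmoid_prime(10.0))
    for depth in (1, 5, 10, 20):
        print(f"{depth:2d} layers: factor <= {gradient_upper_bound(depth):.3e}")
```

Even in this best case, ten sigmoid layers scale the gradient by less than one part in a million, which is consistent with the slow learning observed in early layers.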

This and other issues, such as the output not being zero-centered, have led to the widespread adoption of other activation functions in modern deep learning, most notably the Rectified Linear Unit (ReLU) and its variants.

Conclusion

The sigmoid activation function represents a critical chapter in the history of artificial intelligence. Its elegant mathematical properties, its deep connection to probabilistic theory, and its role in the success of the backpropagation algorithm were instrumental in moving the field of neural networks forward. While its use in the hidden layers of deep networks has waned, it remains a valuable tool, particularly in the output layer for binary classification problems. For researchers and scientists, understanding the theoretical basis of the sigmoid function provides not only a historical perspective but also a fundamental understanding of the principles that continue to shape the field of machine learning today.

References

- 1. builtin.com [builtin.com]

- 2. Introduction to Activation Functions in Neural Networks | DataCamp [datacamp.com]

- 3. [PDF] Learning representations by back-propagating errors | Semantic Scholar [semanticscholar.org]

- 4. semanticscholar.org [semanticscholar.org]

- 5. proceedings.neurips.cc [proceedings.neurips.cc]

- 6. scispace.com [scispace.com]

- 7. machine learning - Artificial Neural Network- why usually use sigmoid activation function in the hidden layer instead of tanh-sigmoid activation function? - Stack Overflow [stackoverflow.com]

- 8. cs.toronto.edu [cs.toronto.edu]

- 9. scribd.com [scribd.com]

- 10. How do you evaluate the performance of a neural network? [milvus.io]

- 11. arxiv.org [arxiv.org]

- 12. iro.umontreal.ca [iro.umontreal.ca]

- 13. Handwritten Digit Recognition with a Back-Propagation Network [proceedings.neurips.cc]

- 14. medium.com [medium.com]

Discovering Sigmoid Patterns in Experimental Data: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Introduction

In experimental biology and drug discovery, the relationship between the dose of a substance and its resulting biological effect is often not linear. Instead, it frequently follows a characteristic "S"-shaped or sigmoid pattern. This sigmoidal relationship is fundamental to understanding the efficacy and potency of therapeutic agents, the kinetics of enzymatic reactions, and various other biological processes.[1][2] A sigmoid curve graphically represents the dose-response relationship, illustrating how the effect of a drug changes with increasing concentration.[3] At low doses, the response is minimal, followed by a rapid increase as the dose rises, and finally, the response plateaus as it approaches its maximum effect.[2]

The analysis of these curves is crucial for making informed decisions in research and development. It allows scientists to quantify key parameters such as the half-maximal effective concentration (EC50) or inhibitory concentration (IC50), which are measures of a drug's potency.[3][4] Furthermore, the steepness of the curve, often described by the Hill coefficient, provides insights into the cooperativity of the binding process.[1] This guide provides a comprehensive overview of the methodologies for identifying and analyzing sigmoid patterns in experimental data, with a focus on practical applications in a laboratory setting.

Experimental Design for Sigmoid Response Analysis

The ability to accurately model a sigmoid response is highly dependent on a well-designed experiment. The primary goal is to capture the full dynamic range of the biological response, from the baseline to the maximal effect.

Key Considerations for Experimental Design:

- Dose Selection: A wide range of concentrations is essential. This typically involves a logarithmic series of dilutions to ensure adequate data points along the entire curve, especially in the steep portion.

- Replicates: Performing multiple replicates at each concentration is critical to assess the variability and reliability of the data.

- Controls: Including appropriate positive and negative controls is fundamental for establishing the baseline and maximal responses.

- Endpoint Measurement: The chosen assay must have a measurable output that is directly proportional to the biological effect being studied. The measurements should be taken after the system has reached a steady state.[5]
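As an illustration of the dose-selection point, a logarithmic dilution series can be generated programmatically. The following is a minimal sketch; the function name `dilution_series`, the 10 µM top dose, and the 10-fold step are illustrative assumptions, not values prescribed by this guide.

```python
def dilution_series(top_conc, dilution_factor, n_points):
    """Return serial-dilution concentrations, highest first.

    Each step divides the previous concentration by `dilution_factor`,
    so the points are evenly spaced on a logarithmic axis.
    """
    if top_conc <= 0 or dilution_factor <= 1 or n_points < 1:
        raise ValueError("need top_conc > 0, dilution_factor > 1, n_points >= 1")
    return [top_conc / dilution_factor ** i for i in range(n_points)]


# Hypothetical layout: 10 µM top dose, 10-fold steps, 5 doses.
doses_um = dilution_series(10.0, 10, 5)
print(doses_um)
```

A smaller dilution factor (for example 3-fold or half-log steps) places more points in the steep portion of the curve at the cost of a narrower overall range for the same number of wells.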

Common Experimental Assays Yielding Sigmoid Data

Several standard laboratory techniques are known to produce sigmoid dose-response curves. Below are detailed protocols for three such assays.

Cell Viability Assay (MTT Assay)

The MTT assay is a colorimetric method used to assess cell metabolic activity, which serves as an indicator of cell viability, proliferation, and cytotoxicity.[6] It is based on the reduction of the yellow tetrazolium salt (3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide or MTT) to purple formazan crystals by metabolically active cells.[6][7]

Experimental Protocol:

- Cell Seeding: Seed cells in a 96-well plate at a density of 10⁴–10⁵ cells/well in 100 µL of cell culture medium.[8]

- Compound Treatment: Add varying concentrations of the test compound to the wells. Include vehicle-only wells as negative controls.

- Incubation: Incubate the plate for the desired exposure period (e.g., 24, 48, or 72 hours) at 37°C in a CO₂ incubator.[6][8]

- MTT Addition: Add 10 µL of a 5 mg/mL MTT solution in PBS to each well.[8]

- Formazan Formation: Incubate the plate for 3-4 hours at 37°C to allow for the formation of formazan crystals.[6][8]

- Solubilization: Add 100-150 µL of a solubilization solution (e.g., SDS-HCl or DMSO) to each well to dissolve the formazan crystals.[7][8]

- Absorbance Reading: Measure the absorbance of the samples at a wavelength between 550 and 600 nm using a microplate reader.[6] A reference wavelength of 630 nm or higher can be used to reduce background noise.[9]
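Raw absorbance values are usually converted to a relative response before curve fitting. The sketch below shows one common normalization, assuming medium-only blank wells are available for background subtraction (blank wells are an assumption here; the protocol above only specifies vehicle-only controls). The function name and all readings are hypothetical.

```python
from statistics import mean


def percent_viability(treated_abs, vehicle_abs, blank_abs):
    """Convert raw absorbance readings to percent viability.

    The mean blank is subtracted from every reading, and the result is
    scaled so that the mean vehicle-only signal equals 100 %.
    """
    blank = mean(blank_abs)
    vehicle_signal = mean(vehicle_abs) - blank
    if vehicle_signal <= 0:
        raise ValueError("vehicle control signal must exceed the blank")
    return [100.0 * (a - blank) / vehicle_signal for a in treated_abs]


# Hypothetical absorbance readings from one plate.
blank_wells = [0.05, 0.05]      # medium only, no cells (assumed control)
vehicle_wells = [1.05, 1.05]    # cells + vehicle, no compound
treated_wells = [1.05, 0.55, 0.05]

viability = percent_viability(treated_wells, vehicle_wells, blank_wells)
print([round(v, 1) for v in viability])
```

Normalizing this way fixes the expected plateaus near 100 % and 0 %, which makes the fitted Top and Bottom easier to sanity-check.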

Enzyme-Linked Immunosorbent Assay (ELISA)

ELISA is a widely used immunological assay to quantify proteins, antibodies, and hormones. In a competitive ELISA format, plotting the signal against the logarithm of the analyte concentration typically yields a sigmoid curve, with the signal decreasing as the analyte concentration increases.

Experimental Protocol:

- Coating: Coat the wells of a 96-well plate with a known amount of antigen or antibody.

- Blocking: Block the remaining protein-binding sites in the coated wells to prevent non-specific binding.

- Sample/Standard Addition: Add a series of dilutions of the standard and unknown samples to the wells. In a competitive assay, a fixed amount of labeled antigen/antibody is also added.

- Incubation: Incubate the plate to allow for the binding reaction to occur.

- Washing: Wash the plate to remove any unbound substances.

- Detection: Add an enzyme-conjugated secondary antibody that binds to the target.

- Substrate Addition: Add a substrate that is converted by the enzyme into a colored product.

- Absorbance Reading: Measure the absorbance of the colored product using a microplate reader at the appropriate wavelength.
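Once a sigmoid standard curve has been fitted to the standards, the concentrations of unknown samples are typically obtained by inverting that curve. The following sketch assumes a four-parameter logistic standard curve written so that a positive Hill slope gives a rising signal; a competitive format is represented by a negative slope. The parameter values are hypothetical placeholders for fitted values.

```python
def four_pl(x, bottom, top, ec50, hill):
    """4PL curve: approaches `bottom` at low x and `top` at high x when hill > 0."""
    return bottom + (top - bottom) / (1.0 + (ec50 / x) ** hill)


def inverse_four_pl(y, bottom, top, ec50, hill):
    """Back-calculate the concentration that produces signal `y`."""
    lo, hi = sorted((bottom, top))
    if not lo < y < hi:
        raise ValueError("signal lies outside the fitted plateaus")
    ratio = (top - bottom) / (y - bottom) - 1.0
    return ec50 / ratio ** (1.0 / hill)


# Hypothetical fitted standard curve for a competitive assay:
# the signal falls as the analyte concentration rises, so hill < 0.
params = dict(bottom=0.1, top=2.1, ec50=10.0, hill=-1.0)

signal = four_pl(2.5, **params)               # signal of a 2.5-unit standard
recovered = inverse_four_pl(signal, **params)  # should return about 2.5
print(round(signal, 3), round(recovered, 3))
```

Signals at or beyond the plateaus cannot be inverted reliably, which is why the function rejects them rather than extrapolating.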

Ligand-Binding Assay

Ligand-binding assays (LBAs) are used to measure the interaction between a ligand and a receptor or other macromolecule.[10] These assays are fundamental in pharmacology for characterizing drug-receptor interactions.[11]

Experimental Protocol (Radioligand Binding Assay Example):

- Membrane Preparation: Prepare cell membranes or purified receptors that express the target of interest.

- Reaction Mixture: In a series of tubes, combine the membrane preparation, a fixed concentration of a radiolabeled ligand, and varying concentrations of an unlabeled competing ligand.

- Incubation: Incubate the mixture to allow the binding to reach equilibrium.

- Separation: Separate the bound from the unbound radioligand, typically by rapid filtration through a glass fiber filter.

- Radioactivity Measurement: Measure the radioactivity retained on the filter using a scintillation counter.

- Data Analysis: Plot the percentage of bound radioligand against the concentration of the unlabeled competitor.
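The data-analysis step can be sketched as a simple normalization of the scintillation counts. This example assumes two reference measurements that are not spelled out in the protocol above: total binding (no competitor) and nonspecific binding (counts remaining at a saturating competitor concentration). All counts and concentrations are hypothetical.

```python
def percent_bound(counts_cpm, total_cpm, nonspecific_cpm):
    """Express filter counts as a percentage of specific radioligand binding."""
    window = total_cpm - nonspecific_cpm
    if window <= 0:
        raise ValueError("total binding must exceed nonspecific binding")
    return [100.0 * (c - nonspecific_cpm) / window for c in counts_cpm]


# Hypothetical competitor concentrations (nM) and counts per minute.
competitor_nm = [0.1, 10.0, 1000.0]
counts = [5200.0, 2700.0, 200.0]

curve = list(zip(competitor_nm,
                 percent_bound(counts, total_cpm=5200.0, nonspecific_cpm=200.0)))
print(curve)
```

The resulting (concentration, percent bound) pairs are the points that would be plotted on a logarithmic concentration axis and passed to a curve-fitting routine.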

Data Analysis and Curve Fitting

Once the experimental data is collected, the next step is to fit it to a mathematical model that describes the sigmoid relationship. The most common model used is the four-parameter logistic (4PL) equation, also known as the Hill equation.[1][12]

The Four-Parameter Logistic Equation:

Y = Bottom + (Top - Bottom) / (1 + (EC50 / X)^HillSlope)

Where:

- Y: The measured response

- X: The concentration of the substance

- Bottom: The minimum response (baseline)

- Top: The maximum response (plateau)

- EC50: The concentration that produces a response halfway between the Bottom and Top. It is a measure of potency.

- HillSlope: The Hill coefficient, which describes the steepness of the curve.

Non-linear regression analysis is the standard method for fitting experimental data to the 4PL model.[5] Various software packages are available for this purpose.[4][13]
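As one illustration of such a fit, the sketch below uses SciPy's `curve_fit` on noise-free synthetic data, purely to check that the regression recovers the parameters the data were generated from. The model is written with EC50/X inside the power so that a positive HillSlope rises from Bottom to Top as X increases. The parameter values, starting guesses, and bounds are all illustrative assumptions, and this is not the procedure of any specific software package cited here.

```python
import numpy as np
from scipy.optimize import curve_fit


def four_pl(x, bottom, top, ec50, hill):
    """4PL model: response rises from `bottom` to `top` for hill > 0."""
    return bottom + (top - bottom) / (1.0 + (ec50 / x) ** hill)


# Synthetic, noise-free data from hypothetical "true" parameters.
conc = np.logspace(-3, 2, 11)            # 0.001 to 100, log-spaced
true_params = (5.0, 95.0, 0.5, 1.2)      # bottom, top, ec50, hill
response = four_pl(conc, *true_params)

# Starting guesses taken from the data; bounds keep ec50 and hill positive.
p0 = [response.min(), response.max(), np.median(conc), 1.0]
bounds = ([-np.inf, -np.inf, 1e-12, 0.01],
          [np.inf, np.inf, np.inf, 10.0])

popt, pcov = curve_fit(four_pl, conc, response, p0=p0, bounds=bounds)
bottom, top, ec50, hill = popt
print(f"EC50 = {ec50:.3g}, HillSlope = {hill:.3g}")
```

With real, noisy data the diagonal of `pcov` gives variance estimates for the fitted parameters, which is a useful first check on whether the plateaus and EC50 are well constrained by the chosen dose range.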

Quantitative Data Summary

The following table summarizes the key parameters obtained from sigmoid curve fitting and their typical interpretation in a pharmacological context.

| Parameter | Description | Typical Interpretation in Drug Development |
|---|---|---|
| EC50 / IC50 | The concentration of a drug that gives half-maximal response (EC50) or inhibition (IC50).[3] | A measure of the drug's potency. A lower EC50/IC50 indicates a more potent drug.[2] |
| Hill Coefficient (nH) | A measure of the steepness of the dose-response curve.[1] | Indicates the cooperativity of ligand binding. nH > 1 suggests positive cooperativity, nH < 1 suggests negative cooperativity, and nH = 1 indicates non-cooperative binding. |
| Emax | The maximal effect of the drug. | A measure of the drug's efficacy. It represents the maximum biological response the drug can produce.[2] |

Visualizing Experimental Workflows and Signaling Pathways

Understanding the underlying biological processes and experimental procedures is facilitated by clear visual representations.

Experimental Workflow for Dose-Response Analysis

The following diagram illustrates a typical workflow for conducting a dose-response experiment and analyzing the resulting data.

Simplified GPCR Signaling Pathway

Many signaling pathways, such as those mediated by G-protein coupled receptors (GPCRs), exhibit sigmoid responses. The amplification at different stages of the cascade contributes to this characteristic.

Logical Relationship in Competitive Binding

In a competitive binding assay, an unlabeled ligand competes with a labeled ligand for the same binding site. This relationship can be visualized as follows:
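The same competition can also be expressed numerically. The sketch below assumes the simplest textbook case: a single binding site at equilibrium, with negligible depletion of either ligand. Under those assumptions the fraction of receptor occupied by the labeled ligand is [L] / ([L] + Kd · (1 + [I] / Ki)), where [I] is the competitor concentration. The function name and all constants are hypothetical.

```python
def labeled_fraction_bound(labeled, kd, competitor, ki):
    """Fractional receptor occupancy by the labeled ligand.

    Assumes one-site, reversible, equilibrium binding in which the
    competitor raises the apparent Kd of the labeled ligand by the
    factor (1 + competitor / ki).
    """
    return labeled / (labeled + kd * (1.0 + competitor / ki))


# Hypothetical constants, all in the same concentration units.
labeled, kd, ki = 1.0, 1.0, 2.0

no_competitor = labeled_fraction_bound(labeled, kd, 0.0, ki)

# Under these assumptions the competitor concentration that halves the
# signal is ki * (1 + labeled / kd), the Cheng-Prusoff relationship.
ic50 = ki * (1.0 + labeled / kd)
at_ic50 = labeled_fraction_bound(labeled, kd, ic50, ki)
print(no_competitor, ic50, at_ic50)
```

Sweeping `competitor` over a logarithmic range with this function traces out the falling sigmoid that a competition experiment is expected to produce.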

Conclusion

References

- 1. Dose–response relationship - Wikipedia [en.wikipedia.org]

- 2. fiveable.me [fiveable.me]

- 3. lifesciences.danaher.com [lifesciences.danaher.com]

- 4. Dose Response Relationships/Sigmoidal Curve Fitting/Enzyme Kinetics [originlab.com]

- 5. How Do I Perform a Dose-Response Experiment? - FAQ 2188 - GraphPad [graphpad.com]

- 6. MTT Assay Protocol for Cell Viability and Proliferation [merckmillipore.com]

- 7. broadpharm.com [broadpharm.com]

- 8. CyQUANT MTT Cell Proliferation Assay Kit Protocol | Thermo Fisher Scientific - JP [thermofisher.com]

- 9. Cell Viability Assays - Assay Guidance Manual - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 10. Ligand binding assay - Wikipedia [en.wikipedia.org]

- 11. Ligand Binding Assays: Definitions, Techniques, and Tips to Avoid Pitfalls - Fluidic Sciences Ltd [fluidic.com]

- 12. Application of the four-parameter logistic model to bioassay: comparison with slope ratio and parallel line models - PubMed [pubmed.ncbi.nlm.nih.gov]

- 13. youtube.com [youtube.com]

Methodological & Application

Application Notes and Protocols: Applying the Sigmoid Function to Dose-Response Data

For Researchers, Scientists, and Drug Development Professionals

These application notes provide a comprehensive guide to utilizing the sigmoid function for the analysis of dose-response data. This document outlines the theoretical basis, experimental protocols, data analysis procedures, and interpretation of results, facilitating robust and reproducible research in pharmacology and drug development.

Introduction to Dose-Response Relationships and the Sigmoid Model

Dose-response relationships are a cornerstone of pharmacology, describing the magnitude of the response of an organism to a drug or stimulus as a function of the dose or concentration.[1][2] These relationships are typically represented by a sigmoidal (S-shaped) curve when the response is plotted against the logarithm of the concentration.[2][3] The sigmoid model, particularly the four-parameter logistic (4PL) model, is widely used to fit and analyze this type of data due to its ability to accurately describe the characteristic S-shape of the curve.[4][5]

The four-parameter logistic equation is defined as:

Y = Bottom + (Top - Bottom) / (1 + 10^((LogEC50 - X) * HillSlope)) [6]

Where:

- Y is the measured response.

- X is the logarithm of the drug concentration.[7]

- Bottom is the minimum response (the lower plateau of the curve).[6]

- Top is the maximum response (the upper plateau of the curve).[6]

- LogEC50 is the logarithm of the concentration that produces a response halfway between the Bottom and Top plateaus. The EC50 (half maximal effective concentration) is a key measure of a drug's potency.[7][8][9] For inhibitory responses, this is referred to as the IC50 (half maximal inhibitory concentration).[6][8]

- HillSlope (or Hill coefficient) describes the steepness of the curve.[6] A HillSlope greater than 1.0 indicates a steeper curve, while a value less than 1.0 indicates a shallower curve.[6]
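To make the roles of these parameters concrete, the log-concentration form of the equation can be evaluated directly. The following is a minimal sketch with hypothetical parameter values (a response scaled from 0 to 100 and a LogEC50 of -6, i.e. an EC50 of 1 µM when concentrations are expressed in molar units).

```python
def four_pl_log(log_conc, bottom, top, log_ec50, hill_slope):
    """4PL model with X given as log10(concentration)."""
    exponent = (log_ec50 - log_conc) * hill_slope
    return bottom + (top - bottom) / (1.0 + 10.0 ** exponent)


# Hypothetical parameters.
bottom, top, log_ec50, hill_slope = 0.0, 100.0, -6.0, 1.0

# At X = LogEC50 the response is halfway between Bottom and Top.
midpoint = four_pl_log(-6.0, bottom, top, log_ec50, hill_slope)

# One log unit either side of LogEC50 with a HillSlope of 1.0.
below = four_pl_log(-7.0, bottom, top, log_ec50, hill_slope)
above = four_pl_log(-5.0, bottom, top, log_ec50, hill_slope)

# A larger HillSlope moves the same point closer to the plateau.
above_steep = four_pl_log(-5.0, bottom, top, log_ec50, 2.0)

ec50_molar = 10.0 ** log_ec50
print(midpoint, round(below, 2), round(above, 2), round(above_steep, 2))
```

With a HillSlope of 1.0, moving one log unit above or below LogEC50 takes the response to roughly 91 % or 9 % of the span between the plateaus; a slope of 2.0 reaches about 99 % at the same point.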

Experimental Protocol: Generating Dose-Response Data

A well-designed experiment is crucial for obtaining high-quality dose-response data. The following protocol provides a general framework for an in vitro cell-based assay.

Objective: To determine the dose-response relationship of a compound on a specific cellular response (e.g., cell viability, enzyme activity, reporter gene expression).

Materials:

- Cell line of interest

- Cell culture medium and supplements

- Compound to be tested

- Assay-specific reagents (e.g., CellTiter-Glo®, ELISA kit)

- Multi-well plates (e.g., 96-well, 384-well)

- Multichannel pipettes and other standard laboratory equipment

- Plate reader (e.g., spectrophotometer, luminometer, fluorometer)

Procedure:

-

Cell Seeding:

-

Culture cells to the appropriate confluency.

-

Trypsinize and resuspend cells in fresh medium to achieve a single-cell suspension.

-