MPAC


Properties

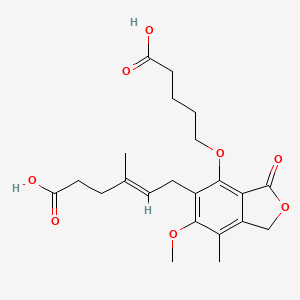

| Property | Value | Source |
|---|---|---|
| IUPAC Name | (E)-6-[4-(4-carboxybutoxy)-6-methoxy-7-methyl-3-oxo-1H-2-benzofuran-5-yl]-4-methylhex-4-enoic acid | PubChem |
| InChI | InChI=1S/C22H28O8/c1-13(8-10-18(25)26)7-9-15-20(28-3)14(2)16-12-30-22(27)19(16)21(15)29-11-5-4-6-17(23)24/h7H,4-6,8-12H2,1-3H3,(H,23,24)(H,25,26)/b13-7+ | PubChem |
| InChI Key | PFECETFRFNZFKJ-NTUHNPAUSA-N | PubChem |
| Canonical SMILES | CC1=C2COC(=O)C2=C(C(=C1OC)CC=C(C)CCC(=O)O)OCCCCC(=O)O | PubChem |
| Isomeric SMILES | CC1=C2COC(=O)C2=C(C(=C1OC)C/C=C(\C)/CCC(=O)O)OCCCCC(=O)O | PubChem |
| Molecular Formula | C22H28O8 | PubChem |
| DSSTOX Substance ID | DTXSID10858155 | EPA DSSTox |
| Molecular Weight | 420.5 g/mol | PubChem |
| CAS No. | 931407-27-1 | EPA DSSTox |

| Source | URL | Description |
|---|---|---|
| PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| EPA DSSTox | https://comptox.epa.gov/dashboard/DTXSID10858155 | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |

EPA DSSTox record name: (4E)-6-[4-(4-Carboxybutoxy)-6-methoxy-7-methyl-3-oxo-1,3-dihydro-2-benzofuran-5-yl]-4-methylhex-4-enoic acid

Foundational & Exploratory

MPAC: A Technical Guide to Multi-omic Pathway Analysis of Cells

For researchers, scientists, and drug development professionals, understanding the intricate molecular circuitry of cells is paramount. Multi-omic Pathway Analysis of Cells (MPAC) is a computational framework designed to integrate diverse high-throughput data types to infer the activity of biological pathways.[1][2][3][4][5][6] By leveraging prior knowledge of pathway interactions, MPAC provides a more holistic view of cellular function, enabling the identification of novel patient subgroups and potential therapeutic targets.[1][3][7] This guide covers the core technical aspects of MPAC, its computational protocols, and its applications in bioinformatics and drug development.

Core Concepts of MPAC

MPAC is built upon the principle that integrating multiple layers of molecular data (such as genomics, transcriptomics, and proteomics) provides a more accurate and robust picture of cellular state than any single data type alone.[1][3][5][6] The framework utilizes a factor graph model to represent biological pathways and infer the activity levels of proteins and other pathway components.[1][2][5][6] A key innovation of MPAC is its use of permutation testing to filter out spurious activity predictions, thereby increasing the reliability of its inferences.[1][2][5][6]

The primary goal of MPAC is to move beyond simple gene expression analysis to a more functional interpretation of multi-omic data. By focusing on pathway-level activities, MPAC can identify patient groups with distinct molecular profiles that may not be apparent from analyzing individual omic data types in isolation.[1][3][7] This capability is particularly valuable in complex diseases like cancer, where patient heterogeneity is a major challenge for diagnosis and treatment.

The MPAC Workflow

The MPAC workflow is a multi-step process that begins with the input of multi-omic data and culminates in the identification of key proteins and patient subgroups with potential clinical relevance.[1][4] The entire process is packaged as an R package available on Bioconductor, facilitating its adoption by the research community.[1][2][7]

Detailed Experimental Protocols

The following sections outline the key computational methodologies employed in the MPAC workflow.

Data Input and Preprocessing

MPAC typically utilizes gene-level copy number alteration (CNA) and RNA sequencing (RNA-seq) data as its primary inputs.[8]

- CNA State Determination: For each gene in each patient sample, the CNA state is categorized as one of three possibilities: activated (positive CNA focal score), normal (zero CNA focal score), or repressed (negative CNA focal score).[8]
- RNA State Determination: The expression level of each gene in normal tissue samples is modeled using a Gaussian distribution. The RNA state for a tumor sample is then determined by comparing its expression level to this distribution:
  - Normal: Expression level is within two standard deviations of the mean.
  - Repressed: Expression level is more than two standard deviations below the mean.
  - Activated: Expression level is more than two standard deviations above the mean.
  - This two-standard-deviation threshold corresponds to a two-sided p-value of less than 0.05.[8]
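The discretization rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not MPAC's R implementation: the function names `cna_state` and `rna_state`, the encoding of states as -1/0/1, and the example expression values are all hypothetical.

```python
from statistics import mean, stdev

def cna_state(focal_score):
    """Map a CNA focal score to a ternary state by its sign."""
    if focal_score > 0:
        return 1    # activated
    if focal_score < 0:
        return -1   # repressed
    return 0        # normal

def rna_state(tumor_expr, normal_exprs, n_sd=2.0):
    """Compare a tumor value with a Gaussian fitted to normal-tissue values."""
    mu = mean(normal_exprs)
    sd = stdev(normal_exprs)  # sample standard deviation
    if tumor_expr > mu + n_sd * sd:
        return 1    # activated
    if tumor_expr < mu - n_sd * sd:
        return -1   # repressed
    return 0        # normal

# Hypothetical log-scale expression of one gene in five normal samples
normal = [8.0, 9.0, 10.0, 11.0, 12.0]
cna_states = [cna_state(s) for s in (0.7, 0.0, -1.2)]
rna_states = [rna_state(x, normal) for x in (14.0, 10.5, 5.0)]
print(cna_states, rna_states)
```

With these made-up inputs the normal-tissue mean is 10 and the sample standard deviation is about 1.58, so only values above roughly 13.16 or below roughly 6.84 leave the normal state.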

Pathway Activity Inference

At the core of MPAC is the inference of pathway activity levels using a factor graph model, which is implemented through a modified version of the PARADIGM (Pathway Recognition Algorithm using Data Integration on Genomic Models) software.[1][6][8]

- Pathway Network: MPAC employs a comprehensive biological pathway network, such as the one compiled by The Cancer Genome Atlas (TCGA), which integrates information from databases like NCI-PID, Reactome, and KEGG.[1][8] This network includes both transcriptional and post-translational interactions.
- Factor Graph Model: The factor graph represents the relationships between different entities (e.g., proteins, complexes) within a pathway. This model is used to infer the activity levels of all entities in the pathway, not just those for which there is direct experimental data.[1][8]
- PARADIGM Execution: MPAC runs the PARADIGM algorithm with a stringent expectation-maximization convergence criterion (change of likelihood < $10^{-9}$) to calculate the Inferred Pathway Levels (IPLs).[8]

Permutation Testing

To distinguish true biological signals from random noise, MPAC performs permutation testing.

- Randomization: Within each patient sample, the CNA and RNA states are randomly permuted among the genes.[8]
- Background Distribution: The PARADIGM algorithm is run on multiple sets of this permuted data (e.g., 100 sets) to generate a background distribution of IPLs that would be expected by chance.[8]
- Filtering: The real IPLs are then compared to this background distribution to filter out any that are not statistically significant, thus removing spurious activity predictions.[1][8]
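A rough Python sketch of the randomization and background steps follows. It makes several assumptions that do not come from the source: `toy_ipl` is a hypothetical stand-in for a PARADIGM run (it just averages states over a pathway's genes), each gene's CNA/RNA pair is kept together when shuffling, and the gene names and states are invented.

```python
import random

def permute_states(sample, rng):
    """Shuffle (CNA, RNA) state pairs among the genes of one sample."""
    genes = list(sample)
    pairs = [sample[g] for g in genes]
    rng.shuffle(pairs)  # in-place shuffle; the gene order stays fixed
    return dict(zip(genes, pairs))

def toy_ipl(sample, pathway):
    """Hypothetical stand-in for PARADIGM: mean state over the pathway's genes."""
    values = [state for gene in pathway for state in sample[gene]]
    return sum(values) / len(values)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
# One patient sample: gene -> (CNA state, RNA state), with -1/0/1 encoding
sample = {
    "G1": (1, 1), "G2": (1, 1), "G3": (0, 0), "G4": (0, 0),
    "G5": (0, 0), "G6": (-1, -1), "G7": (0, 0), "G8": (0, 0),
}
pathway = ["G1", "G2"]

real = toy_ipl(sample, pathway)
background = [toy_ipl(permute_states(sample, rng), pathway) for _ in range(100)]

# Fraction of permuted values at least as extreme as the real one
frac_as_extreme = sum(abs(b) >= abs(real) for b in background) / len(background)
print(real, frac_as_extreme)
```

Because the shuffle only reassigns existing state pairs, every permuted sample contains the same states as the original, just attached to different genes. That is what makes the background a fair reference for that patient.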

Application in Cancer Research: A Case Study in HNSCC

A key demonstration of MPAC's utility is its application to Head and Neck Squamous Cell Carcinoma (HNSCC) data from The Cancer Genome Atlas (TCGA).[1][7] In this study, MPAC identified a subgroup of HNSCC patients with alterations in immune response pathways that were not detectable when analyzing CNA or RNA-seq data alone.[1][3][7]

Quantitative Findings

The following table summarizes the key results from the HNSCC analysis.

| Metric | CNA Data Alone | RNA-seq Data Alone | MPAC (Integrated) |
|---|---|---|---|
| Identified Patient Subgroups | HPV+, HPV- | HPV+, HPV- | HPV+, HPV- (Immune Hot), HPV- (Immune Cold) |
| Prognostic Significance | Moderate | Moderate | High |
| Key Pathway Alterations | Cell Cycle, DNA Repair | Cell Cycle, EMT | Immune Response, Cytokine Signaling |

This analysis highlights MPAC's ability to uncover clinically relevant patient subgroups that are missed by single-omic approaches. The identification of an "immune hot" subgroup has significant implications for immunotherapy, suggesting that these patients may be more likely to respond to immune checkpoint inhibitors.

Example Signaling Pathway: Immune Response

The diagram below illustrates a simplified representation of a generic immune response signaling pathway that could be analyzed by MPAC. The nodes represent key proteins and complexes, and the edges represent activating or inhibiting interactions. MPAC would infer the activity level of each of these nodes based on the input multi-omic data.

Advantages and Limitations

MPAC offers several advantages over other pathway analysis methods, including its predecessor, PARADIGM.[1]

| Feature | PARADIGM | MPAC |
|---|---|---|
| Gene State Discretization | Arbitrary division into three equal-sized states | Statistical test against a normal distribution |
| Permutation Testing | Not implemented as part of the core software | Integrated for filtering spurious results |
| Downstream Analysis | Limited | Functions for patient grouping and key protein identification |
| Software Availability | Standalone software | R package on Bioconductor with an interactive Shiny app |

However, MPAC also has limitations. Its performance depends on the quality and completeness of the input pathway knowledge base. Furthermore, while it integrates CNA and RNA-seq data, the incorporation of other data types like proteomics and metabolomics is a potential area for future development.

Conclusion

MPAC provides a robust framework for the integrative analysis of multi-omic data. For researchers in drug development and clinical science, MPAC offers a tool to dissect the complexity of disease, identify novel biomarkers, and stratify patients for personalized therapies. Its ability to uncover patterns that single-omic analyses miss makes it useful for translating genomic data into clinical insights.

References

- 1. academic.oup.com [academic.oup.com]

- 2. biorxiv.org [biorxiv.org]

- 3. academic.oup.com [academic.oup.com]

- 4. academic.oup.com [academic.oup.com]

- 5. MPAC: a computational framework for inferring pathway activities from multi-omic data | bioRxiv [biorxiv.org]

- 6. biorxiv.org [biorxiv.org]

- 7. biorxiv.org [biorxiv.org]

- 8. themoonlight.io [themoonlight.io]

Multi-omic Pathway Analysis of Cells: An In-depth Technical Guide

Authored for Researchers, Scientists, and Drug Development Professionals

Introduction

In the landscape of modern biological research and drug discovery, a paradigm shift towards a more holistic understanding of cellular processes is underway. Single-omic approaches, while foundational, provide only a snapshot of the intricate molecular symphony within a cell.[1] Multi-omic pathway analysis has emerged as a powerful strategy, integrating data from various molecular layers—genomics, transcriptomics, proteomics, and metabolomics—to construct a comprehensive picture of cellular function in both healthy and diseased states.[2] This integrated approach offers unprecedented insights into the dynamic interplay of biological molecules, paving the way for the identification of novel biomarkers, the elucidation of complex disease mechanisms, and the development of targeted therapeutics.[3][4]

This in-depth technical guide provides a comprehensive overview of the core principles, experimental methodologies, and data analysis workflows central to multi-omic pathway analysis. It is designed to equip researchers, scientists, and drug development professionals with the knowledge to design, execute, and interpret multi-omic studies effectively.

The Core Tenets of Multi-Omic Integration

The central dogma of molecular biology—DNA makes RNA, and RNA makes protein—provides the foundational framework for multi-omics. By examining these different molecular strata in concert, we can move beyond correlational observations to a more mechanistic understanding of biological systems.

- Genomics provides the blueprint, revealing the genetic predispositions and alterations that can drive disease.
- Transcriptomics offers a dynamic view of gene expression, indicating which parts of the blueprint are being actively read and transcribed into RNA.
- Proteomics captures the functional machinery of the cell, quantifying the abundance and post-translational modifications of proteins that carry out most cellular functions.
- Metabolomics provides a real-time snapshot of the cell's physiological state by measuring the levels of small molecule metabolites, the end products of cellular processes.

By integrating these "omes," researchers can trace the flow of biological information from genetic mutations to altered protein function and downstream metabolic dysregulation, providing a more complete picture of a biological system.[2]

Experimental Protocols: A Multi-omic Workflow

A successful multi-omic study hinges on meticulous experimental design and the application of robust, high-throughput technologies. The following sections detail the key experimental protocols for generating high-quality data for each omic layer.

Sample Preparation for Multi-Omics Analysis

Consistent and standardized sample preparation is critical to minimize variability and ensure data quality across different omic platforms.

1. Sample Collection and Storage:
   - Rapidly preserve biological samples (cells, tissues, biofluids) to halt biological activity and prevent degradation of molecules. Snap-freezing in liquid nitrogen and storage at -80°C is a common practice.
   - For tissue samples, consider methods that preserve both morphology and molecular integrity, such as optimal cutting temperature (OCT) compound embedding for frozen sections.
2. Nucleic Acid and Protein Extraction:
   - Employ extraction protocols that yield high-quality DNA, RNA, and protein from the same sample. Several commercial kits and in-house protocols are available for simultaneous extraction.
   - A typical workflow involves initial homogenization in a lysis buffer that inactivates nucleases and proteases, followed by sequential separation of DNA, RNA, and protein fractions.
   - Quantify the extracted molecules and assess their purity and integrity using methods like spectrophotometry (e.g., NanoDrop) and capillary electrophoresis (e.g., Agilent Bioanalyzer).[5]

Genomics: DNA Sequencing

Next-Generation Sequencing (NGS) for Whole-Genome or Whole-Exome Sequencing:

1. Library Preparation:
   - Fragment the genomic DNA to a desired size range (e.g., 200-500 base pairs).[6]
   - Perform end-repair to create blunt-ended fragments and add an 'A' tail to the 3' ends.[7]
   - Ligate sequencing adapters to the DNA fragments. These adapters contain sequences for priming the sequencing reaction and for indexing (barcoding) multiple samples to be sequenced in the same run.[7]
   - Amplify the adapter-ligated library using PCR to generate enough material for sequencing.[6]
2. Sequencing:
   - Load the prepared library onto a flow cell of an NGS instrument (e.g., Illumina NovaSeq).
   - The DNA fragments are clonally amplified on the flow cell to form clusters.
   - Sequence the DNA by synthesis, where fluorescently labeled nucleotides are incorporated one by one, and the signal is captured by a detector.
3. Data Analysis:
   - Perform quality control on the raw sequencing reads.
   - Align the reads to a reference genome.
   - Identify genetic variants such as single nucleotide polymorphisms (SNPs), insertions, and deletions.

Transcriptomics: RNA-Sequencing (RNA-Seq)

A Step-by-Step Workflow for Gene Expression Profiling:

1. RNA Isolation and Quality Control:
   - Isolate total RNA from the biological sample.
   - Assess RNA integrity using an RNA Integrity Number (RIN) score; a RIN of >7 is generally recommended.[8]
2. Library Preparation:
   - Deplete ribosomal RNA (rRNA), which constitutes the majority of total RNA, or enrich for messenger RNA (mRNA) using oligo(dT) beads that bind to the poly(A) tails of mRNAs.
   - Fragment the RNA and synthesize first-strand complementary DNA (cDNA) using reverse transcriptase and random primers.
   - Synthesize the second strand of cDNA.
   - Perform end-repair, A-tailing, and adapter ligation as described for DNA sequencing.
   - Amplify the cDNA library via PCR.[8]
3. Sequencing and Data Analysis:
   - Sequence the cDNA library using an NGS platform.
   - Align the reads to a reference genome or transcriptome.[9][11]
   - Quantify gene or transcript expression levels by counting the number of reads that map to each gene or transcript.[10][11]
   - Perform differential expression analysis to identify genes that are up- or downregulated between different conditions.[9]
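To make the quantification and comparison steps concrete, the sketch below normalizes raw read counts to counts per million (CPM) and computes a log2 fold change between two conditions. The gene names, counts, and the pseudocount of 1 are illustrative assumptions. A real differential expression analysis would use a statistical model with replicates (for example DESeq2 or edgeR) rather than a bare ratio.

```python
import math

def counts_per_million(counts):
    """Scale raw read counts so that each library sums to one million."""
    total = sum(counts.values())
    return {gene: c * 1e6 / total for gene, c in counts.items()}

def log2_fold_change(treated, control, pseudocount=1.0):
    """log2 ratio of two normalized values; the pseudocount avoids log(0)."""
    return math.log2((treated + pseudocount) / (control + pseudocount))

# Hypothetical read counts per gene for one control and one treated library
control_counts = {"GENE_A": 100, "GENE_B": 300, "GENE_C": 600}
treated_counts = {"GENE_A": 400, "GENE_B": 300, "GENE_C": 1300}

control_cpm = counts_per_million(control_counts)
treated_cpm = counts_per_million(treated_counts)

lfc = {g: log2_fold_change(treated_cpm[g], control_cpm[g]) for g in control_cpm}
for gene, value in lfc.items():
    print(gene, round(value, 2))
```

Note that GENE_B has identical raw counts in both libraries but comes out as downregulated after normalization, because the treated library is twice as deep. This is why counts are normalized before comparison.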

Proteomics and Metabolomics: Mass Spectrometry

Liquid Chromatography-Mass Spectrometry (LC-MS) for Global Profiling:

1. Protein Extraction and Digestion (for Proteomics):
   - Extract proteins from the sample using appropriate lysis buffers.
   - Quantify the protein concentration.
   - Denature the proteins and reduce and alkylate the cysteine residues.
   - Digest the proteins into smaller peptides using a protease, most commonly trypsin.
2. Metabolite Extraction (for Metabolomics):
   - Quench metabolic activity rapidly, often using cold methanol or other organic solvents.[12]
   - Extract small molecules using a solvent system that captures a broad range of metabolites (e.g., a mixture of methanol, acetonitrile, and water).
3. Liquid Chromatography (LC) Separation:
   - Inject the peptide or metabolite extract onto an LC column.
   - Separate the molecules based on their physicochemical properties (e.g., hydrophobicity) using a gradient of organic solvent.
4. Mass Spectrometry (MS) Analysis:
   - The eluting molecules are ionized (e.g., by electrospray ionization) and introduced into the mass spectrometer.[13][14]
   - The mass spectrometer measures the mass-to-charge ratio (m/z) of the ions.[14]
   - For proteomics, tandem mass spectrometry (MS/MS) is typically used, where precursor ions are selected and fragmented, and the m/z values of the fragment ions are measured to determine the peptide sequence.[13]
5. Data Analysis:
   - Proteomics: Identify peptides and proteins by searching the MS/MS spectra against a protein sequence database. Quantify protein abundance based on the intensity of the precursor ions or the number of spectral counts.[15][16]
   - Metabolomics: Detect and quantify metabolic features (peaks) in the MS data. Identify metabolites by comparing their m/z and retention times to a reference library or by fragmentation patterns.[12][17][18]
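The m/z-based identification step can be illustrated with a small matching routine. This sketch assumes positive-mode [M+H]+ ions, a 10 ppm tolerance, and a two-entry hypothetical library with approximate monoisotopic masses. It ignores retention time and fragmentation, which a real workflow would also use.

```python
PROTON_MASS = 1.007276  # Da, approximate

def mz_from_mass(neutral_mass, charge=1):
    """m/z of a protonated ion [M + zH]z+."""
    return (neutral_mass + charge * PROTON_MASS) / charge

def ppm_error(observed_mz, theoretical_mz):
    """Relative mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6

def match_features(observed_mzs, library, tol_ppm=10.0):
    """Return (observed m/z, metabolite name) pairs within the ppm tolerance."""
    hits = []
    for mz in observed_mzs:
        for name, mass in library.items():
            if abs(ppm_error(mz, mz_from_mass(mass))) <= tol_ppm:
                hits.append((mz, name))
    return hits

# Hypothetical reference library: name -> approximate monoisotopic neutral mass (Da)
library = {"glucose": 180.06339, "lactic acid": 90.03169}

# Two made-up detected features; the second is about 11 ppm from lactic acid
hits = match_features([181.0712, 91.0400], library)
print(hits)
```

Tightening or loosening `tol_ppm` trades false matches against missed ones. The right value depends on the mass accuracy of the instrument.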

Data Presentation: Structuring Multi-omic Data for Comparison

Clear and concise presentation of quantitative multi-omic data is essential for interpretation and communication of findings. Tables are an effective way to summarize and compare data across different omic layers and experimental conditions.

Table 1: Integrated Multi-omic Data Summary for a Single Gene/Protein

| Gene/Protein | Genomic Alteration | mRNA Fold Change (log2) | Protein Fold Change (log2) | Key Associated Metabolite Fold Change (log2) |
|---|---|---|---|---|
| Gene A | Amplification | 2.5 | 1.8 | Metabolite X: 3.1 |
| Gene B | SNP (rs12345) | -1.5 | -1.2 | Metabolite Y: -2.0 |
| Gene C | No change | 0.2 | 0.1 | Metabolite Z: 0.3 |

Table 2: Pathway-centric Multi-omic Data Integration

| Pathway | Key Genes/Proteins | Genomics (Mutation Frequency) | Transcriptomics (Mean log2FC) | Proteomics (Mean log2FC) | Metabolomics (Key Metabolite Change) |
|---|---|---|---|---|---|
| MAPK Signaling | BRAF, MEK1, ERK2 | BRAF V600E: 30% | 1.5 | 1.2 | Increased Phospho-ERK |
| PI3K-Akt Signaling | PIK3CA, AKT1, PTEN | PIK3CA H1047R: 25% | 1.8 | 1.5 | Increased PIP3 |
| Glycolysis | HK2, PFKFB3 | - | 2.1 | 1.7 | Increased Lactate |

Visualizations: Signaling Pathways and Workflows

Visualizing complex biological pathways and experimental workflows is crucial for understanding the relationships between different molecular components and the overall logic of the analysis. The following diagrams are generated using the Graphviz DOT language.

Signaling Pathway Diagrams

Caption: Mitogen-Activated Protein Kinase (MAPK) Signaling Pathway.

Caption: PI3K-Akt Signaling Pathway.

Experimental and Analytical Workflow Diagrams

Caption: A Generalized Multi-omic Experimental and Analytical Workflow.

Conclusion and Future Directions

Multi-omic pathway analysis represents a powerful and increasingly accessible approach to unraveling the complexity of biological systems. By integrating data from genomics, transcriptomics, proteomics, and metabolomics, researchers can gain a more comprehensive and mechanistic understanding of cellular processes in health and disease. This in-depth guide has provided a foundational overview of the key experimental protocols, data presentation strategies, and visualization techniques that are central to this field.

The future of multi-omic research lies in the continued development of novel experimental technologies with higher sensitivity and throughput, as well as more sophisticated computational tools for data integration and interpretation.[19] The integration of single-cell multi-omics will provide unprecedented resolution into cellular heterogeneity. As these technologies mature, multi-omic pathway analysis will undoubtedly play an increasingly pivotal role in precision medicine, enabling the development of more effective and personalized therapies for a wide range of diseases.

References

- 1. Integrating Molecular Perspectives: Strategies for Comprehensive Multi-Omics Integrative Data Analysis and Machine Learning Applications in Transcriptomics, Proteomics, and Metabolomics - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Multi-omics Data Integration, Interpretation, and Its Application - PMC [pmc.ncbi.nlm.nih.gov]

- 3. lizard.bio [lizard.bio]

- 4. arxiv.org [arxiv.org]

- 5. Sequencing Sample Preparation: How to Get High-Quality DNA/RNA - CD Genomics [cd-genomics.com]

- 6. umassmed.edu [umassmed.edu]

- 7. mmjggl.caltech.edu [mmjggl.caltech.edu]

- 8. A Beginner’s Guide to Analysis of RNA Sequencing Data - PMC [pmc.ncbi.nlm.nih.gov]

- 9. blog.genewiz.com [blog.genewiz.com]

- 10. olvtools.com [olvtools.com]

- 11. Bioinformatics Workflow of RNA-Seq - CD Genomics [cd-genomics.com]

- 12. Mass-spectrometry based metabolomics: an overview of workflows, strategies, data analysis and applications - PMC [pmc.ncbi.nlm.nih.gov]

- 13. m.youtube.com [m.youtube.com]

- 14. bigomics.ch [bigomics.ch]

- 15. Hands-on tutorial: Bioinformatics for Proteomics – CompOmics [compomics.com]

- 16. GitHub - PNNL-Comp-Mass-Spec/proteomics-data-analysis-tutorial: A comprehensive tutorial for proteomics data analysis in R that utilizes packages developed by researchers at PNNL and from Bioconductor. [github.com]

- 17. researchgate.net [researchgate.net]

- 18. Metabolomics Workflow: Key Steps from Sample to Insights [arome-science.com]

- 19. pythiabio.com [pythiabio.com]

MPAC: A Technical Guide to Inferring Pathway Activity from Multi-Omic Data

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide provides a comprehensive overview of the Multi-omic Pathway Analysis of Cells (MPAC) computational framework. MPAC is a tool for inferring pathway activities from diverse omics datasets, offering insights for research and therapeutic development. This document details the core methodology, experimental protocols for data generation, and quantitative findings from a key case study.

Core Principles of MPAC

Multi-omic Pathway Analysis of Cells (MPAC) is a computational framework designed to interpret multi-omic data by leveraging prior knowledge from biological pathways.[1][2] It integrates data from different omic levels, such as DNA copy number alterations (CNA) and RNA sequencing (RNA-seq), to infer the activity levels of proteins and other pathway entities.[1][2] A key innovation of MPAC is its use of a factor graph to model the complex relationships within biological pathways, allowing for a consensus inference of pathway activity.[1][2] To ensure the robustness of its predictions, MPAC employs permutation testing to eliminate spurious activity predictions.[1][2] The ultimate goal of MPAC is to group biological samples based on their pathway activity profiles, which can lead to the identification of proteins with potential clinical relevance, for instance, in relation to patient prognosis.[1][2]

The MPAC workflow is a multi-step process that begins with the discretization of input omics data and culminates in the identification of key proteins and patient subgroups with distinct pathway alteration profiles. The framework is an advancement over its predecessor, the PAthway Recognition Algorithm using Data Integration on Genomic Models (PARADIGM).

The MPAC Workflow

The MPAC workflow can be summarized in the following key steps:

1. Data Discretization: Input omics data, such as CNA and RNA-seq, are converted into ternary states: activated, normal, or repressed.
2. Inferred Pathway Levels (IPLs) Calculation: The core of MPAC involves using a factor graph model to infer the activity levels of all entities within a comprehensive pathway network. This is performed on the actual experimental data as well as on permuted versions of the data.
3. Permutation Testing and Filtering: The IPLs generated from the permuted data are used to establish a null distribution. This allows for the filtering of IPLs from the real data that are not statistically significant, thereby reducing the likelihood of false positives.
4. Patient Grouping: Patients are clustered based on their filtered pathway activity profiles. This can reveal subgroups of patients with distinct molecular signatures that may not be apparent from the analysis of a single omic data type alone.
5. Identification of Key Proteins and Pathways: Within patient subgroups of interest, MPAC identifies key proteins and pathways that are significantly and consistently altered, providing insights into the underlying biology and potential therapeutic targets.
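As a rough illustration of the patient grouping step, the sketch below groups patients whose pathway activity profiles lie close together. It is not the clustering method MPAC itself uses. Single-linkage grouping with a fixed Euclidean distance cutoff is an assumption chosen for brevity, and the patient IDs and profile values are invented.

```python
from math import dist

def group_patients(profiles, max_dist):
    """Single-linkage grouping: patients within max_dist of each other
    (directly or through a chain of neighbors) share a group."""
    names = sorted(profiles)
    parent = {n: n for n in names}

    def find(n):
        # Follow parent links to the group representative, compressing the path
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if dist(profiles[a], profiles[b]) <= max_dist:
                parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for n in names:
        groups.setdefault(find(n), []).append(n)
    return sorted(groups.values())

# Hypothetical filtered pathway activity profiles (one value per pathway)
profiles = {
    "P1": (1.2, 0.9, 0.0),
    "P2": (1.0, 1.1, 0.1),
    "P3": (-0.8, 0.0, 1.3),
    "P4": (-1.0, 0.2, 1.1),
}
groups = group_patients(profiles, max_dist=0.5)
print(groups)
```

In practice the choice of distance, linkage, and number of groups matters a great deal, so a cutoff like the 0.5 used here would need to be justified against the data.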

Diagram: MPAC Workflow

Caption: A high-level overview of the MPAC computational workflow.

The Factor Graph Model

At the heart of MPAC is a factor graph, a type of probabilistic graphical model that represents the factorization of a function. In the context of MPAC, the factor graph models a biological pathway as a network of interacting entities. The graph is bipartite, consisting of variable nodes and factor nodes.

- Variable Nodes: These represent the biological entities within a pathway, such as genes, proteins, and protein complexes, as well as their states (e.g., expression level, activity level).
- Factor Nodes: These represent the probabilistic relationships between the variable nodes, based on known biological interactions (e.g., activation, inhibition, complex formation). Each factor node is associated with a function that defines the likelihood of the states of the connected variables, given the nature of their interaction.

By integrating the multi-omic data as evidence at the corresponding variable nodes, MPAC uses a message-passing algorithm (specifically, the sum-product algorithm) to infer the posterior probabilities of the states of all variable nodes in the graph. This provides a consensus measure of the activity of each pathway entity.
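To show how sum-product message passing propagates evidence, here is a minimal sketch on a three-node linear cascade (A activates B, B activates C) with ternary states. The factor values, the `eps` noise parameter, and the evidence numbers are illustrative assumptions rather than PARADIGM's or MPAC's actual parameters. The sketch reports posterior marginals, not IPLs.

```python
STATES = (-1, 0, 1)  # repressed, normal, activated

def activation_factor(parent, child, eps=0.1):
    """Hypothetical factor: the child most likely copies its parent's state."""
    return 1 - eps if parent == child else eps / 2

def normalize(msg):
    total = sum(msg.values())
    return {s: v / total for s, v in msg.items()}

def chain_marginals(evidence):
    """Sum-product on a chain; evidence[i][s] is the local likelihood
    of state s at node i (all 1.0 means the node is unobserved)."""
    n = len(evidence)
    uniform = {s: 1.0 for s in STATES}
    # fwd[i]: message arriving at node i from its upstream neighbor
    fwd = [dict(uniform) for _ in range(n)]
    for i in range(1, n):
        fwd[i] = normalize({
            c: sum(fwd[i - 1][p] * evidence[i - 1][p] * activation_factor(p, c)
                   for p in STATES)
            for c in STATES})
    # bwd[i]: message arriving at node i from its downstream neighbor
    bwd = [dict(uniform) for _ in range(n)]
    for i in range(n - 2, -1, -1):
        bwd[i] = normalize({
            p: sum(bwd[i + 1][c] * evidence[i + 1][c] * activation_factor(p, c)
                   for c in STATES)
            for p in STATES})
    # Posterior marginal = local evidence times both incoming messages
    return [normalize({s: fwd[i][s] * evidence[i][s] * bwd[i][s] for s in STATES})
            for i in range(n)]

# A is observed as probably activated; B and C carry no direct data
evidence = [
    {-1: 0.05, 0: 0.05, 1: 0.9},
    {-1: 1.0, 0: 1.0, 1: 1.0},
    {-1: 1.0, 0: 1.0, 1: 1.0},
]
marginals = chain_marginals(evidence)
for name, m in zip("ABC", marginals):
    print(name, {s: round(p, 3) for s, p in m.items()})
```

The posterior probability of the activated state decays along the cascade (0.9 at A, about 0.815 at B, about 0.743 at C), which illustrates how entities without direct measurements still receive an inferred state from their neighbors. On a chain or tree these marginals are exact. Real pathway networks contain cycles, where message passing becomes approximate.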

Diagram: Simplified Factor Graph for a Signaling Cascade

Caption: A simplified factor graph representing a linear signaling pathway.

Permutation Testing for Statistical Significance

To distinguish true biological signals from random noise, MPAC employs a permutation testing strategy. The core idea is to generate a null distribution of inferred pathway levels (IPLs) by running the MPAC inference on multiple permuted datasets in which, within each sample, the CNA and RNA states have been randomly shuffled among the genes.

The process is as follows:

1. Within each sample, the CNA and RNA states of the input omic data are randomly permuted among the genes multiple times (e.g., 100 times).
2. For each permuted dataset, the entire MPAC inference workflow is executed to calculate a set of null IPLs.
3. The collection of null IPLs from all permutations forms an empirical null distribution for each pathway entity.
4. The IPL calculated from the original, un-permuted data is then compared to this null distribution to determine its statistical significance (p-value).
5. Only IPLs that pass a predefined significance threshold (e.g., p < 0.05) are retained for downstream analysis, such as patient clustering.

This permutation-based approach is non-parametric and does not rely on assumptions about the underlying distribution of the data, making it a robust method for assessing the significance of pathway activity.
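The comparison against the null distribution can be sketched as an empirical p-value followed by a threshold filter. The two-sided test on absolute IPL values, the +1 correction, the entity names, and the synthetic null values below are assumptions for illustration. The source does not specify MPAC's exact filtering rule at this level of detail.

```python
def empirical_p_value(real_ipl, null_ipls):
    """Two-sided empirical p-value with the common +1 correction, so the
    result is never exactly zero for a finite number of permutations."""
    extreme = sum(1 for v in null_ipls if abs(v) >= abs(real_ipl))
    return (extreme + 1) / (len(null_ipls) + 1)

def filter_ipls(real, null, alpha=0.05):
    """Keep significant IPLs; mark the others as missing (None)."""
    return {
        entity: (ipl if empirical_p_value(ipl, null[entity]) < alpha else None)
        for entity, ipl in real.items()
    }

# Synthetic null IPLs from 100 hypothetical permutations: -0.50, -0.49, ..., 0.49
null_values = [(i - 50) / 100 for i in range(100)]
null = {"entity_A": null_values, "entity_B": null_values}

# Hypothetical IPLs from the real data
real = {"entity_A": 0.9, "entity_B": 0.105}

filtered = filter_ipls(real, null)
print(filtered)
```

With 100 permutations the smallest attainable p-value is 1/101, about 0.0099, so the number of permutations sets a floor on how stringent the threshold can be.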

Experimental Protocols: The TCGA-HNSCC Case Study

MPAC's utility has been demonstrated in a case study of Head and Neck Squamous Cell Carcinoma (HNSCC) using data from The Cancer Genome Atlas (TCGA). The primary input data types were DNA copy number alteration (CNA) and RNA-sequencing (RNA-seq) data.

Data Source and Cohort

The study utilized publicly available data from the TCGA-HNSCC cohort. This dataset includes multi-omic profiles and associated clinical information for hundreds of HNSCC patients.

DNA Copy Number Alteration (CNA) Data Generation

- Platform: Affymetrix Genome-Wide Human SNP Array 6.0 was predominantly used for CNA analysis in the TCGA project.
- Sample Preparation: DNA was extracted from tumor and matched normal tissues.
- Assay: The SNP 6.0 array contains probes for approximately 900,000 single nucleotide polymorphisms (SNPs) and over 900,000 probes for the detection of copy number variation.
- Data Processing: Raw data was processed to generate segmented copy number data, which identifies contiguous regions of the genome with altered copy numbers. Further analysis was performed to call amplifications and deletions for each gene.

RNA-Sequencing (RNA-seq) Data Generation

- Platform: The Illumina HiSeq platform was the primary tool for RNA-seq in the TCGA project.
- Sample Preparation: RNA was extracted from tumor and matched normal tissues. This was followed by poly(A) selection to enrich for mRNA, cDNA synthesis, and library construction.
- Sequencing: The prepared libraries were sequenced to generate millions of short reads.
- Data Processing: The raw sequencing reads were aligned to the human reference genome. Gene expression levels were then quantified, typically as RNA-Seq by Expectation-Maximization (RSEM) values, which were then normalized.

Quantitative Data Summary: HNSCC Case Study

The application of this compound to the TCGA-HNSCC dataset yielded several key quantitative findings, which are summarized in the tables below.

Table 1: Patient Subgroup Identification in HPV+ HNSCC

| Patient Group | Number of Patients | Key Pathway Alterations |
|---|---|---|
| Group I | 11 | Immune Response |
| Group II | 15 | Cell Cycle |
| Group III | 10 | Mixed/No Clear Consensus |
| Group IV | 8 | Cell Cycle |
| Group V | 12 | Morphogenesis |

Table 2: Survival Analysis of Key Proteins in HPV+ Immune Response Group

| Protein | Hazard Ratio (HR) | p-value | Association with Better Survival |
|---|---|---|---|
| CD2 | 0.45 | 0.02 | Yes |
| CD247 | 0.51 | 0.04 | Yes |
| CD3D | 0.48 | 0.03 | Yes |
| CD3E | 0.50 | 0.04 | Yes |
| CD3G | 0.49 | 0.03 | Yes |
| CD8A | 0.55 | 0.06 | Yes (trend) |
| GZMA | 0.52 | 0.05 | Yes |

Visualization of Key Signaling Pathways

MPAC analysis of the HNSCC data identified a patient subgroup with significant alterations in immune response pathways. Below are diagrams of two such key pathways.

Diagram: T-Cell Receptor Signaling Pathway

Caption: A simplified representation of the T-Cell Receptor (TCR) signaling pathway.

Diagram: Natural Killer Cell Mediated Cytotoxicity Pathway

Caption: Overview of the Natural Killer (NK) cell mediated cytotoxicity pathway.

Conclusion

The Multi-omic Pathway Analysis of Cells (MPAC) framework integrates multi-omic data through a factor graph model and uses permutation testing to filter out spurious predictions. Together, these steps let MPAC uncover biologically and clinically relevant patient subgroups and identify key molecular drivers of disease. Its application to complex diseases like cancer holds promise for advancing personalized medicine and developing novel therapeutic strategies. The availability of MPAC as an R package facilitates its adoption and application by the broader research community.

References

MPAC: A Technical Guide to Multi-omic Pathway Analysis

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides a comprehensive overview of the MPAC (Multi-omic Pathway Analysis of Cells) computational tool, a framework for integrating multi-omic data to infer pathway activities, particularly relevant in the context of cancer biology and drug development. MPAC leverages prior biological knowledge to identify dysregulated pathways and potential therapeutic targets from complex datasets.

Core Features of MPAC

MPAC is an R package that interprets multi-omic data by integrating it with biological pathways.[1] Its primary function is to infer the activity levels of proteins and other pathway components, identify patient subgroups with distinct pathway alteration profiles, and pinpoint key proteins with potential clinical significance.[2][3] MPAC builds upon the PARADIGM method with significant enhancements in defining gene states, filtering pathway entities, and providing comprehensive downstream analysis functionalities.

The key features of the MPAC framework include:

- Multi-omic Data Integration: MPAC is designed to integrate various types of omic data, with a primary focus on Copy Number Alteration (CNA) and RNA sequencing (RNA-seq) data.[2]
- Pathway-centric Analysis: It utilizes a comprehensive biological pathway network to provide a systems-level understanding of cellular processes.
- Factor Graph Model: At its core, MPAC uses a factor graph model, inherited from PARADIGM, to infer the activity levels of not just individual proteins but also protein complexes and gene families.[2]
- Permutation Testing: To ensure the statistical robustness of its findings, MPAC employs permutation testing to eliminate spurious activity predictions.[2]
- Downstream Analysis: The framework includes a suite of functions for downstream analysis, enabling the identification of patient subgroups with distinct pathway alterations and the prediction of key proteins associated with clinical outcomes, such as patient prognosis.[2]
- Open-Source and Accessible: MPAC is available as an R package on Bioconductor, facilitating its adoption and use by the research community.[1]

Data Presentation: Quantitative Analysis of MPAC Performance

The utility of MPAC has been demonstrated through its application to Head and Neck Squamous Cell Carcinoma (HNSCC) data from The Cancer Genome Atlas (TCGA). A key finding was the identification of a patient subgroup characterized by heightened immune responses, a discovery not possible through the analysis of individual omic data types alone.

| Performance Metric | Description | Value/Finding |
|---|---|---|
| Patient Subgroup Identification | MPAC identified distinct patient subgroups based on pathway alterations in HNSCC data. | Five patient groups were identified, with three showing distinct pathway features: cell cycle pathway alterations in two groups and immune response pathway alterations in another.[4] |
| Comparison with Single-omic Analysis | The identified immune response patient group was not detectable using CNA or RNA-seq data alone. | This highlights the superior analytical power of MPAC's multi-omic integration approach.[3] |
| Prognostic Significance | Seven proteins with activated pathway levels were identified in the immune response group. | The activation of these proteins was associated with better overall survival. |
| Validation | The findings from the initial HNSCC cohort were validated in a holdout set of TCGA HNSCC samples. | This demonstrates the robustness and reproducibility of MPAC's predictions. |
| PARADIGM Comparison | The predecessor to MPAC, PARADIGM, was unable to identify the immune response patient group. | This underscores the improvements implemented in the MPAC framework.[3] |

Experimental Protocols

The following sections detail the methodologies employed in a typical MPAC workflow, from data input to the identification of clinically relevant findings.

Data Input and Preprocessing

MPAC requires two primary types of input data: Copy Number Alteration (CNA) and RNA sequencing (RNA-seq) data for a cohort of patients.

- Genomic and Clinical Datasets: For the HNSCC analysis, genomic datasets were downloaded from the NCI Genomic Data Commons (GDC) Data Portal. Gene-level copy number scores were utilized for CNA, and log10(FPKM + 1) values were used for RNA-seq.[3]
- Defining Gene States:
  - CNA State: The state of each gene's copy number is categorized as activated (positive focal score), normal (zero), or repressed (negative focal score).
  - RNA State: The expression levels of a gene in normal tissue samples are modeled using a Gaussian distribution. For a tumor sample, the RNA state is defined as:
    - Normal: Expression level is within two standard deviations of the mean of the normal samples.
    - Repressed: Expression level is more than two standard deviations below that mean.
    - Activated: Expression level is more than two standard deviations above that mean.
    - A threshold of two standard deviations corresponds to a two-sided p-value < 0.05 under the Gaussian model.
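The gene-state rules above can be sketched in a few lines of Python. This is an illustration of the thresholds described in the text, not MPAC's own code: the function names `cna_state` and `rna_state` and the example values are hypothetical, and the states are encoded as 1 (activated), 0 (normal), and -1 (repressed).

```python
from statistics import mean, stdev


def cna_state(focal_score):
    """Return 1, 0, or -1 according to the sign of a focal copy-number score."""
    return (focal_score > 0) - (focal_score < 0)


def rna_state(tumor_value, normal_values, n_sd=2.0):
    """Compare a tumor expression value with a Gaussian fitted to normal samples."""
    mu = mean(normal_values)
    sd = stdev(normal_values)  # sample standard deviation of the normal tissues
    if tumor_value > mu + n_sd * sd:
        return 1   # activated
    if tumor_value < mu - n_sd * sd:
        return -1  # repressed
    return 0       # normal


# Hypothetical log10(FPKM + 1) values for one gene in five normal samples.
normal_expression = [1.0, 1.2, 0.8, 1.1, 0.9]

print([cna_state(s) for s in (0.7, 0.0, -1.3)])
print([rna_state(v, normal_expression) for v in (2.0, 1.05, 0.1)])
```

The two-standard-deviation cutoff is exposed as `n_sd` so that a stricter or looser definition of "normal" can be tried without changing the logic.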

Pathway Activity Inference using Factor Graphs

MPAC employs a factor graph model to infer the activity levels of pathway entities.

- Biological Pathway Network: A comprehensive biological pathway network from The Cancer Genome Atlas (TCGA) is utilized, which encompasses both transcriptional and post-translational interactions.
- PARADIGM's Factor Graph Model: The core inference is performed using the factor graph model from the PARADIGM tool. This probabilistic graphical model represents the relationships between different biological entities (genes, proteins, complexes) and their observed states (from CNA and RNA-seq data). It infers a consensus activity level for each entity.
- Enhanced Convergence Criteria: MPAC runs the PARADIGM algorithm with a more stringent expectation-maximization convergence criterion (change of likelihood < 10⁻⁹) and a higher maximum number of iterations (10⁴) to ensure robust results.
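The stopping rule in the last item can be illustrated with a generic iteration loop. The sketch below is not PARADIGM's implementation: `run_until_converged` and the toy `step` function are hypothetical, and only the two numbers (a tolerance of 1e-9 on the change in log-likelihood and a cap of 10,000 iterations) come from the text.

```python
import math


def run_until_converged(step, tol=1e-9, max_iter=10_000):
    """Call step() repeatedly until the log-likelihood changes by less than tol.

    Returns (last log-likelihood, iterations used, whether it converged).
    """
    previous = -math.inf
    for iteration in range(1, max_iter + 1):
        current = step()
        if abs(current - previous) < tol:
            return current, iteration, True
        previous = current
    return previous, max_iter, False


def make_toy_step():
    """Toy stand-in for one EM update: the log-likelihood approaches -1 geometrically."""
    state = {"k": 0}

    def step():
        state["k"] += 1
        return -1.0 - 0.5 ** state["k"]

    return step


strict = run_until_converged(make_toy_step(), tol=1e-9)
loose = run_until_converged(make_toy_step(), tol=1e-4)
print(strict, loose)
```

A tighter tolerance costs more iterations before the loop stops, which is why the stricter criterion is paired with a higher iteration cap.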

Permutation Testing

To distinguish true biological signals from random noise, MPAC performs permutation testing.

- Randomization: Within each patient sample, the CNA and RNA states are randomly permuted among all genes.
- Background Distribution: The PARADIGM factor graph model is then run on multiple (typically 100) sets of this permuted data.
- Significance Filtering: This process generates a background distribution of inferred pathway levels. The actual inferred pathway levels from the non-permuted data are then compared against this background to identify and filter out spurious activity predictions.
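The randomization step above can be sketched as follows. This is an illustrative sketch under stated assumptions: the function `permute_sample_states` and the four-gene sample are hypothetical, and the sketch assumes that each gene's CNA and RNA states are moved together as a pair (shuffling the two data types independently would be an alternative reading of the description).

```python
import random


def permute_sample_states(states, rng):
    """Reassign the (CNA, RNA) state pairs of one sample to randomly chosen genes."""
    genes = list(states)
    pairs = [states[gene] for gene in genes]
    rng.shuffle(pairs)  # in-place shuffle of the state pairs
    return dict(zip(genes, pairs))


# Hypothetical ternary states for one patient sample: gene -> (CNA state, RNA state).
sample = {"TP53": (-1, -1), "EGFR": (1, 1), "MYC": (0, 1), "CDKN2A": (-1, 0)}

rng = random.Random(0)  # fixed seed so the permutations are reproducible
permuted_sets = [permute_sample_states(sample, rng) for _ in range(100)]
print(permuted_sets[0])
```

Each permuted set keeps the same genes and the same collection of states, so only the assignment of states to genes, and therefore their position in the pathway network, is randomized.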

Downstream Analysis

Following the inference and filtering of pathway activities, MPAC provides functions for downstream analysis to extract clinically meaningful insights.

- Defining Patient Pathway Alterations: Patients are grouped based on their profiles of significantly altered pathways.
- Prediction of Patient Groups: Clustering algorithms are applied to the filtered pathway activity levels to identify novel patient subgroups that share similar pathway dysregulation patterns.
- Identification of Key Proteins: Within the identified patient groups, MPAC seeks to identify key proteins whose pathway activities are associated with clinical outcomes, such as overall survival.

Visualization

The following diagrams illustrate key conceptual and logical flows within the MPAC framework, rendered using the DOT language for Graphviz.

Caption: High-level workflow of the MPAC computational tool.

Caption: Conceptual representation of a factor graph in MPAC.

Caption: Example of a simplified signaling pathway analyzable by MPAC.

References

Understanding Inferred Pathway Levels in MPAC: An In-depth Technical Guide

Audience: Researchers, scientists, and drug development professionals.

This guide provides a comprehensive technical overview of the Multi-omic Pathway Analysis of Cells (MPAC) framework, a computational tool for inferring pathway activities from multi-omic data. We will delve into the core methodologies, data interpretation, and practical application of MPAC, enabling researchers to use it for deeper insights into cellular mechanisms and disease pathogenesis.

Core Concepts of MPAC

MPAC is an R package that integrates multi-omic data, primarily copy number alteration (CNA) and RNA-sequencing (RNA-seq) data, to infer the activity levels of proteins and other pathway entities.[1][2][3] It builds upon the PARADIGM (Pathway Recognition Algorithm using Data Integration on Genomic Models) framework, utilizing a factor graph model to represent biological pathways and compute "Inferred Pathway Levels" (IPLs).[1][2] A key innovation in MPAC is the use of permutation testing to filter out spurious pathway activity predictions, thereby enhancing the reliability of the results.[1][2][3]

The primary output of MPAC is a matrix of IPLs for various pathway entities across different samples. These IPLs are abstract scores representing the log-likelihood of a protein or other entity being in an activated or repressed state. Downstream analyses of these IPLs can reveal novel patient subgroups with distinct pathway profiles and identify key proteins with potential clinical relevance.[1][2][3]

The MPAC Workflow

The MPAC workflow can be summarized in the following key steps:

- Data Preprocessing: Raw CNA and RNA-seq data are processed to determine the ternary state of each gene (repressed, normal, or activated) for each sample.
- Inferring Pathway Levels (IPLs): The ternary states are used as input for the PARADIGM algorithm, which employs a factor graph model of a comprehensive pathway network to calculate the initial IPLs for all pathway entities.
- Permutation Testing and Filtering: To distinguish true biological signals from noise, MPAC permutes the input omic data multiple times to create a background distribution of IPLs that could be expected by chance. The initial IPLs are then filtered against this background, and only statistically significant IPLs are retained for further analysis.
- Downstream Analysis: The filtered IPLs are used for various downstream analyses, including:
  - Patient Clustering: Identifying patient subgroups with similar pathway activation patterns.
  - Over-representation Analysis: Determining which pathways are significantly enriched in different patient groups.
  - Survival Analysis: Assessing the correlation between pathway activity and clinical outcomes.
  - Identification of Key Proteins: Pinpointing specific proteins whose pathway activities are strongly associated with a particular phenotype or patient subgroup.
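The over-representation step listed above is commonly done with a one-sided hypergeometric (Fisher-type) test. The sketch below shows that standard test as an assumption about how such an analysis could be carried out; the source does not state which test MPAC uses, and the function name and the counts in the example are hypothetical.

```python
from math import comb


def overrepresentation_pvalue(overlap, n_selected, n_pathway, n_total):
    """P(X >= overlap) when n_selected entities are drawn from n_total,
    of which n_pathway belong to the pathway of interest."""
    upper = min(n_selected, n_pathway)
    denominator = comb(n_total, n_selected)
    numerator = sum(
        comb(n_pathway, i) * comb(n_total - n_pathway, n_selected - i)
        for i in range(overlap, upper + 1)
    )
    return numerator / denominator


# Hypothetical counts: 1,000 pathway entities in total, 50 in one pathway,
# 20 entities altered in a patient, 5 of which fall in that pathway.
p_value = overrepresentation_pvalue(overlap=5, n_selected=20, n_pathway=50, n_total=1000)
print(f"p = {p_value:.3g}")
```

When many pathways are tested per patient or per group, the resulting p-values would normally be adjusted for multiple testing before pathways are called enriched.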

Below is a graphical representation of the MPAC workflow:

Caption: The MPAC workflow, from input data to downstream analysis.

Data Presentation: Interpreting MPAC Output

The primary quantitative output of an MPAC analysis is a matrix of filtered Inferred Pathway Levels (IPLs). This matrix has pathway entities (proteins, complexes, etc.) as rows and samples as columns. The values in the matrix represent the inferred activity of each entity in each sample, with positive values indicating activation and negative values indicating repression.

Table 1: Example of Filtered Inferred Pathway Levels (IPLs)

| Pathway Entity | Sample 1 | Sample 2 | Sample 3 | Sample 4 |
|---|---|---|---|---|
| Protein A | 2.54 | 2.89 | -1.98 | -2.31 |
| Protein B | 1.98 | 2.15 | 0.05 | -0.12 |
| Complex C | -3.12 | -2.87 | 1.56 | 1.89 |
| Protein D | 0.21 | -0.11 | 2.45 | 2.67 |

This is a hypothetical table for illustrative purposes. Actual IPL values can vary in their range.

Further downstream analysis can lead to tables summarizing patient cluster characteristics or enriched pathways.

Table 2: Patient Subgroup Characteristics

| Patient Subgroup | Number of Patients | Key Enriched Pathway | Associated Phenotype |
|---|---|---|---|
| 1 | 50 | Immune Response | Favorable |
| 2 | 75 | Cell Cycle | Unfavorable |
| 3 | 30 | Metabolic Reprogramming | Moderate |

Experimental Protocols: A Step-by-Step Guide to Running MPAC

This section provides a detailed methodology for performing an MPAC analysis using the R package.

1. Installation

First, ensure you have R and Bioconductor installed. Then install the MPAC package from Bioconductor with BiocManager::install("MPAC").

2. Data Preparation

MPAC requires two main input data types:

- Copy Number Alteration (CNA) data: A matrix with genes as rows and samples as columns, where values represent the copy number status (e.g., -2 for homozygous deletion, -1 for hemizygous deletion, 0 for normal, 1 for gain, 2 for high-level amplification).
- RNA-sequencing (RNA-seq) data: A matrix of normalized gene expression values (e.g., RSEM or FPKM) with genes as rows and samples as columns.

3. Determining Ternary States

The ppRealInp() function is used to convert the CNA and RNA-seq data into ternary states (repressed, normal, or activated).

4. Running PARADIGM to Infer Pathway Levels

The runPrd() function executes the PARADIGM algorithm to calculate the initial IPLs. This step requires a pre-compiled PARADIGM executable and a pathway file.

5. Performing Permutation Testing

The ppPermInp() function generates permuted input data, and runPermPrd() then runs PARADIGM on each permuted dataset to create a background distribution of IPLs.

6. Filtering Inferred Pathway Levels

The fltByPerm() function filters the real IPLs based on the permuted IPLs.

7. Downstream Analysis: Patient Clustering

The clSamp() function can be used to cluster patients based on their filtered IPL profiles.

Visualization: Signaling Pathway Diagram

The following is a representative diagram of a simplified generic immune signaling pathway, which has been identified as significant in studies utilizing MPAC. This diagram is generated using the DOT language.

Caption: A simplified diagram of a generic immune signaling pathway.

The PARADIGM Factor Graph Model

At the core of MPAC's inference engine is the factor graph model inherited from PARADIGM. A factor graph is a bipartite graph that represents the factorization of a function. In the context of biological pathways, it models the probabilistic relationships between different biological entities.

- Variable Nodes: Represent the states of biological entities, such as the expression level of a gene or the activity state of a protein (e.g., activated, nominal, repressed).
- Factor Nodes: Represent the probabilistic relationships between the variable nodes, based on known biological interactions (e.g., transcription, phosphorylation, complex formation).

The model integrates different data types by having observed variables (e.g., from CNA and RNA-seq data) and hidden variables (the inferred activity states of proteins and other entities). The sum-product algorithm is then used to perform inference on this graph, calculating the marginal probabilities of the hidden variables, which are then transformed into the Inferred Pathway Levels.
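To make the sum-product idea concrete, the sketch below computes the marginal of one hidden variable on a deliberately tiny factor graph and checks it against brute-force enumeration. Everything in it is an assumption made for illustration: the graph has a single gene variable G linked to a single protein variable P, the factor values (an "agreement" factor with noise level `EPS`) are invented, and PARADIGM's real network and factors are far larger and are not reproduced here.

```python
from itertools import product

STATES = (-1, 0, 1)  # repressed, nominal, activated
EPS = 0.05           # hypothetical noise level of every factor


def agree(a, b, eps=EPS):
    """Factor value: high when two ternary states agree, low otherwise."""
    return 1.0 - eps if a == b else eps / 2


def protein_marginal(cna_obs, rna_obs):
    """Sum-product on the chain (CNA obs, RNA obs) - G - P."""
    # Message from G towards the G-P factor: product of its observation factors.
    msg_g = {g: agree(g, cna_obs) * agree(g, rna_obs) for g in STATES}
    # Message from the G-P factor to P: sum over the states of G.
    msg_p = {p: sum(agree(g, p) * msg_g[g] for g in STATES) for p in STATES}
    total = sum(msg_p.values())
    return {p: value / total for p, value in msg_p.items()}


def protein_marginal_brute_force(cna_obs, rna_obs):
    """Same marginal obtained by enumerating the full joint distribution."""
    joint = {
        (g, p): agree(g, cna_obs) * agree(g, rna_obs) * agree(g, p)
        for g, p in product(STATES, STATES)
    }
    total = sum(joint.values())
    return {p: sum(v for (_, q), v in joint.items() if q == p) / total for p in STATES}


marginal = protein_marginal(cna_obs=1, rna_obs=1)
print(marginal)
```

On a tree-structured graph like this one, message passing gives exactly the same marginals as enumeration while avoiding the exponential cost of building the joint table, which is what makes inference on large pathway networks tractable.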

References

MPAC Analysis of TCGA Datasets: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides a comprehensive overview of the Multi-omic Pathway Analysis of Cells (MPAC) framework and its application to The Cancer Genome Atlas (TCGA) datasets. It is designed to give researchers, scientists, and drug development professionals a detailed understanding of the methodologies, data presentation, and visualization of results from MPAC analyses.

Introduction to MPAC and TCGA

The Cancer Genome Atlas (TCGA) is a landmark cancer genomics program that has molecularly characterized over 20,000 primary cancer and matched normal samples spanning 33 cancer types. This repository of genomic, epigenomic, transcriptomic, and proteomic data is a major resource for understanding the molecular basis of cancer. Multi-omic Pathway Analysis of Cells (MPAC) is a computational framework designed to interpret such multi-omic data by integrating it with prior knowledge from biological pathways. By leveraging a factor graph model, MPAC infers the activity levels of proteins and other pathway entities, enabling the identification of patient subgroups with distinct pathway alterations and key proteins with potential clinical relevance. A notable application of MPAC has been in the analysis of Head and Neck Squamous Cell Carcinoma (HNSCC) data from TCGA, where it identified patient subgroups related to immune responses that were not discernible from single-omic data types alone.

Data Presentation

The quantitative results derived from MPAC analysis of TCGA datasets are crucial for understanding the molecular subtypes of cancers and for identifying potential therapeutic targets. The following tables summarize illustrative findings from an MPAC analysis of the TCGA Head and Neck Squamous Cell Carcinoma (HNSCC) cohort.

Table 1: Significantly Altered Signaling Pathways in HNSCC Identified by MPAC

This table presents a selection of signaling pathways found to be significantly altered in the HNSCC patient cohort analyzed using MPAC. The enrichment score reflects the degree of pathway perturbation, and the false discovery rate (FDR) indicates the statistical significance of this alteration.

| Pathway Name | Enrichment Score | p-value | FDR q-value |
|---|---|---|---|
| PI3K-Akt Signaling | 2.87 | < 0.001 | < 0.01 |
| p53 Signaling Pathway | 2.54 | < 0.001 | < 0.01 |
| Cell Cycle | 2.31 | 0.002 | 0.015 |
| RTK-RAS Signaling | 2.15 | 0.005 | 0.028 |
| TGF-β Signaling | 1.98 | 0.011 | 0.045 |
| Wnt Signaling Pathway | 1.85 | 0.018 | 0.061 |
| Notch Signaling | 1.72 | 0.025 | 0.078 |

Table 2: Prognostic Significance of Key Proteins Identified by MPAC in HNSCC

MPAC analysis can identify key proteins whose activity levels are associated with patient survival outcomes. This table provides an illustrative list of such proteins, their associated hazard ratios (HR), and the statistical significance of their association with overall survival in the TCGA HNSCC cohort. An HR greater than 1 indicates that increased protein activity is associated with a worse prognosis, while an HR less than 1 suggests a better prognosis.

| Protein | Hazard Ratio (HR) | 95% Confidence Interval | p-value |
|---|---|---|---|
| PIK3CA | 1.89 | 1.42 - 2.51 | < 0.001 |
| TP53 | 1.75 | 1.33 - 2.30 | < 0.001 |
| EGFR | 1.62 | 1.25 - 2.10 | 0.002 |
| CDKN2A | 0.65 | 0.48 - 0.88 | 0.005 |
| NOTCH1 | 0.71 | 0.55 - 0.92 | 0.011 |
| FAT1 | 0.78 | 0.61 - 0.99 | 0.042 |
| CASP8 | 0.82 | 0.67 - 1.00 | 0.049 |

Experimental Protocols

The MPAC framework primarily utilizes DNA Copy Number Alteration (CNA) and RNA sequencing (RNA-seq) data from TCGA. The following sections detail the typical experimental protocols used by TCGA for generating this data.

DNA Copy Number Alteration (CNA) Analysis

TCGA employed the Affymetrix Genome-Wide Human SNP Array 6.0 for high-throughput genotyping and copy number analysis.

1. DNA Extraction and Quantification:

- Genomic DNA is extracted from tumor and matched normal tissue samples.
- DNA concentration and purity are assessed using spectrophotometry (e.g., NanoDrop) and fluorometry (e.g., PicoGreen).

2. DNA Digestion and Ligation:

- 250 ng of genomic DNA is digested with Nsp I and Sty I restriction enzymes in separate reactions.
- Adaptors are ligated to the digested DNA fragments.

3. PCR Amplification:

- The ligated DNA is amplified via polymerase chain reaction (PCR).
- Successful amplification is confirmed by visualizing the product size distribution on an agarose gel.

4. Fragmentation and Labeling:

- The amplified DNA is fragmented and end-labeled with a biotinylated nucleotide analog.

5. Hybridization:

- The labeled DNA is hybridized to the Affymetrix SNP 6.0 array for 16-18 hours in a hybridization oven.

6. Washing and Staining:

- The arrays are washed and stained with streptavidin-phycoerythrin on an Affymetrix Fluidics Station.

7. Array Scanning and Data Extraction:

- The arrays are scanned using an Affymetrix GeneChip Scanner 3000.
- The resulting image files (DAT) are processed to generate cell intensity files (CEL).

8. Data Analysis:

- The CEL files are processed using algorithms like Birdseed to make genotype calls.
- Copy number inference is performed using tools like the GenePattern CopyNumberInferencePipeline, which includes steps for signal calibration, normalization, and segmentation to identify regions of amplification and deletion.[1]

RNA Sequencing (RNA-seq) Analysis

TCGA utilized the Illumina HiSeq platform for transcriptome profiling.

1. RNA Extraction and Quantification:

- Total RNA is extracted from tumor and matched normal tissue samples.
- RNA quality and quantity are assessed using methods such as the Agilent Bioanalyzer to determine the RNA Integrity Number (RIN).

2. mRNA Isolation:

- Poly(A)-tailed mRNA is isolated from the total RNA using oligo(dT)-attached magnetic beads.

3. cDNA Synthesis:

- The isolated mRNA is fragmented and primed with random hexamers for first-strand cDNA synthesis using reverse transcriptase.
- Second-strand cDNA is synthesized using DNA polymerase I and RNase H.

4. Library Preparation:

- The double-stranded cDNA is end-repaired, A-tailed, and ligated to sequencing adapters.
- The adapter-ligated fragments are amplified by PCR to create the final cDNA library.

5. Sequencing:

- The prepared libraries are sequenced on an Illumina HiSeq instrument, generating millions of short reads.

6. Data Analysis:

- The raw sequencing reads are aligned to the human reference genome.
- Gene expression is quantified by counting the number of reads that map to each gene.
- The read counts are then normalized to account for differences in sequencing depth and gene length, often expressed as Fragments Per Kilobase of transcript per Million mapped reads (FPKM) or Transcripts Per Million (TPM).[2][3][4][5]
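The two normalizations named in the last step follow standard definitions, sketched below for a single sample. The gene names, counts, and lengths are hypothetical, and the final line applies the log10(FPKM + 1) transform that the MPAC guides mention as a starting point for TCGA data.

```python
from math import log10


def fpkm(counts, lengths_bp):
    """Fragments per kilobase of transcript per million mapped fragments."""
    total = sum(counts.values())
    return {g: counts[g] * 1e9 / (lengths_bp[g] * total) for g in counts}


def tpm(counts, lengths_bp):
    """Transcripts per million: length-normalize first, then scale to 1e6."""
    rate = {g: counts[g] / lengths_bp[g] for g in counts}
    scale = sum(rate.values())
    return {g: r * 1e6 / scale for g, r in rate.items()}


# Hypothetical fragment counts and transcript lengths (in base pairs) for one sample.
counts = {"GENE_A": 100, "GENE_B": 900_000, "GENE_C": 99_900}
lengths_bp = {"GENE_A": 1000, "GENE_B": 2000, "GENE_C": 500}

fpkm_values = fpkm(counts, lengths_bp)
tpm_values = tpm(counts, lengths_bp)
log_fpkm = {g: log10(v + 1) for g, v in fpkm_values.items()}
print(fpkm_values["GENE_A"], round(sum(tpm_values.values())))
```

TPM values always sum to one million within a sample, which makes them easier to compare across samples than FPKM values, whose per-sample total varies.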

Visualization

The following diagrams, generated using the Graphviz DOT language, illustrate key aspects of the MPAC analysis workflow and a relevant signaling pathway.

MPAC Computational Workflow

Caption: A flowchart illustrating the major steps in the MPAC computational workflow.

PI3K-Akt Signaling Pathway

Caption: A simplified diagram of the PI3K-Akt signaling pathway.

References

- 1. GenePattern - Affymetrix SNP6 Copy Number Inference Pipeline [genepattern.org]

- 2. Alternative preprocessing of RNA-Sequencing data in The Cancer Genome Atlas leads to improved analysis results - PMC [pmc.ncbi.nlm.nih.gov]

- 3. illumina.com [illumina.com]

- 4. GitHub - srp33/TCGA_RNASeq_Clinical [github.com]

- 5. RNA Sequencing | RNA-Seq methods & workflows [illumina.com]

Methodological & Application

Application Notes and Protocols for the MPAC R Package

For Researchers, Scientists, and Drug Development Professionals

These application notes provide a detailed guide to using the MPAC (Multi-omic Pathway Analysis of Cells) R package for inferring cancer pathway activities from multi-omic data. MPAC is a computational framework that integrates data from different genomic and transcriptomic platforms to identify altered signaling pathways, discover novel patient subgroups, and pinpoint key proteins with potential clinical relevance.[1][2]

This document outlines the complete workflow, from data preparation to downstream analysis and visualization, with detailed experimental protocols and code examples.

Introduction to MPAC

MPAC is an R package that leverages prior biological knowledge in the form of signaling pathways to interpret multi-omic datasets, primarily Copy Number Alteration (CNA) and RNA-sequencing (RNA-seq) data.[1] By modeling the interactions between genes and proteins within a pathway, MPAC can infer the activity of individual pathway components and the overall pathway perturbation in each patient sample.

The core workflow of MPAC involves several key steps:

- Data Preprocessing: Transforming CNA and RNA-seq data into a ternary state representation (repressed, normal, or activated).
- Pathway Activity Inference: Utilizing the PARADIGM (Pathway Recognition Algorithm using Data Integration on Genomic Models) algorithm to infer pathway member activities.
- Permutation Testing: Assessing the statistical significance of inferred pathway activities to filter out spurious results.
- Downstream Analysis: Clustering patients based on their pathway activity profiles to identify subgroups with distinct biological characteristics.
- Identification of Key Proteins: Pinpointing proteins that are central to the altered pathways in specific patient subgroups.

Installation

The MPAC R package is available from Bioconductor. To install it, you will need a recent version of R and the BiocManager package; with both in place, BiocManager::install("MPAC") installs the package.

Software Dependency: MPAC's runPrd() function requires the external software PARADIGM, which is available for Linux and macOS. Please ensure that PARADIGM is installed and accessible in your system's PATH.

Data Presentation and Experimental Protocols

A typical MPAC analysis utilizes CNA and RNA-seq data, along with a pathway file in a specific format for PARADIGM. The following sections detail the preparation of these input files and the step-by-step protocol for running an MPAC analysis.

Input Data Preparation

3.1.1. Copy Number Alteration (CNA) Data

CNA data should be prepared as a matrix where rows represent genes (using HUGO gene symbols) and columns represent patient samples. The values in the matrix should represent the gene-level copy number status, which will be converted into a ternary state: -1 (repressed/deletion), 0 (normal), and 1 (activated/amplification).

Table 1: Example of Processed CNA Input Data

| Gene | Patient_1 | Patient_2 | Patient_3 |
|---|---|---|---|
| TP53 | -1 | 0 | 0 |
| EGFR | 1 | 1 | 0 |
| MYC | 0 | 1 | -1 |
| ... | ... | ... | ... |

Protocol for Preparing CNA Data:

- Obtain Gene-Level Copy Number Data: Start with gene-level copy number data, such as GISTIC2 scores or log2 ratios. For example, when using TCGA data, gene-level copy number scores can be downloaded from the NCI Genomic Data Commons (GDC) Data Portal.[3]
- Discretize to Ternary States: Convert the continuous copy number values into three states. A common approach is to use a threshold-based method. For instance, GISTIC2 scores can be categorized as:
  - -1 (repressed) for scores indicating a deletion (e.g., < -0.3).
  - 0 (normal) for scores close to zero (e.g., between -0.3 and 0.3).
  - 1 (activated) for scores indicating an amplification (e.g., > 0.3).

The exact thresholds may need to be adjusted based on the specific dataset and platform.
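The threshold-based discretization above can be sketched as follows. The ±0.3 cutoff is the example value from the text; the function names and the continuous scores in `raw_scores` are hypothetical and were chosen only so that the output reproduces the ternary values shown in Table 1.

```python
def discretize_cna(score, threshold=0.3):
    """Map a continuous copy-number score to -1, 0, or 1."""
    if score > threshold:
        return 1   # activated / amplification
    if score < -threshold:
        return -1  # repressed / deletion
    return 0       # normal


def discretize_matrix(matrix, threshold=0.3):
    """Apply the cutoff to a gene -> {sample -> score} mapping."""
    return {
        gene: {sample: discretize_cna(score, threshold) for sample, score in row.items()}
        for gene, row in matrix.items()
    }


# Hypothetical continuous scores (genes as rows, patient samples as columns).
raw_scores = {
    "TP53": {"Patient_1": -0.82, "Patient_2": 0.05, "Patient_3": -0.10},
    "EGFR": {"Patient_1": 1.40, "Patient_2": 0.65, "Patient_3": 0.12},
    "MYC": {"Patient_1": 0.20, "Patient_2": 0.95, "Patient_3": -0.47},
}

cna_states = discretize_matrix(raw_scores)
for gene, row in cna_states.items():
    print(gene, row)
```

Keeping the cutoff as a parameter makes it easy to check how sensitive the resulting states are to the chosen threshold for a given dataset and platform.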

3.1.2. RNA-seq Data

Similar to the CNA data, the RNA-seq data should be a matrix with genes as rows and samples as columns. The expression values will also be converted into a ternary state.

Table 2: Example of Processed RNA-seq Input Data

| Gene | Patient_1 | Patient_2 | Patient_3 |
|---|---|---|---|
| TP53 | -1 | 0 | 1 |
| EGFR | 1 | 1 | 0 |
| MYC | 1 | 1 | -1 |
| ... | ... | ... | ... |

Protocol for Preparing RNA-seq Data:

- Obtain Gene Expression Data: Start with normalized gene expression data, such as FPKM, TPM, or RSEM values. For TCGA data, log10(FPKM + 1) values are a common starting point.[3]
- Discretize to Ternary States: Convert the continuous expression values into three states relative to a baseline (e.g., normal tissue).
  - Fit a Gaussian distribution to the expression levels of each gene in a set of normal samples.
  - For each tumor sample, if a gene's expression level is within a certain range of the mean of the normal distribution (e.g., ±2 standard deviations), it is classified as 0 (normal).
  - If the expression is below this range, it is -1 (repressed).
  - If the expression is above this range, it is 1 (activated).

3.1.3. PARADIGM Pathway File

MPAC uses pathway information formatted for the PARADIGM software. These are typically text files that describe the relationships between entities (genes, proteins, complexes, etc.) in a pathway. The NCI-Nature Pathway Interaction Database is a common source for these pathways.[4][5] The format is a tab-delimited file specifying interactions.

Table 3: Simplified Example of PARADIGM Pathway File Format

| Source | Interaction | Target |
|---|---|---|
| EGFR | ACTIVATES | RAS |
| RAS | ACTIVATES | RAF |
| RAF | ACTIVATES | MEK |
| MEK | ACTIVATES | ERK |
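A tab-delimited interaction list like the simplified one in Table 3 can be read into an edge list and traversed as sketched below. The three-column layout mirrors the simplified table only and should not be taken as the actual PARADIGM file specification; the function names and the inline `PATHWAY_TEXT` are hypothetical.

```python
import csv
import io
from collections import deque

# Inline stand-in for a tab-delimited pathway file: source, interaction, target.
PATHWAY_TEXT = (
    "EGFR\tACTIVATES\tRAS\n"
    "RAS\tACTIVATES\tRAF\n"
    "RAF\tACTIVATES\tMEK\n"
    "MEK\tACTIVATES\tERK\n"
)


def read_interactions(handle):
    """Return (source, interaction, target) tuples, skipping malformed rows."""
    return [tuple(row) for row in csv.reader(handle, delimiter="\t") if len(row) == 3]


def downstream_entities(edges, start):
    """Breadth-first search for every entity reachable from start."""
    neighbours = {}
    for source, _, target in edges:
        neighbours.setdefault(source, []).append(target)
    seen, queue = [], deque([start])
    while queue:
        for target in neighbours.get(queue.popleft(), []):
            if target not in seen:
                seen.append(target)
                queue.append(target)
    return seen


edges = read_interactions(io.StringIO(PATHWAY_TEXT))
print(downstream_entities(edges, "EGFR"))
```

A quick traversal like this is a convenient sanity check that the entity names in a pathway file match the gene symbols used in the CNA and RNA-seq matrices.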

MPAC Analysis Workflow

The following protocol outlines the main steps of an MPAC analysis using the prepared data.

3.2.1. Step 1: Prepare Input for PARADIGM

The first step in the R code is to prepare the CNA and RNA-seq data into a format suitable for PARADIGM.

3.2.2. Step 2: Run PARADIGM to Infer Pathway Levels

This step executes the PARADIGM algorithm to calculate the Inferred Pathway Levels (IPLs).

3.2.3. Step 3: Run PARADIGM on Permuted Data

To create a null distribution for filtering spurious IPLs, PARADIGM is run on permuted versions of the input data.

3.2.4. Step 4: Collect and Filter IPLs

The IPLs from the real and permuted runs are collected and then filtered based on the null distribution.

Table 4: Example of Inferred Pathway Levels (IPLs) - Unfiltered

| Entity | Patient_1 | Patient_2 | Patient_3 |
|---|---|---|---|
| EGFR | 1.87 | 1.92 | 0.12 |
| RAS | 1.56 | 1.78 | 0.05 |
| RAF | 1.45 | 1.65 | -0.02 |
| ... | ... | ... | ... |

Table 5: Example of Filtered Inferred Pathway Levels (IPLs)

| Entity | Patient_1 | Patient_2 | Patient_3 |
|---|---|---|---|
| EGFR | 1.87 | 1.92 | NA |
| RAS | 1.56 | 1.78 | NA |
| RAF | 1.45 | 1.65 | NA |
| ... | ... | ... | ... |

Note: In the filtered data, non-significant IPLs are set to NA.
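The move from Table 4 to Table 5 can be sketched as a comparison of each real IPL against IPLs from permuted runs. The exact filtering rule is not given in the text, so the Python sketch below assumes one plausible rule: keep an IPL only if it falls outside the null median ± k × MAD (median absolute deviation). The function `filter_ipl`, the parameter `k`, and the null values are all hypothetical; `None` stands in for NA.

```python
from statistics import median

def filter_ipl(real_ipl, null_ipls, k=3.0):
    """Return the IPL if it lies outside median +/- k * MAD of the null, else None (NA).

    Assumed rule for illustration; not necessarily the rule MPAC applies.
    """
    med = median(null_ipls)
    mad = median([abs(x - med) for x in null_ipls])
    lower, upper = med - k * mad, med + k * mad
    if real_ipl < lower or real_ipl > upper:
        return real_ipl
    return None

# Made-up IPLs from permuted runs for one entity in one patient
null = [-0.10, 0.02, 0.05, 0.08, 0.15]
print(filter_ipl(1.87, null))  # 1.87
print(filter_ipl(0.12, null))  # None
```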

3.2.5. Step 5: Downstream Analysis - Patient Clustering

Patients can be clustered based on their filtered pathway activity profiles to identify subgroups.
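As a rough illustration of grouping patients by pathway profile, the Python sketch below applies a single-linkage cut to the example values from Table 5. The text does not state which clustering method is used, so the distance measure, the `cutoff` value, and the treatment of NA as 0 are all assumptions made for this sketch.

```python
from math import sqrt

def distance(a, b):
    """Euclidean distance between two profiles; None (NA) entries count as 0."""
    return sqrt(sum(((x or 0.0) - (y or 0.0)) ** 2 for x, y in zip(a, b)))

def cluster(profiles, cutoff):
    """Single-linkage grouping: patients closer than cutoff end up in one cluster."""
    clusters = []
    for name in profiles:
        linked = [c for c in clusters
                  if any(distance(profiles[name], profiles[m]) < cutoff for m in c)]
        merged = {name}.union(*linked)
        clusters = [c for c in clusters if c not in linked] + [merged]
    return clusters

# Filtered IPLs for EGFR, RAS, RAF from Table 5 (NA written as None)
profiles = {
    "Patient_1": [1.87, 1.56, 1.45],
    "Patient_2": [1.92, 1.78, 1.65],
    "Patient_3": [None, None, None],
}
groups = cluster(profiles, cutoff=1.0)
print([sorted(g) for g in groups])
```

With these values, Patient_1 and Patient_2 fall into one group and Patient_3 into another.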

Visualization

MPAC provides functions to visualize the results, which helps in interpreting complex multi-omic data.

Experimental Workflow Diagram

The overall workflow of the MPAC analysis can be visualized to illustrate the logical flow of data processing and analysis steps.

Signaling Pathway Diagram

An MPAC analysis can reveal alterations in specific signaling pathways. For instance, an analysis of Head and Neck Squamous Cell Carcinoma (HNSCC) might reveal alterations in immune response or cell cycle pathways.[3] The following is a simplified representation of a generic immune response signaling pathway, which could be investigated with MPAC.

Conclusion

The MPAC R package provides a framework for analyzing multi-omic data in the context of biological pathways. By following the protocols outlined in these application notes, researchers, scientists, and drug development professionals can gain insight into the molecular mechanisms underlying complex diseases like cancer, identify patient subgroups for targeted therapies, and discover key proteins that may serve as biomarkers or therapeutic targets. The integration of CNA and RNA-seq data, combined with statistical filtering against permuted data and downstream analysis tools, makes MPAC a useful addition to the computational biologist's toolkit.

References

Application Note & Protocol: A Step-by-Step Guide to Multiplexed Profiling of Active Caspases (MPAC) Analysis

The acronym "MPAC" has several meanings. In property assessment it refers to the Municipal Property Assessment Corporation, and in computational biology it can refer to Multi-omic Pathway Analysis of Cells. This guide covers Multiplexed Profiling of Active Caspases (MPAC), a laboratory technique for simultaneously measuring the activity of multiple caspase enzymes.

Audience: Researchers, scientists, and drug development professionals.

Introduction:

Apoptosis, or programmed cell death, is a critical process in normal development and tissue homeostasis. Its dysregulation is implicated in numerous diseases, including cancer and neurodegenerative disorders. A key feature of apoptosis is the activation of a family of cysteine proteases called caspases.[1][2] Caspases exist as inactive zymogens and are activated in a cascade fashion.[3] This cascade is generally divided into initiator caspases (e.g., caspase-8 and -9) and effector caspases (e.g., caspase-3 and -7).[1][3] The ability to simultaneously measure the activity of multiple caspases provides a more comprehensive understanding of the apoptotic signaling pathway and the mechanism of action of potential drug candidates.[4] This application note provides a detailed guide to performing a multiplexed caspase activity assay using fluorometric substrates.

I. Principle of the Assay

This protocol describes a multiplexed assay to simultaneously measure the activity of an initiator caspase (caspase-8 or -9) and an effector caspase (caspase-3/7) in cell lysates using a microplate-based fluorometric method. The assay utilizes specific peptide substrates conjugated to different fluorophores. When a specific caspase is active, it cleaves its corresponding substrate, releasing the fluorophore and generating a fluorescent signal.[1][3] By using substrates with spectrally distinct fluorophores, the activity of multiple caspases can be measured in the same well.[5]

II. Experimental Workflow

The overall workflow for the MPAC analysis is depicted below.

III. Detailed Experimental Protocol

This protocol is a general guideline and may require optimization for specific cell types and experimental conditions.

A. Materials and Reagents:

- Cell culture medium and supplements
- 96-well black, clear-bottom microplates
- Test compounds/inducers of apoptosis (e.g., Staurosporine)
- Phosphate-buffered saline (PBS)
- Cell lysis buffer
- Protein assay kit (e.g., BCA)
- Multiplex Caspase Assay Kit (containing specific fluorogenic substrates for initiator and effector caspases, and assay buffer)
- Microplate reader with fluorescence detection capabilities

B. Procedure:

- Cell Seeding and Treatment:
  - Seed cells in a 96-well plate at a predetermined density (e.g., 5,000 - 20,000 cells/well) and allow them to adhere overnight.
  - Treat cells with the desired concentrations of test compounds or a known apoptosis inducer (positive control). Include untreated cells as a negative control.
  - Incubate for the desired treatment period (e.g., 4-24 hours).
- Cell Lysis:
  - After treatment, gently remove the culture medium.
  - Wash the cells once with 100 µL of ice-cold PBS.
  - Add 50-100 µL of ice-cold cell lysis buffer to each well.
  - Incubate the plate on ice for 10-15 minutes with gentle shaking.
- Lysate Preparation and Quantification:
  - Centrifuge the plate at a low speed (e.g., 200 x g) for 5 minutes to pellet cell debris.
  - Carefully transfer the supernatant (cell lysate) to a new 96-well plate or microcentrifuge tubes.
  - Determine the protein concentration of each lysate sample using a standard protein assay (e.g., BCA). This is crucial for normalizing caspase activity.
- Assay Reaction Setup:
  - In a new 96-well black, clear-bottom plate, add the same amount of lysate protein (e.g., 20-50 µg) to each well.
  - Adjust the volume of each well to be equal (e.g., 50 µL) with the assay buffer provided in the kit.
- Addition of Caspase Substrates:
  - Prepare the caspase substrate working solution according to the manufacturer's instructions, typically by diluting the concentrated substrate stocks into the assay buffer.[5]
  - Add 50 µL of the substrate working solution to each well of the assay plate.
- Incubation:
  - Incubate the plate at 37°C for 1-2 hours, protected from light.[6] The optimal incubation time may need to be determined empirically.
- Fluorescence Measurement:
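For the Assay Reaction Setup step above, the lysate and buffer volumes per well follow directly from the measured protein concentration. The Python sketch below shows the arithmetic. The helper name `reaction_volumes` and its default values (20 µg protein, 50 µL total) are illustrative choices taken from the example ranges in the protocol, not kit requirements.

```python
def reaction_volumes(protein_conc_ug_per_ul, target_ug=20.0, total_ul=50.0):
    """Return (lysate_ul, buffer_ul) that deliver target_ug protein in total_ul."""
    lysate_ul = target_ug / protein_conc_ug_per_ul
    if lysate_ul > total_ul:
        # The lysate is too dilute to reach the target amount in this volume
        raise ValueError("lysate too dilute for the chosen protein amount and volume")
    return lysate_ul, total_ul - lysate_ul

# A lysate at 2.0 µg/µL needs 10 µL lysate plus 40 µL assay buffer
print(reaction_volumes(2.0))  # (10.0, 40.0)
```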

C. Data Analysis:

- Background Subtraction: Subtract the fluorescence values from a blank well (containing only assay buffer and substrate) from all experimental wells.
- Normalization: Normalize the background-subtracted fluorescence values to the protein concentration of each sample.
- Fold-Change Calculation: Calculate the fold-increase in caspase activity by dividing the normalized fluorescence of the treated samples by the normalized fluorescence of the untreated control.
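The three data analysis steps above reduce to a short calculation, sketched here in Python. The function names and the fluorescence readings (in relative fluorescence units) are hypothetical values chosen for illustration. The normalization divides by the amount of protein loaded per well, which is one way to apply the normalization step.

```python
def normalized_activity(raw_rfu, blank_rfu, protein_ug):
    """Background-subtract a reading and normalize it to the protein loaded."""
    return (raw_rfu - blank_rfu) / protein_ug

def fold_change(treated, control):
    """Ratio of normalized treated signal to normalized untreated control."""
    return treated / control

# Made-up readings for one caspase channel, 20 µg protein per well
blank = 150.0
control = normalized_activity(650.0, blank, 20.0)   # 25.0 RFU per µg
treated = normalized_activity(4400.0, blank, 20.0)  # 212.5 RFU per µg
print(fold_change(treated, control))  # 8.5
```

In a multiplexed assay the same calculation is repeated per fluorophore channel, each with its own blank reading.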

IV. Data Presentation

The results of an MPAC analysis are often presented as fold-change in caspase activity compared to an untreated control.

| Treatment (10 µM unless noted) | Caspase-8 Activity (Fold Change) | Caspase-3/7 Activity (Fold Change) |
|---|---|---|
| Untreated Control | 1.0 | 1.0 |
| Staurosporine (1 µM) | 4.2 | 8.5 |
| Compound A | 3.8 | 7.9 |
| Compound B | 1.2 | 1.5 |
| Compound C | 0.9 | 0.8 |

V. Signaling Pathway Visualization

The MPAC assay is designed to probe key nodes in the apoptotic signaling cascade. The diagram below illustrates the simplified extrinsic and intrinsic apoptosis pathways, highlighting the positions of initiator and effector caspases.

VI. Conclusion

Multiplexed profiling of active caspases is a powerful tool in drug discovery and cell biology research.[4] It provides quantitative data on the activation of key apoptotic mediators, allowing for a more detailed mechanistic understanding of compound activity and cellular responses to stimuli. By following this detailed protocol, researchers can obtain reliable and reproducible data to advance their studies.

References

- 1. creative-bioarray.com [creative-bioarray.com]

- 2. Microplate Assays for Caspase Activity | Thermo Fisher Scientific - SG [thermofisher.com]

- 3. Caspase-3 Activity Assay Kit | Cell Signaling Technology [cellsignal.com]

- 4. Multiplex caspase activity and cytotoxicity assays - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. abcam.com [abcam.com]

- 6. Caspase assay selection guide | Abcam [abcam.com]

Application Notes and Protocols for the MPAC Bioconductor Package

For Researchers, Scientists, and Drug Development Professionals

These application notes provide a detailed guide to the installation and use of the MPAC (Multi-omic Pathway Analysis of Cells) Bioconductor package. MPAC is a computational framework designed to integrate multi-omic data, primarily Copy Number Alteration (CNA) and RNA-sequencing data, to infer pathway activities and identify patient subgroups with distinct molecular profiles.

Introduction to MPAC

MPAC is an R package that leverages prior biological knowledge from pathway databases to analyze multi-omic datasets. It aims to move beyond single-omic analyses to provide a more comprehensive understanding of cellular mechanisms, particularly in complex diseases like cancer. At its core, MPAC determines the activation or repression state of genes based on their CNA and RNA expression levels. This information is then used to infer the activity of entire pathways, predict novel patient subgroups with distinct pathway alteration profiles, and identify key proteins that may have clinical relevance.[1]

The MPAC workflow is a multi-step process that includes data preparation, inference of pathway activity, and downstream analysis to identify significant biological insights.

Installation

Prerequisites

Before installing the MPAC package, ensure you have a current version of R (>= 4.4.0) and the Bioconductor package manager, BiocManager, installed.

To install BiocManager, open an R session and run `install.packages("BiocManager")`.

MPAC Installation

Once BiocManager is installed, you can install the MPAC package from the Bioconductor repository by running `BiocManager::install("MPAC")`.

This will install the MPAC package and all of its dependencies.

The MPAC Workflow

The MPAC workflow can be conceptualized as a series of interconnected steps, from initial data processing to the final identification of key biological features.

Experimental Protocols

This section provides a detailed, step-by-step protocol for a typical MPAC analysis. It is essential to have your CNA and RNA-seq data pre-processed and formatted as described in the "Data Preparation" section.

Data Preparation

Properly formatted input data is crucial for a successful MPAC analysis. MPAC requires two main data types:

- Copy Number Alteration (CNA) Data: A matrix where rows represent genes and columns represent samples. The values should indicate the copy number status of each gene in each sample.
- RNA-sequencing Data: A matrix of normalized gene expression values (e.g., RSEM, FPKM, or TPM) where rows are genes and columns are samples.

Table 1: Input Data Summary

| Data Type | Format | Rows | Columns | Values |
|---|---|---|---|---|
| CNA Data | Matrix | Genes | Samples | Numeric (e.g., GISTIC scores) |
| RNA-seq Data | Matrix | Genes | Samples | Numeric (normalized counts) |
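Because both inputs are gene-by-sample matrices, a common pre-check is to restrict them to the genes and samples they share. The Python sketch below illustrates that check on nested dictionaries. It is a generic illustration rather than part of the MPAC package, and the helper `align_inputs` and the example values are hypothetical.

```python
def align_inputs(cna, rna):
    """Restrict two gene -> {sample: value} tables to shared genes and samples."""
    genes = sorted(set(cna) & set(rna))
    samples = None
    for table in (cna, rna):
        for gene in genes:
            cols = set(table[gene])
            samples = cols if samples is None else samples & cols
    samples = sorted(samples or [])

    def subset(table):
        return {g: {s: table[g][s] for s in samples} for g in genes}

    return subset(cna), subset(rna)

# Made-up inputs: RAS lacks RNA data, MEK lacks CNA data, S3 lacks CNA data
cna = {"EGFR": {"S1": 1.2, "S2": 0.0}, "RAS": {"S1": -0.4, "S2": 0.1}}
rna = {"EGFR": {"S1": 35.0, "S2": 12.5, "S3": 8.0},
       "MEK": {"S1": 4.0, "S2": 6.1, "S3": 5.5}}
cna_aligned, rna_aligned = align_inputs(cna, rna)
print(sorted(cna_aligned), sorted(cna_aligned["EGFR"]))  # ['EGFR'] ['S1', 'S2']
```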

Step-by-Step Protocol