CID 78070001

Description

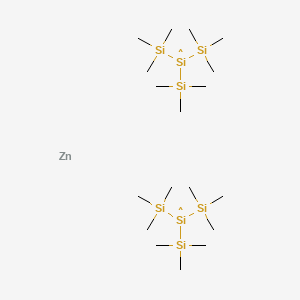

According to Figure 1 of the cited source, its chemical structure was characterized by GC-MS, with vacuum distillation used for fractionation; these analyses revealed distinct chromatographic profiles and mass-spectral fragmentation patterns. Further purification and characterization steps, such as fraction-specific content analysis after vacuum distillation, highlight the compound's complexity and the need for advanced analytical workflows.

Properties

| Property | Value |
|---|---|
| CAS No. | 108168-22-5 |
| Molecular Formula | C18H54Si8Zn |
| Molecular Weight | 560.69 g/mol |
| IUPAC Name | tris(trimethylsilyl)silicon;zinc |
| InChI | InChI=1S/2C9H27Si4.Zn/c2*1-11(2,3)10(12(4,5)6)13(7,8)9;/h2*1-9H3; |
| InChI Key | JOPVAAWEWWZZDK-UHFFFAOYSA-N |
| SMILES | C[Si](C)(C)[Si]([Si](C)(C)C)[Si](C)(C)C.C[Si](C)(C)[Si]([Si](C)(C)C)[Si](C)(C)C.[Zn] |
| Synonyms | Bis[tris(trimethylsilyl)silyl]zinc |
| Origin of Product | United States |
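The molecular weight in the table can be checked directly from the molecular formula. The sketch below assumes IUPAC conventional atomic weights (C 12.011, H 1.008, Si 28.085, Zn 65.38 g/mol); the helper name `molecular_weight` is hypothetical, and the parser only handles flat formulas without parentheses or charges.

```python
import re

# IUPAC conventional atomic weights in g/mol (assumed values); only the
# elements present in C18H54Si8Zn are included.
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "Si": 28.085, "Zn": 65.38}


def molecular_weight(formula: str) -> float:
    """Sum atomic weights for a flat formula such as 'C18H54Si8Zn'.

    Each element symbol may be followed by a count; a missing count means 1.
    Parentheses, hydrates, and charges are not supported.
    """
    total = 0.0
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total


print(f"{molecular_weight('C18H54Si8Zn'):.2f}")  # 560.69
```

The contributions are 216.198 (C) + 54.432 (H) + 224.680 (Si) + 65.38 (Zn) = 560.69 g/mol, matching the tabulated value.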

Preparation Methods

Synthetic Routes and Reaction Conditions

CID 78070001 can be synthesized by reacting tris(trimethylsilyl)silyllithium with zinc chloride. The reaction is typically carried out in tetrahydrofuran (THF) under an inert atmosphere to exclude moisture and oxygen. An alternative route reacts tris(trimethylsilyl)silane with diethylzinc.
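Written as balanced equations, the two routes might look as follows. The 2:1 stoichiometry is an assumption obtained by balancing each reaction against the molecular formula C18H54Si8Zn (two Si(SiMe3)3 groups per zinc atom); the source does not state reagent ratios. Me denotes CH3 and Et denotes C2H5.

```latex
\begin{align}
2\,(\mathrm{Me_3Si})_3\mathrm{SiLi} + \mathrm{ZnCl_2}
  &\xrightarrow{\text{THF, inert atm.}} [(\mathrm{Me_3Si})_3\mathrm{Si}]_2\mathrm{Zn} + 2\,\mathrm{LiCl} \\
2\,(\mathrm{Me_3Si})_3\mathrm{SiH} + \mathrm{ZnEt_2}
  &\longrightarrow [(\mathrm{Me_3Si})_3\mathrm{Si}]_2\mathrm{Zn} + 2\,\mathrm{C_2H_6}
\end{align}
```

Under these assumptions, the first route is a salt metathesis that leaves lithium chloride as the byproduct, while the second releases ethane as each ethyl group on zinc is replaced by a silyl group.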

Industrial Production Methods

Industrial production methods for bis[tris(trimethylsilyl)silyl]zinc are not well documented. Production would presumably scale up the laboratory routes, with stringent control of reaction conditions to maintain the purity and yield of the compound.

Chemical Reactions Analysis

Types of Reactions

CID 78070001 undergoes various types of chemical reactions, including:

Oxidation: The compound can be oxidized to zinc oxide and oxidized silicon-containing byproducts.

Reduction: It can act as a reducing agent toward suitable substrates.

Substitution: The silyl groups can be substituted with other functional groups under appropriate conditions.

Common Reagents and Conditions

Common reagents used in reactions with bis[tris(trimethylsilyl)silyl]zinc include halogens, acids, and bases. The reactions are typically carried out under an inert atmosphere to prevent oxidation and hydrolysis.

Major Products

The major products formed from these reactions depend on the specific reagents and conditions used. For example, oxidation reactions yield zinc oxide, while substitution reactions can produce a variety of organosilicon compounds.

Scientific Research Applications

CID 78070001 has several applications in scientific research:

Chemistry: It is used as a silyl-transfer reagent in organic synthesis, particularly for forming carbon-silicon bonds.

Biology: Its potential use in biological systems is being explored, although detailed applications are still under investigation.

Medicine: Research is ongoing to determine its potential medicinal applications, particularly in drug delivery systems.

Mechanism of Action

The mechanism of action of bis[tris(trimethylsilyl)silyl]zinc rests on the nucleophilic character of its zinc-silicon bonds, which lets it donate electron density to, and form bonds with, a range of substrates. The bulky tris(trimethylsilyl)silyl groups sterically shield the zinc center, which favors selective reactions. The specific targets and pathways depend on the application and reaction conditions.

Comparison with Similar Compounds

Comparison with Structurally Similar Compounds

To contextualize CID 78070001, we compare it with oscillatoxin derivatives and other compounds sharing structural motifs or functional groups. Key comparisons are summarized in Table 1.

Table 1: Structural and Physicochemical Comparison of this compound and Analogues

Key Observations

Its exact backbone remains uncharacterized.

Functional Diversity: Unlike this compound, oscillatoxins exhibit defined biological activities, such as cytotoxicity and protease inhibition, which are linked to their macrocyclic structures.

Synthetic vs. Natural Origins: CID 57892468 (CAS 899809-61-1) and CID 72863 (CAS 1761-61-1) are synthetic compounds with well-documented synthetic pathways and applications in drug discovery, in contrast to the natural-product-like profile of this compound.

Solubility and Bioavailability: CID 57892468 shows moderate solubility (0.019–0.0849 mg/mL), while CID 72863 is considerably more soluble (0.687 mg/mL). These properties are critical for pharmaceutical applications but remain unexplored for this compound.

Q & A

Basic Research Questions

Q. How should researchers design controlled experiments to compare Transformer-based models (e.g., BERT vs. RoBERTa) on specific NLP tasks?

- Methodological Answer:

- Task Selection: Choose tasks that align with the models' pretraining objectives (e.g., masked language modeling for BERT, sequence generation for BART).

- Dataset Preprocessing: Use standardized benchmarks (e.g., WMT for translation, GLUE for general language understanding) to reduce variability, and apply the same text cleaning and maximum sequence length to every model. Because each pretrained model ships with its own tokenizer (WordPiece for BERT, byte-level BPE for RoBERTa), report tokenizer differences rather than forcing one scheme on both.

- Hyperparameter Consistency: Match training epochs, batch sizes, and learning rates for a fair comparison. This control matters because RoBERTa showed that training duration and data size alone can substantially change performance.

- Evaluation Metrics: Use task-specific metrics (e.g., BLEU for translation, F1 for QA) and assess statistical significance with bootstrap resampling.
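The bootstrap significance check above can be sketched as a paired bootstrap test. This is a minimal sketch assuming per-example scores (e.g., 1.0 if correct, else 0.0) for both models on the same test set in the same order; the function name and the sample data are hypothetical. For corpus-level metrics such as BLEU, the metric would be recomputed on each resample instead of summing per-example scores.

```python
import random


def paired_bootstrap_pvalue(scores_a, scores_b, n_resamples=1000, seed=0):
    """Approximate one-sided p-value for "model A beats model B".

    Resamples test examples with replacement (keeping A/B pairs together)
    and returns the fraction of resamples in which A does NOT outscore B.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("score lists must be non-empty and aligned per example")
    rng = random.Random(seed)  # fixed seed so the test is reproducible
    n = len(scores_a)
    not_better = 0
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        delta = sum(scores_a[i] - scores_b[i] for i in idx)
        if delta <= 0:
            not_better += 1
    return not_better / n_resamples


# Hypothetical per-example correctness for two models on a 100-item test set.
model_a = [1] * 90 + [0] * 10
model_b = [1] * 80 + [0] * 20
print(paired_bootstrap_pvalue(model_a, model_b))
```

Keeping the pairs together is the key design choice: it compares both models on the same resampled examples, so example difficulty cancels out.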

Q. What frameworks guide the formulation of research questions for studying model generalization in pre-trained language models?

- Methodological Answer:

- PICOT Framework: Define Population (e.g., model architecture), Intervention (e.g., fine-tuning strategy), Comparison (e.g., baseline models), Outcome (e.g., accuracy), and Timeframe (e.g., training iterations).

- FINER Criteria: Ensure questions are Feasible (computationally tractable), Interesting (addresses gaps), Novel (e.g., exploring understudied tasks), Ethical (data usage compliance), and Relevant (impact on NLP benchmarks).

Q. How can researchers ensure reproducibility in NLP experiments involving Transformer architectures?

- Methodological Answer:

- Detailed Documentation: Follow academic guidelines (e.g., Materials & Methods sections) to specify model configurations, datasets, and code versions.

- Open-Source Releases: Share pretrained weights, preprocessing scripts, and hyperparameters, as the BERT and RoBERTa authors did.

- Cross-Validation: Use k-fold validation on diverse datasets to confirm consistency.
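For the cross-validation step, fold assignments should themselves be reproducible. A minimal sketch, assuming a seeded shuffle and near-equal fold sizes; `k_fold_indices` is a hypothetical helper, not a library API.

```python
import random


def k_fold_indices(n_examples, k=5, seed=0):
    """Yield (train_idx, val_idx) lists for k-fold cross-validation.

    Indices are shuffled once with a fixed seed, so the same folds are
    produced on every run; fold sizes differ by at most one.
    """
    if not 2 <= k <= n_examples:
        raise ValueError("k must be between 2 and the number of examples")
    indices = list(range(n_examples))
    random.Random(seed).shuffle(indices)
    # The first (n_examples % k) folds receive one extra example.
    fold_sizes = [n_examples // k + (1 if i < n_examples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


for train_idx, val_idx in k_fold_indices(10, k=3):
    print(len(train_idx), sorted(val_idx))
```

Logging the seed alongside the results lets other researchers regenerate the exact splits.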

Q. What are the best practices for collecting and annotating data in NLP research?

- Methodological Answer:

- Mixed-Method Design: Combine qualitative (e.g., expert interviews) and quantitative (e.g., crowdsourced labeling) approaches.

- Annotation Guidelines: Provide clear instructions to annotators, including examples and edge cases. Measure inter-annotator agreement (e.g., Cohen’s κ).

- Bias Mitigation: Stratify datasets to represent diverse linguistic patterns and demographics.
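Cohen's κ, mentioned in the annotation guidelines above, corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). A minimal sketch for two annotators labeling the same items; the function name and labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's marginal label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.667
```

Here raw agreement is 5/6, but chance agreement is already 0.5, so κ is 2/3.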

Q. How to structure a research paper to highlight contributions in NLP model development?

- Methodological Answer:

- IMRaD Format: Use Introduction (gap identification), Methods (reproducible protocols), Results (visualized metrics), and Discussion (comparison with prior work).

- Highlight Novelty: Emphasize architectural innovations (e.g., BART’s denoising pretraining) or training improvements (e.g., RoBERTa’s optimized hyperparameters).

Advanced Research Questions

Q. How can researchers resolve contradictions in performance metrics when evaluating models across heterogeneous datasets?

- Methodological Answer:

- Meta-Analysis: Aggregate results from multiple studies (e.g., GLUE leaderboard comparisons) to identify trends.

- Sensitivity Analysis: Test how variations in data splits or preprocessing affect outcomes. For example, BART’s performance varied significantly with different noising strategies.

- Error Analysis: Categorize failure cases (e.g., syntactic vs. semantic errors) to pinpoint model limitations.
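One way to carry out the meta-analysis step when datasets are heterogeneous is a random-effects model. The sketch below uses the DerSimonian-Laird estimator, a standard technique chosen here as an illustration rather than one named in the text; it assumes each dataset contributes an effect (e.g., the accuracy difference between two models) with a known standard error. A nonzero `tau2` signals between-dataset heterogeneity, which is exactly the kind of contradiction this question addresses.

```python
import math


def random_effects_pool(effects, std_errors):
    """DerSimonian-Laird random-effects pooling.

    effects: per-dataset estimates (e.g. model A minus model B accuracy).
    std_errors: their standard errors.
    Returns (pooled_effect, pooled_se, tau2); tau2 is the estimated
    between-dataset variance (0.0 means no detectable heterogeneity).
    """
    k = len(effects)
    if k == 0 or k != len(std_errors):
        raise ValueError("need one standard error per effect")
    w = [1.0 / se ** 2 for se in std_errors]  # fixed-effect (inverse-variance) weights
    sum_w = sum(w)
    fixed = sum(wi * e for wi, e in zip(w, effects)) / sum_w
    q = sum(wi * (e - fixed) ** 2 for wi, e in zip(w, effects))  # Cochran's Q
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]  # random-effects weights
    sum_ws = sum(w_star)
    pooled = sum(wi * e for wi, e in zip(w_star, effects)) / sum_ws
    return pooled, math.sqrt(1.0 / sum_ws), tau2


# Hypothetical accuracy gains (percentage points) on three datasets.
print(random_effects_pool([1.2, 3.5, -0.4], [0.5, 0.8, 0.6]))
```

When `tau2` is zero the result reduces to the ordinary inverse-variance (fixed-effect) average; when it is large, the wider pooled standard error warns against over-interpreting any single benchmark.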

Q. What strategies optimize pretraining for language models under computational constraints?

- Methodological Answer:

- Dynamic Masking: Sample a new masking pattern each time a sequence is fed to the model, instead of fixing the masks once during preprocessing, as in RoBERTa.

- Gradient Accumulation: Process a large effective batch as several smaller micro-batches, summing their gradients before each optimizer step, to reduce GPU memory usage.

- Distillation: Train smaller models (e.g., DistilBERT) using knowledge distillation from larger models.
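The dynamic-masking idea can be sketched in a few lines. This sketch assumes BERT's masking scheme, which RoBERTa retains: about 15% of positions are selected, and of those 80% become the mask token, 10% a random token, and 10% are left unchanged. Each position is selected independently here. The function name, `mask_id`, and the -100 "ignore" label (the default `ignore_index` of PyTorch's cross-entropy loss) are assumptions for illustration.

```python
import random

MASK_PROB = 0.15  # fraction of positions selected for prediction (assumed BERT setting)
IGNORE = -100     # label for unselected positions (assumed loss "ignore" marker)


def dynamic_mask(token_ids, mask_id, vocab_size, rng, special_ids=frozenset()):
    """Return (inputs, labels) with a freshly sampled mask.

    Calling this each time a sequence is batched yields a different mask on
    every pass (dynamic masking) rather than one fixed mask (static masking).
    """
    inputs = list(token_ids)
    labels = [IGNORE] * len(inputs)
    for i, tok in enumerate(token_ids):
        if tok in special_ids or rng.random() >= MASK_PROB:
            continue  # special tokens and unselected positions stay as-is
        labels[i] = tok  # the model must predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id                     # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)   # 10%: random token
        # remaining 10%: keep the original token
    return inputs, labels


rng = random.Random(0)
sequence = list(range(10, 110))  # hypothetical token ids
epoch1 = dynamic_mask(sequence, mask_id=0, vocab_size=1000, rng=rng)
epoch2 = dynamic_mask(sequence, mask_id=0, vocab_size=1000, rng=rng)
print(epoch1[1] != epoch2[1])  # masks differ between passes
```

The only difference from static masking is *when* the function is called: inside the data loader on every pass, rather than once when the dataset is written to disk.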

Q. How to address overfitting in Transformer-based models when training on limited-domain data?

- Methodological Answer:

- Regularization Techniques: Apply dropout, weight decay, or layer dropout. BERT used a dropout probability of 0.1 on all layers, including the attention weights.

- Data Augmentation: Use back-translation (e.g., BART) or synonym replacement to expand training data.

- Early Stopping: Monitor validation loss and halt training when performance plateaus.
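Early stopping is usually implemented with a "patience" counter: training halts after a fixed number of validation checks without improvement. A minimal, framework-independent sketch; the class name, `patience`, and `min_delta` are illustrative choices, not a specific library's API.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` checks."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience    # checks to tolerate without improvement
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best = float("inf")
        self.bad_checks = 0

    def step(self, val_loss):
        """Record one validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


stopper = EarlyStopping(patience=3)
for epoch, val_loss in enumerate([0.9, 0.7, 0.65, 0.66, 0.67, 0.68, 0.5]):  # hypothetical losses
    if stopper.step(val_loss):
        print(f"stopping after epoch {epoch}; best loss {stopper.best}")
        break
```

With these hypothetical losses the loop stops after epoch 5, so the later drop to 0.5 is never seen. This trade-off is governed by `patience`; in practice the checkpoint with the best validation loss is also kept.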

Q. What methodologies validate the robustness of NLP models against adversarial attacks?

- Methodological Answer:

- Adversarial Training: Inject perturbed examples (e.g., typos, word substitutions) during training.

- Stress Testing: Evaluate models on challenge sets (e.g., ANLI for natural language inference).

- Gradient-Based Analysis: Use saliency maps to identify the input tokens, and in turn the model components, to which predictions are most sensitive.
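The typo perturbations mentioned under adversarial training and stress testing can be generated with a simple character-swap rule. A minimal sketch, assuming adjacent-letter swaps inside words of four or more characters, with the first and last characters kept fixed. The function name and rule are illustrative; real attack suites use richer perturbations.

```python
import random


def add_typos(text, rate=0.1, seed=0):
    """Return text with adjacent-character swaps injected into some words.

    Each word of length >= 4 is perturbed with probability `rate`; the swap
    never touches the word's first or last character.
    """
    rng = random.Random(seed)
    out = []
    for word in text.split(" "):
        if len(word) >= 4 and rng.random() < rate:
            i = rng.randrange(1, len(word) - 2)  # swap positions i and i + 1
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        out.append(word)
    return " ".join(out)


clean = "the model predicts labels"
print(add_typos(clean, rate=1.0, seed=1))
```

Pairing each perturbed sentence with its original label gives augmented training data; evaluating a trained model on the perturbed copies gives a simple stress test.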

Q. How to design ablation studies to isolate the impact of specific components in Transformer architectures?

- Methodological Answer:

- Component Removal: Sequentially disable modules (e.g., positional embeddings, multi-head attention) and measure performance drops.

- Controlled Reimplementation: Rebuild models while varying one hyperparameter at a time (e.g., number of layers or attention heads). Note that BERT-base and BERT-large differ in several hyperparameters at once, so comparing them alone does not isolate any single component.

- Statistical Reporting: Use ANOVA or t-tests to quantify the significance of each component’s contribution.
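For a single ablation compared across random seeds, a paired t-test is a natural choice. The sketch below computes only the t statistic with the standard library. The scores are hypothetical, and a p-value would come from a t-distribution (for example, `scipy.stats.ttest_rel` provides one). With 3 seeds (2 degrees of freedom), the two-sided critical value at α = 0.05 is about 4.303.

```python
import math
import statistics


def paired_t_statistic(full_scores, ablated_scores):
    """Paired t statistic for per-seed scores of the full vs. ablated model."""
    if len(full_scores) != len(ablated_scores) or len(full_scores) < 2:
        raise ValueError("need at least two paired runs")
    diffs = [f - a for f, a in zip(full_scores, ablated_scores)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences are constant; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


# Hypothetical accuracies from three seeds, with and without one component.
full = [0.90, 0.91, 0.89]
ablated = [0.85, 0.87, 0.86]
t = paired_t_statistic(full, ablated)
print(round(t, 2), abs(t) > 4.303)
```

Pairing by seed removes seed-to-seed variance that the full and ablated runs share, which makes the test more sensitive than an unpaired comparison.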


Disclaimer and Information on In-Vitro Research Products

Please be aware that all articles and product information presented on BenchChem are intended solely for informational purposes. The products available for purchase on BenchChem are specifically designed for in-vitro studies, which are conducted outside of living organisms. In-vitro studies, derived from the Latin term "in glass," involve experiments performed in controlled laboratory settings using cells or tissues. It is important to note that these products are not categorized as medicines or drugs, and they have not received approval from the FDA for the prevention, treatment, or cure of any medical condition, ailment, or disease. We must emphasize that any form of bodily introduction of these products into humans or animals is strictly prohibited by law. It is essential to adhere to these guidelines to ensure compliance with legal and ethical standards in research and experimentation.