Ratio


Properties

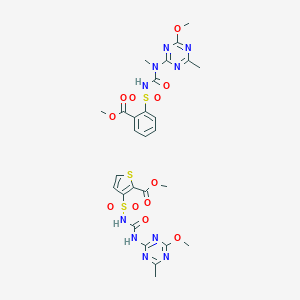

| CAS No. | 126100-42-3 |
|---|---|
| Molecular Formula | C27H30N10O12S3 |
| Molecular Weight | 782.8 g/mol |
| IUPAC Name | methyl 3-[(4-methoxy-6-methyl-1,3,5-triazin-2-yl)carbamoylsulfamoyl]thiophene-2-carboxylate;methyl 2-[[(4-methoxy-6-methyl-1,3,5-triazin-2-yl)-methylcarbamoyl]sulfamoyl]benzoate |
| InChI | InChI=1S/C15H17N5O6S.C12H13N5O6S2/c1-9-16-13(18-14(17-9)26-4)20(2)15(22)19-27(23,24)11-8-6-5-7-10(11)12(21)25-3;1-6-13-10(16-12(14-6)23-3)15-11(19)17-25(20,21)7-4-5-24-8(7)9(18)22-2/h5-8H,1-4H3,(H,19,22);4-5H,1-3H3,(H2,13,14,15,16,17,19) |
| InChI Key | LVICQXQUORHDFE-UHFFFAOYSA-N |
| SMILES | CC1=NC(=NC(=N1)OC)NC(=O)NS(=O)(=O)C2=C(SC=C2)C(=O)OC.CC1=NC(=NC(=N1)OC)N(C)C(=O)NS(=O)(=O)C2=CC=CC=C2C(=O)OC |
| Canonical SMILES | CC1=NC(=NC(=N1)OC)NC(=O)NS(=O)(=O)C2=C(SC=C2)C(=O)OC.CC1=NC(=NC(=N1)OC)N(C)C(=O)NS(=O)(=O)C2=CC=CC=C2C(=O)OC |
| Other CAS No. | 126100-42-3 |
| Synonyms | methyl 3-[(4-methoxy-6-methyl-1,3,5-triazin-2-yl)carbamoylsulfamoyl]thiophene-2-carboxylate, methyl 2-[[(4-methoxy-6-methyl-1,3,5-triazin-2-yl)-methyl-carbamoyl]sulfamoyl]benzoate |
| Origin of Product | United States |


Foundational Concepts of Scientific Ratios: An In-depth Technical Guide

Authored for Researchers, Scientists, and Drug Development Professionals

Abstract

In the quantitative landscape of scientific research and drug development, ratios serve as a cornerstone for data interpretation, hypothesis testing, and decision-making. A ratio, at its core, is a comparison of two quantities, providing a relative measure that can standardize data and reveal underlying biological and chemical relationships.[1][2][3] This technical guide delineates the foundational concepts of scientific ratios, their critical applications in experimental science, and detailed protocols for their determination. From dose-response relationships to synergistic drug interactions and enzyme kinetics, this paper provides a comprehensive overview for professionals in the field.

Introduction to Scientific Ratios

A scientific ratio is a mathematical expression that quantifies the relationship between two numbers.[3][4] In experimental sciences, these are not mere numerical exercises; they are potent tools that normalize data, enabling comparisons across different experiments, conditions, and scales.[2] For instance, by comparing a measured value to a control or a standard, ratios can elucidate the potency of a compound, the efficiency of a biological process, or the relative safety of a therapeutic agent.[5][6]

The utility of ratios is widespread, from the Body Mass Index (BMI) in health, which is the ratio of weight to the square of height, to the golden ratio observed in natural patterns.[1][7] In the context of drug development and biomedical research, specific ratios are indispensable for characterizing the activity and therapeutic potential of new chemical entities.

Key Scientific Ratios in Drug Development

The progression of a compound from initial discovery to a potential therapeutic involves the determination of several critical ratios. These ratios provide quantitative measures of a drug's efficacy, potency, and safety.

Ratios in Dose-Response Assessment: IC50 and EC50

A fundamental aspect of pharmacology is understanding how a drug's effect changes with its concentration. This relationship is typically represented by a dose-response curve, which is usually sigmoidal when the measured biological response is plotted against the logarithm of drug concentration.[8][9] Two key values derived from this curve are the half-maximal inhibitory concentration (IC50) and the half-maximal effective concentration (EC50).

- IC50 (Half-Maximal Inhibitory Concentration): This is the concentration of an inhibitor at which the response (e.g., enzyme activity, cell growth) is reduced by half.[10][11] A lower IC50 value indicates a more potent inhibitor.
- EC50 (Half-Maximal Effective Concentration): This is the concentration of a drug that induces a response halfway between the baseline and the maximum effect.[12][13] A lower EC50 value signifies greater potency.

These values are crucial for comparing the potency of different compounds and are central to structure-activity relationship (SAR) studies.[14]

Table 1: Comparison of IC50 and EC50

| Parameter | Definition | Application | Interpretation |
|---|---|---|---|
| IC50 | Concentration of an inhibitor that produces 50% inhibition of a biological response. | Quantifying the potency of an antagonist or inhibitor. | A lower IC50 indicates a more potent inhibitor. |
| EC50 | Concentration of a drug that produces 50% of the maximal response. | Quantifying the potency of an agonist or activator. | A lower EC50 indicates a more potent agonist. |

Therapeutic Index (TI): A Measure of Drug Safety

The Therapeutic Index (TI) is a critical ratio that provides a quantitative measure of a drug's safety margin.[5][6][15] It is the ratio of the dose that produces toxicity in 50% of the population (TD50) to the dose that produces a clinically desired or effective response in 50% of the population (ED50).[6][15][16]

Therapeutic Index (TI) = TD50 / ED50 [16]

A higher TI is desirable for a drug, as it indicates a wide margin between the effective dose and the toxic dose.[5][15] Drugs with a narrow therapeutic index require careful monitoring to avoid adverse effects.[6][15]
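The TI formula above can be illustrated with a minimal Python sketch. The function name and the dose values are hypothetical and chosen only to show a wide versus a narrow margin; they are not measurements for any real drug.

```python
def therapeutic_index(td50: float, ed50: float) -> float:
    """Return TI = TD50 / ED50; both doses must be in the same units."""
    if td50 <= 0 or ed50 <= 0:
        raise ValueError("TD50 and ED50 must be positive")
    return td50 / ed50


# Hypothetical doses in mg/kg, for illustration only.
wide_margin = therapeutic_index(td50=500.0, ed50=5.0)
narrow_margin = therapeutic_index(td50=12.0, ed50=6.0)
print(f"wide margin TI = {wide_margin:.1f}, narrow margin TI = {narrow_margin:.1f}")
```

Because the TI is a ratio of two doses, it is unitless as long as TD50 and ED50 are expressed in the same units.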

Table 2: Interpreting the Therapeutic Index

| Therapeutic Index | Safety Margin | Clinical Implication | Example Drugs |
|---|---|---|---|
| High TI | Wide | Generally considered safer; less need for intensive monitoring. | Penicillin |
| Low (Narrow) TI | Narrow | Higher risk of toxicity; requires careful dose titration and patient monitoring.[6][15] | Warfarin, Lithium[6] |

Ratios in Drug Combination Studies: The Combination Index (CI)

In many therapeutic areas, particularly oncology, drugs are often used in combination to enhance efficacy, overcome resistance, or reduce toxicity.[17][18] The Combination Index (CI) is a widely used method to quantify the nature of the interaction between two or more drugs.[1][18][19]

The CI is calculated based on the dose-effect relationships of the individual drugs and their combination.[18][19] The interpretation of the CI value is as follows:

- CI < 1: Synergistic effect (the combined effect is greater than the sum of the individual effects).
- CI = 1: Additive effect (the combined effect is equal to the sum of the individual effects).
- CI > 1: Antagonistic effect (the combined effect is less than the sum of the individual effects).

Table 3: Interpretation of Combination Index (CI) Values

| CI Value | Interaction Type | Description |
|---|---|---|
| < 1 | Synergy | The drugs work together to produce a greater effect than expected.[1][19] |
| = 1 | Additivity | The combined effect is the sum of the individual drug effects.[1][19] |
| > 1 | Antagonism | The drugs interfere with each other, resulting in a reduced overall effect.[1][19] |
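As a rough illustration of how a CI value arises, the sketch below uses the two-drug Chou-Talalay form, CI = d1/Dx1 + d2/Dx2, where Dx is the dose of each drug alone that produces the same fraction affected (fa), obtained from the median-effect equation Dx = Dm·(fa/(1−fa))^(1/m). The function names, the tolerance used to call a value "additive", and all numeric parameters are assumptions made for this example, not values from the text.

```python
import math


def dose_for_effect(fa: float, dm: float, m: float) -> float:
    """Single-drug dose giving fraction affected `fa` (median-effect equation).

    dm is the median-effect dose (e.g., the IC50) and m the slope parameter.
    """
    if not 0.0 < fa < 1.0:
        raise ValueError("fa must be strictly between 0 and 1")
    return dm * (fa / (1.0 - fa)) ** (1.0 / m)


def combination_index(d1, d2, fa, dm1, m1, dm2, m2) -> float:
    """CI for doses d1 and d2 that together produce fraction affected fa."""
    return d1 / dose_for_effect(fa, dm1, m1) + d2 / dose_for_effect(fa, dm2, m2)


def classify(ci: float, tol: float = 0.05) -> str:
    """Label a CI value; the tolerance band around 1 is an illustrative choice."""
    if math.isclose(ci, 1.0, abs_tol=tol):
        return "additive"
    return "synergistic" if ci < 1.0 else "antagonistic"


# Hypothetical single-agent parameters: Dm = 10 and 20 (same dose units), m = 1.
params = dict(fa=0.5, dm1=10.0, m1=1.0, dm2=20.0, m2=1.0)
for d1, d2 in [(2.5, 5.0), (5.0, 10.0), (10.0, 20.0)]:
    ci = combination_index(d1, d2, **params)
    print(f"d1={d1}, d2={d2}: CI={ci:.2f} ({classify(ci)})")
```

In this toy case, reaching 50% effect with a quarter of each single-agent IC50 gives CI = 0.5, while needing the full IC50 of both drugs gives CI = 2.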

Ratios in Enzyme Kinetics: The Michaelis-Menten Constant (Km)

Enzyme kinetics is the study of the rates of enzyme-catalyzed chemical reactions. The Michaelis-Menten equation describes the relationship between the initial reaction velocity (V₀), the maximum reaction velocity (Vmax), and the substrate concentration ([S]).[20][21] The Michaelis constant (Km) is a key parameter derived from this model.

Km is the substrate concentration at which the reaction rate is half of Vmax.[21] It is commonly used as an inverse measure of the substrate's affinity for the enzyme; a lower Km generally indicates a higher affinity.[21]

Table 4: Key Parameters in Michaelis-Menten Kinetics

| Parameter | Definition | Significance |
|---|---|---|
| Vmax | The maximum rate of the reaction when the enzyme is saturated with substrate.[21] | Reflects the catalytic capacity at a given enzyme concentration (Vmax = kcat × total enzyme concentration). |
| Km | The substrate concentration at which the reaction rate is half of Vmax.[21] | Represents the affinity of the enzyme for its substrate.[21] |
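The definition of Km can be checked numerically with the Michaelis-Menten equation, V₀ = Vmax·[S] / (Km + [S]). The short sketch below uses hypothetical parameter values (Vmax = 100, Km = 5, arbitrary units) purely for illustration.

```python
def michaelis_menten(s: float, vmax: float, km: float) -> float:
    """Initial velocity V0 at substrate concentration s."""
    return vmax * s / (km + s)


VMAX, KM = 100.0, 5.0  # hypothetical values in arbitrary units

# At [S] = Km the velocity is half of Vmax; at high [S] it approaches Vmax.
for s in (0.5, 5.0, 50.0, 500.0):
    v0 = michaelis_menten(s, VMAX, KM)
    print(f"[S] = {s:>6}: V0 = {v0:.1f} ({100 * v0 / VMAX:.0f}% of Vmax)")
```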

Experimental Protocols

The accurate determination of these scientific ratios is paramount. The following sections provide detailed methodologies for key experiments.

Protocol for Determining IC50/EC50 via Dose-Response Curve

This protocol outlines the steps for generating a dose-response curve and calculating the IC50 or EC50 value using a cell-based assay.

Materials:

- Cells in culture
- Test compound (inhibitor or activator)
- Appropriate cell culture medium and supplements
- 96-well microplates
- Reagent for assessing cell viability (e.g., MTT, resazurin)[2][22]
- Multichannel pipette
- Plate reader (spectrophotometer or fluorometer)
- Sterile phosphate-buffered saline (PBS)
- Dimethyl sulfoxide (DMSO) for compound dilution

Procedure:

- Cell Seeding:
  - Harvest and count cells in the logarithmic growth phase.
  - Dilute the cells to the desired concentration (e.g., 5,000-10,000 cells/well) in culture medium.
  - Seed 100 µL of the cell suspension into each well of a 96-well plate.
  - Incubate the plate for 24 hours to allow cells to attach.
- Compound Preparation and Addition:
  - Prepare a stock solution of the test compound in DMSO.
  - Perform a serial dilution of the compound to obtain a range of concentrations (typically 8-12 concentrations).
  - Add a small volume (e.g., 1 µL) of each compound concentration to the respective wells. Include vehicle control (DMSO only) and untreated control wells.
- Incubation:
  - Incubate the plate for a predetermined period (e.g., 48-72 hours) under standard cell culture conditions (37°C, 5% CO₂).
- Cell Viability Assay:
  - Add the viability reagent (e.g., 20 µL of MTT solution) to each well.
  - Incubate for the time specified by the reagent manufacturer (e.g., 2-4 hours for MTT).
  - If using MTT, add a solubilizing agent (e.g., 100 µL of DMSO) to dissolve the formazan crystals.
- Data Acquisition:
  - Measure the absorbance or fluorescence using a plate reader at the appropriate wavelength.
- Data Analysis:
  - Subtract the background reading (media only).
  - Normalize the data to the vehicle control (100% viability or activity) and a positive control for inhibition if available (0% viability).
  - Plot the normalized response versus the logarithm of the compound concentration.
  - Fit the data to a sigmoidal dose-response curve (variable slope) using a suitable software package (e.g., GraphPad Prism).[23][24]
  - The software will calculate the IC50 or EC50 value from the fitted curve.[11][23]

Diagram 1: Experimental Workflow for IC50/EC50 Determination

Caption: Workflow for determining IC50/EC50 values from a cell-based assay.
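The background-subtraction and normalization steps of the protocol above can be sketched in a few lines of Python. Note the swap of technique: instead of the sigmoidal curve fit named in the protocol, this sketch estimates the IC50 by log-linear interpolation between the two data points that bracket 50%, which is only a quick sanity check. All readings, concentrations, and function names are hypothetical.

```python
import math


def normalize(raw, background, vehicle_mean):
    """Convert raw readings to percent of vehicle control after background subtraction."""
    span = vehicle_mean - background
    return [100.0 * (r - background) / span for r in raw]


def interpolate_ic50(concs, responses, level=50.0):
    """Estimate the concentration where the response crosses `level`.

    Assumes concentrations are ascending and the response decreases with dose.
    Interpolates linearly in log10(concentration) between the bracketing points.
    """
    points = list(zip(concs, responses))
    for (c_lo, r_lo), (c_hi, r_hi) in zip(points, points[1:]):
        if r_lo >= level >= r_hi and r_lo != r_hi:
            frac = (r_lo - level) / (r_lo - r_hi)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10.0 ** log_ic50
    raise ValueError("responses do not cross the requested level")


# Hypothetical absorbance readings (one mean value per concentration).
concs_um = [0.01, 0.1, 1.0, 10.0, 100.0]   # µM
raw_abs = [1.00, 0.85, 0.65, 0.25, 0.10]
viability = normalize(raw_abs, background=0.05, vehicle_mean=1.05)
ic50 = interpolate_ic50(concs_um, viability)
print([round(v, 1) for v in viability], f"IC50 ~ {ic50:.2f} µM")
```

An interpolated estimate depends heavily on the spacing of the two bracketing concentrations, which is one reason a fitted curve is preferred for reported values.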

Protocol for Drug Combination Synergy Assay (Combination Index Method)

This protocol describes how to assess the interaction between two drugs using the Combination Index (CI) method.

Materials:

- Same as for IC50/EC50 determination, but with two test compounds.

Procedure:

- Determine Individual IC50s:
  - First, perform dose-response experiments for each drug individually to determine their respective IC50 values as described in the IC50/EC50 protocol above.
- Design Combination Matrix:
  - Prepare serial dilutions of each drug.
  - In a 96-well plate, create a matrix of drug combinations. This can be a checkerboard layout where concentrations of Drug A vary along the rows and concentrations of Drug B vary along the columns.[25]
  - Include wells with each drug alone and vehicle control wells.
- Cell Seeding and Treatment:
  - Seed cells as in the IC50/EC50 protocol above.
  - Add the drug combinations to the appropriate wells.
- Incubation and Viability Assay:
  - Follow the incubation and cell viability assay steps as in the IC50/EC50 protocol above.
- Data Analysis:
  - Normalize the data for each drug combination.
  - Use specialized software (e.g., CompuSyn) that employs the Chou-Talalay method to calculate the Combination Index (CI) for each combination.[18][19]
  - The software will generate CI values, Fa-CI plots (fraction affected vs. CI), and isobolograms to visualize the drug interaction.

Diagram 2: Logical Flow for Drug Synergy Analysis

Caption: Logical workflow for determining drug synergy using the Combination Index method.
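The checkerboard layout described in the protocol above can be generated programmatically before pipetting. The sketch below is one possible arrangement under assumed conditions: a 2-fold dilution series for each drug, a zero-dose row and column to provide the single-agent and vehicle wells, and hypothetical top concentrations.

```python
def serial_dilution(top: float, factor: float, n: int):
    """Return n concentrations starting at `top`, each divided by `factor`."""
    return [top / factor ** i for i in range(n)]


def checkerboard(doses_a, doses_b):
    """Build a dose matrix: Drug A varies by row, Drug B by column.

    A zero dose is prepended to each axis, so row 0 holds Drug B alone,
    column 0 holds Drug A alone, and cell (0, 0) is the vehicle control.
    """
    rows = [0.0] + sorted(doses_a)
    cols = [0.0] + sorted(doses_b)
    return [[(a, b) for b in cols] for a in rows]


# Hypothetical top concentrations (µM); 4 doses each gives a 5 x 5 block,
# which fits within the 8 x 12 grid of a 96-well plate.
plate = checkerboard(serial_dilution(8.0, 2.0, 4), serial_dilution(40.0, 2.0, 4))
for row in plate:
    print("  ".join(f"A={a:g}/B={b:g}" for a, b in row))
```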

Protocol for Determining Michaelis-Menten Parameters (Km and Vmax)

This protocol outlines a general procedure for determining the Km and Vmax of an enzyme.

Materials:

- Purified enzyme
- Substrate
- Reaction buffer
- Spectrophotometer
- Cuvettes
- Stop solution (if necessary)

Procedure:

- Preliminary Assays:
  - Determine the optimal conditions for the enzyme assay (pH, temperature, buffer composition).
  - Establish a time course for the reaction to ensure initial velocity is measured (the linear phase of product formation over time).
- Substrate Dilutions:
  - Prepare a series of substrate concentrations in the reaction buffer.
- Enzyme Reaction:
  - In a cuvette, mix the reaction buffer and a specific substrate concentration.
  - Initiate the reaction by adding a fixed amount of the enzyme.
  - Immediately place the cuvette in the spectrophotometer and monitor the change in absorbance over time at a wavelength where the product absorbs light.
- Measure Initial Velocities (V₀):
  - For each substrate concentration, calculate the initial velocity (V₀) from the linear portion of the absorbance vs. time plot.
- Data Analysis:
  - Plot the initial velocities (V₀) against the corresponding substrate concentrations ([S]).
  - Fit the data to the Michaelis-Menten equation using non-linear regression software (e.g., GraphPad Prism).
  - The software will provide the values for Vmax and Km.
  - Alternatively, a Lineweaver-Burk plot (1/V₀ vs. 1/[S]) can be used for a linear representation of the data, though non-linear regression is generally more accurate.[26][27]

Diagram 3: Michaelis-Menten to Lineweaver-Burk Transformation

Caption: Data analysis pathway for determining enzyme kinetic parameters.
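The Lineweaver-Burk alternative mentioned in the protocol above rests on the linear form 1/V₀ = (Km/Vmax)·(1/[S]) + 1/Vmax, so the slope is Km/Vmax and the y-intercept is 1/Vmax. The sketch below fits that line by ordinary least squares using only the standard library. The data are synthetic and noise-free, generated from assumed values (Vmax = 100, Km = 5), so the fit recovers them almost exactly; with real, noisy data the reciprocal transform exaggerates errors at low [S].

```python
def lineweaver_burk(substrate, velocity):
    """Estimate (Km, Vmax) from a least-squares line through (1/[S], 1/V0)."""
    x = [1.0 / s for s in substrate]
    y = [1.0 / v for v in velocity]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx                    # Km / Vmax
    intercept = mean_y - slope * mean_x  # 1 / Vmax
    vmax = 1.0 / intercept
    km = slope * vmax
    return km, vmax


# Synthetic, noise-free velocities from hypothetical Vmax = 100 and Km = 5.
substrate = [1.0, 2.0, 5.0, 10.0, 20.0, 50.0]
velocity = [100.0 * s / (5.0 + s) for s in substrate]
km_est, vmax_est = lineweaver_burk(substrate, velocity)
print(f"Km ~ {km_est:.3f}, Vmax ~ {vmax_est:.3f}")
```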

Conclusion

Scientific ratios are fundamental to the quantitative rigor of research and drug development. They provide a standardized language for comparing results, evaluating the potency and safety of new compounds, and understanding complex biological systems. A thorough understanding of how to experimentally determine and interpret key ratios such as IC50, EC50, Therapeutic Index, Combination Index, and Km is essential for any scientist in this field. The protocols and conceptual frameworks presented in this guide offer a robust foundation for the application of scientific ratios in a laboratory setting. Adherence to meticulous experimental design and data analysis will ensure the generation of reliable and reproducible results, ultimately driving progress in the development of new and effective therapies.

References

- 1. researchgate.net [researchgate.net]

- 2. creative-bioarray.com [creative-bioarray.com]

- 3. Therapeutic index - Wikipedia [en.wikipedia.org]

- 4. 2.10. Drug Combination Test and Synergy Calculations [bio-protocol.org]

- 5. Canadian Society of Pharmacology and Therapeutics (CSPT) - Therapeutic Index [pharmacologycanada.org]

- 6. What is the therapeutic index of drugs? [medicalnewstoday.com]

- 7. austinpublishinggroup.com [austinpublishinggroup.com]

- 8. news-medical.net [news-medical.net]

- 9. Dose–response relationship - Wikipedia [en.wikipedia.org]

- 10. researchgate.net [researchgate.net]

- 11. Star Republic: Guide for Biologists [sciencegateway.org]

- 12. researchgate.net [researchgate.net]

- 13. EC50 - Wikipedia [en.wikipedia.org]

- 14. pubs.acs.org [pubs.acs.org]

- 15. buzzrx.com [buzzrx.com]

- 16. studysmarter.co.uk [studysmarter.co.uk]

- 17. Assessment of Cell Viability in Drug Therapy: IC50 and Other New Time-Independent Indices for Evaluating Chemotherapy Efficacy - PMC [pmc.ncbi.nlm.nih.gov]

- 18. aacrjournals.org [aacrjournals.org]

- 19. Synergistic combination of microtubule targeting anticancer fludelone with cytoprotective panaxytriol derived from panax ginseng against MX-1 cells in vitro: experimental design and data analysis using the combination index method - PMC [pmc.ncbi.nlm.nih.gov]

- 20. researchgate.net [researchgate.net]

- 21. Enzyme Kinetics (Michaelis-Menten plot, Line-Weaver Burke plot) Enzyme Inhibitors with Examples | Pharmaguideline [pharmaguideline.com]

- 22. EC50 analysis - Alsford Lab [blogs.lshtm.ac.uk]

- 23. researchgate.net [researchgate.net]

- 24. How Do I Perform a Dose-Response Experiment? - FAQ 2188 - GraphPad [graphpad.com]

- 25. Diagonal Method to Measure Synergy Among Any Number of Drugs - PMC [pmc.ncbi.nlm.nih.gov]

- 26. Experimental Enzyme Kinetics; Linear Plots and Enzyme Inhibition – BIOC*2580: Introduction to Biochemistry [ecampusontario.pressbooks.pub]

- 27. teachmephysiology.com [teachmephysiology.com]

Whitepaper: Leveraging Exploratory Analysis Using Ratios in Scientific Research

Audience: Researchers, Scientists, and Drug Development Professionals

This guide provides an in-depth look at the application of exploratory data analysis (EDA) using ratios in scientific research, with a particular focus on drug development and molecular biology. Ratios are a powerful tool in a researcher's arsenal, providing a means to normalize data, compare results across experiments, and uncover underlying biological relationships.

The Foundation: Exploratory Data Analysis and Ratio Data

Exploratory Data Analysis (EDA) is an approach to analyzing data sets to summarize their main characteristics, often using visual methods and descriptive statistics.[1][2][3] It is a critical first step that precedes formal hypothesis testing, allowing researchers to gain intuition about the data's structure, identify anomalies, and formulate hypotheses.[1][2]

At the core of many EDA techniques in the sciences is the use of ratio data. A ratio scale is a quantitative scale that has a true, meaningful zero point and equal intervals between neighboring points.[4][5][6] This "true zero" signifies the complete absence of the variable being measured (e.g., zero mass, zero concentration).[5][6] This property allows for all arithmetic operations, including multiplication and division, making statements like "twice as much" or "half the activity" meaningful.[4][5][7]

Applications of Ratio Analysis in Research and Drug Development

Ratios are ubiquitous in biological and preclinical research for their ability to standardize results and reveal comparative insights.

Dose-Response Relationships in Pharmacology

The dose-response relationship is fundamental to pharmacology, describing the magnitude of a response to a stimulus or stressor.[8] Dose-response curves, which are typically sigmoidal, are used to derive several critical ratios:

- EC₅₀ (Half Maximal Effective Concentration): The concentration of a drug that gives half of the maximal response. It is a primary measure of a drug's potency.[8]
- IC₅₀ (Half Maximal Inhibitory Concentration): The concentration of an inhibitor that is required for 50% inhibition of a biological or biochemical function.
- LD₅₀ (Median Lethal Dose): The dose of a substance required to kill half the members of a tested population.
- Therapeutic Index (TI): The ratio of the minimum toxic concentration to the median effective concentration (e.g., LD₅₀ / ED₅₀).[9] A higher TI indicates a safer drug.[10]

These ratios allow for the comparison of potency and efficacy between different compounds, guiding lead selection in drug discovery.[11]

Assay Quality and Performance

In high-throughput screening and other biochemical assays, ratios are essential for validating the quality and robustness of an experiment.

- Signal-to-Background (S/B) Ratio: Compares the mean signal of a positive control to the mean signal of a negative (background) control.[12]
- Signal-to-Noise (S/N) Ratio: Measures the confidence that a signal is real by comparing it to the variability of the background noise.[12]
- Z-factor: A statistical measure that accounts for the variability in both positive and negative controls to determine assay quality.
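The three assay-quality metrics above can be computed from control wells with Python's standard `statistics` module. The formulas used here are the commonly cited ones and are stated as assumptions: S/B = mean(pos)/mean(neg), S/N = (mean(pos) − mean(neg))/SD(neg), and the control-based Z-factor (often written Z′) = 1 − 3·(SD(pos) + SD(neg))/|mean(pos) − mean(neg)|. The control readings are hypothetical.

```python
from statistics import mean, stdev


def signal_to_background(pos, neg):
    """Mean positive-control signal divided by mean background signal."""
    return mean(pos) / mean(neg)


def signal_to_noise(pos, neg):
    """Signal above background, relative to the background's standard deviation."""
    return (mean(pos) - mean(neg)) / stdev(neg)


def z_factor_controls(pos, neg):
    """Z-factor computed from positive and negative controls (Z')."""
    return 1.0 - 3.0 * (stdev(pos) + stdev(neg)) / abs(mean(pos) - mean(neg))


# Hypothetical plate-reader counts for control wells.
positive = [980, 1010, 1000, 1020, 990]
negative = [95, 105, 100, 110, 90]

sb = signal_to_background(positive, negative)
sn = signal_to_noise(positive, negative)
z = z_factor_controls(positive, negative)
print(f"S/B = {sb:.1f}, S/N = {sn:.1f}, Z' = {z:.2f}")
```

A Z′ between 0.5 and 1 is conventionally taken to indicate an excellent assay window, whereas S/B alone says nothing about well-to-well variability.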

Relative Quantification in Molecular Biology

Ratios are the standard for reporting changes in gene and protein expression, as they normalize the data to a stable reference.

- Fold Change: A ratio that describes how much a quantity changes between an experimental condition and a control. For example, a 2-fold increase in gene expression means the experimental value is twice the control value.
- Protein Quantification: In techniques like Western Blotting, the intensity of the protein of interest is divided by the intensity of a loading control (e.g., a housekeeping protein like GAPDH or β-actin) to correct for variations in sample loading.
- Tumor-to-Muscle (T/M) Ratio: In preclinical imaging studies (e.g., PET scans), this ratio quantifies the uptake of a labeled probe in tumor tissue relative to background muscle tissue, indicating target engagement.[13]

Signaling Pathway Analysis

To understand the activation state of a signaling pathway, researchers often measure the ratio of a modified protein to the total amount of that protein.

- Phospho-Protein to Total Protein Ratio: The ratio of a phosphorylated (activated) protein to the total amount of that protein indicates the degree of pathway activation in response to a stimulus. This is a cornerstone of cell signaling research.

Data Presentation: Summarizing Ratio-Based Data

Clear presentation of quantitative data is crucial. Tables should be structured to facilitate easy comparison.

Table 1: Dose-Response Characteristics of Investigational Compounds

| Compound | EC₅₀ (nM) | IC₅₀ (nM) | Therapeutic Index |
|---|---|---|---|
| Drug A | 15.2 | 120.5 | 7.9 |
| Drug B | 45.7 | 850.1 | 18.6 |
| Drug C | 8.1 | 95.3 | 11.8 |

Table 2: Relative Protein Expression in Response to Drug A Treatment

| Target Protein | Condition | Protein Band Intensity (Arbitrary Units) | Loading Control (GAPDH) Intensity | Normalized Ratio (Target/GAPDH) | Fold Change (vs. Control) |
|---|---|---|---|---|---|
| Protein X | Control (Vehicle) | 11050 | 35200 | 0.31 | 1.0 |
| Protein X | Drug A (100 nM) | 29850 | 34900 | 0.86 | 2.72 |
| Protein Y | Control (Vehicle) | 45300 | 35200 | 1.29 | 1.0 |
| Protein Y | Drug A (100 nM) | 12100 | 34900 | 0.35 | 0.27 |
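The arithmetic behind Table 2 can be reproduced with a short Python sketch using the band intensities listed there; the function and variable names are illustrative. Because it divides the unrounded ratios, the Protein X fold change comes out near 2.72, whereas dividing the two-decimal ratios (0.86 / 0.31) would give about 2.77, so it is safer to round only at the reporting step.

```python
def normalized_ratio(target_intensity: float, loading_intensity: float) -> float:
    """Target band intensity divided by its loading-control band intensity."""
    return target_intensity / loading_intensity


def fold_change(treated_ratio: float, control_ratio: float) -> float:
    """Normalized ratio of the treated sample relative to the control sample."""
    return treated_ratio / control_ratio


# Band intensities from Table 2: (target, GAPDH) per condition.
blots = {
    "Protein X": {"control": (11050, 35200), "treated": (29850, 34900)},
    "Protein Y": {"control": (45300, 35200), "treated": (12100, 34900)},
}

results = {}
for protein, lanes in blots.items():
    ctrl = normalized_ratio(*lanes["control"])
    trt = normalized_ratio(*lanes["treated"])
    results[protein] = (ctrl, trt, fold_change(trt, ctrl))
    print(f"{protein}: control={ctrl:.2f}, treated={trt:.2f}, fold change={results[protein][2]:.2f}")
```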

Experimental Protocols

Detailed methodologies are essential for reproducibility. Below are protocols for two key experimental techniques that rely heavily on ratio analysis.

Protocol: Western Blot for Relative Protein Quantification

- Protein Extraction: Lyse cultured cells or homogenized tissue in RIPA buffer containing protease and phosphatase inhibitors.
- Protein Quantification: Determine the protein concentration of each lysate using a BCA or Bradford assay.
- Sample Preparation: Normalize all samples to the same concentration (e.g., 1 µg/µL) with lysis buffer and Laemmli sample buffer. Denature samples by heating at 95°C for 5 minutes.
- SDS-PAGE: Load equal amounts of total protein (e.g., 20 µg) per lane onto a polyacrylamide gel. Run the gel to separate proteins by size.
- Protein Transfer: Transfer the separated proteins from the gel to a PVDF or nitrocellulose membrane.
- Blocking: Block the membrane with 5% non-fat milk or Bovine Serum Albumin (BSA) in Tris-Buffered Saline with Tween 20 (TBST) for 1 hour at room temperature to prevent non-specific antibody binding.
- Primary Antibody Incubation: Incubate the membrane with primary antibodies diluted in blocking buffer overnight at 4°C. Use antibodies specific for the target protein and a loading control (e.g., anti-GAPDH).
- Washing: Wash the membrane three times with TBST for 10 minutes each.
- Secondary Antibody Incubation: Incubate the membrane with a horseradish peroxidase (HRP)-conjugated secondary antibody for 1 hour at room temperature.
- Signal Detection: Add an enhanced chemiluminescence (ECL) substrate to the membrane and capture the signal using a digital imager.
- Densitometry Analysis: Quantify the band intensities for the target protein and the loading control using image analysis software (e.g., ImageJ).
- Ratio Calculation: For each sample, divide the intensity of the target protein band by the intensity of its corresponding loading control band to get the normalized ratio.

Protocol: Cell Viability Assay for IC₅₀ Determination

- Cell Plating: Seed cells in a 96-well plate at a predetermined density and allow them to adhere overnight.
- Compound Dilution: Prepare a serial dilution of the test compound in cell culture medium. Typically, a 10-point, 3-fold dilution series is used.
- Treatment: Remove the old medium from the cells and add the medium containing the various concentrations of the compound. Include vehicle-only wells as a negative control and a cytotoxic agent (e.g., staurosporine) as a positive control.
- Incubation: Incubate the plate for a specified period (e.g., 72 hours).
- Viability Reagent Addition: Add a viability reagent (e.g., CellTiter-Glo®, which measures ATP, or a resazurin-based reagent) to each well according to the manufacturer's instructions.
- Signal Measurement: After a short incubation, measure the luminescent or fluorescent signal using a plate reader.
- Data Normalization:
  - Average the signal from the vehicle-only wells (this is your 100% viability signal).
  - Average the signal from a "no cells" or positive control well (this is your 0% viability signal).
  - Normalize the data for each well as a percentage of the vehicle control.
- IC₅₀ Calculation: Plot the normalized response (Y-axis) against the log of the compound concentration (X-axis). Fit the data to a four-parameter logistic (4PL) non-linear regression model to determine the IC₅₀ value.
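To make the 4PL step concrete, the sketch below writes out the four-parameter logistic model for an inhibition curve, y = bottom + (top − bottom) / (1 + (x / IC50)^hill), and estimates IC50 and Hill slope with a coarse least-squares grid search. Two simplifications are assumed and should be read as such: the grid search stands in for the non-linear regression the protocol calls for, and top and bottom are fixed at 100% and 0%, leaving two free parameters. The data are synthetic, generated from a hypothetical IC50 of 0.3 µM and Hill slope of 1.2 on a 10-point, 3-fold dilution series.

```python
import math


def four_pl(x, bottom, top, ic50, hill):
    """Four-parameter logistic response for a decreasing (inhibition) curve."""
    return bottom + (top - bottom) / (1.0 + (x / ic50) ** hill)


def fit_ic50_grid(concs, responses, bottom=0.0, top=100.0):
    """Coarse grid search minimizing the sum of squared errors.

    Scans log10(IC50) across the tested concentration range (201 steps)
    and Hill slopes from 0.1 to 4.0 in steps of 0.1.
    """
    lo, hi = math.log10(min(concs)), math.log10(max(concs))
    best = None
    for i in range(201):
        ic50 = 10.0 ** (lo + (hi - lo) * i / 200)
        for j in range(1, 41):
            hill = 0.1 * j
            sse = sum((r - four_pl(c, bottom, top, ic50, hill)) ** 2
                      for c, r in zip(concs, responses))
            if best is None or sse < best[0]:
                best = (sse, ic50, hill)
    return best[1], best[2]


# 10-point, 3-fold dilution series starting at 10 µM (hypothetical).
concs = [10.0 / 3 ** i for i in range(10)]
responses = [four_pl(c, 0.0, 100.0, 0.3, 1.2) for c in concs]
ic50_est, hill_est = fit_ic50_grid(concs, responses)
print(f"IC50 ~ {ic50_est:.3f} µM, Hill slope ~ {hill_est:.1f}")
```

The resolution of the estimate is limited by the grid spacing, so a dedicated curve-fitting routine remains the better choice for reported values.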

Visualizations

Diagrams are indispensable for illustrating complex workflows, pathways, and relationships.

Caption: Workflow for relative protein quantification using Western Blot.

Caption: Ratio analysis of protein phosphorylation in a signaling pathway.

Caption: Logical workflow for determining an EC50 or IC50 value.

Statistical Analysis of Ratio Data

Because ratio data are quantitative with a true zero, they are compatible with a wide range of statistical tests.[4][5][7][14]

- Descriptive Statistics: Mean, median, mode, standard deviation, and variance can all be calculated to summarize the data.[4][5][14]
- Inferential Statistics: Parametric tests are generally preferred for normally distributed ratio data.[6]
  - T-tests: Used to compare the means of two groups (e.g., comparing the normalized protein expression between a control and a treated group).[4][7]
  - ANOVA (Analysis of Variance): Used to compare the means of three or more groups (e.g., comparing the effect of multiple drug concentrations on cell viability).[4][7]
  - Regression Analysis: Used to model the relationship between variables, such as in dose-response curve fitting.[4][7]
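As an illustration of the two-group comparison described above, the sketch below computes a Welch (unequal-variance) t statistic and its approximate degrees of freedom for normalized protein ratios, using only the standard library. The choice of the Welch variant and the replicate values are assumptions made for this example. Converting the statistic to a p-value requires the t-distribution, which a statistics package would normally supply (for example, `scipy.stats.ttest_ind` with `equal_var=False`).

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    va = variance(group_a) / len(group_a)  # squared standard error of mean A
    vb = variance(group_b) / len(group_b)  # squared standard error of mean B
    t = (mean(group_a) - mean(group_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(group_a) - 1) + vb ** 2 / (len(group_b) - 1))
    return t, df


# Hypothetical normalized ratios (target/GAPDH) from four replicate blots.
control = [0.30, 0.33, 0.31, 0.32]
treated = [0.84, 0.88, 0.86, 0.85]

t_stat, dof = welch_t(treated, control)
print(f"t = {t_stat:.1f}, df ~ {dof:.1f}")
```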

Conclusion

Exploratory analysis using ratios is an indispensable practice in modern research and drug development. Ratios provide a robust framework for normalizing complex biological data, enabling meaningful comparisons of assay performance, compound potency, and changes in molecular activity. By integrating ratio-based analysis with sound experimental design and appropriate statistical methods, researchers can enhance the reliability of their findings and accelerate the pace of scientific discovery.

References

- 1. medium.com [medium.com]

- 2. Exploratory data analysis of a clinical study group: Development of a procedure for exploring multidimensional data - PMC [pmc.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

- 4. chi2innovations.com [chi2innovations.com]

- 5. statisticalaid.com [statisticalaid.com]

- 6. What Is Ratio Data? | Examples & Definition [scribbr.co.uk]

- 7. proprofssurvey.com [proprofssurvey.com]

- 8. Dose–response relationship - Wikipedia [en.wikipedia.org]

- 9. Dose-Response Relationships - Clinical Pharmacology - MSD Manual Professional Edition [msdmanuals.com]

- 10. youtube.com [youtube.com]

- 11. How do dose-response relationships guide dosing decisions? [synapse.patsnap.com]

- 12. What Metrics Are Used to Assess Assay Quality? – BIT 479/579 High-throughput Discovery [htds.wordpress.ncsu.edu]

- 13. pubs.acs.org [pubs.acs.org]

- 14. researchprospect.com [researchprospect.com]

The Cornerstone of Chemical Synthesis: An In-depth Guide to Stoichiometric Ratios for Researchers and Drug Development Professionals

In the precise and exacting world of chemical research and pharmaceutical development, the concept of stoichiometry is paramount. It is the quantitative bedrock upon which all chemical reactions are understood, controlled, and optimized. This technical guide provides a comprehensive exploration of stoichiometric ratios, detailing their theoretical underpinnings, experimental determination, and critical applications in the synthesis of active pharmaceutical ingredients (APIs) and the elucidation of biological pathways.

Core Principles of Stoichiometry

Stoichiometry is the branch of chemistry that deals with the quantitative relationships between reactants and products in a chemical reaction.[1][2] It is founded on the law of conservation of mass, which dictates that in a chemical reaction, matter is neither created nor destroyed.[3] Consequently, the total mass of the reactants must equal the total mass of the products.[4] This fundamental principle allows for the precise calculation of the amounts of substances consumed and produced in chemical reactions.

At the heart of stoichiometric calculations is the mole, the SI unit for the amount of a substance. One mole contains Avogadro's number of entities (approximately 6.022 × 10²³). The molar mass of a substance, expressed in grams per mole (g/mol), provides the crucial link between the macroscopic mass of a substance and the number of moles.

A balanced chemical equation is the essential starting point for all stoichiometric calculations. The coefficients in a balanced equation represent the molar ratios of reactants and products.[1][5] For example, in the synthesis of water from hydrogen and oxygen:

2H₂ + O₂ → 2H₂O

This equation indicates that two moles of hydrogen gas react with one mole of oxygen gas to produce two moles of water. This 2:1:2 ratio is the stoichiometric ratio of the reaction.
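A mass-to-mole-to-mass calculation for this reaction can be sketched as follows. The molar masses are standard rounded values, and the starting mass of hydrogen is a hypothetical example.

```python
# Rounded molar masses in g/mol.
M_H2, M_O2, M_H2O = 2.016, 31.998, 18.015


def water_from_hydrogen(mass_h2_g: float):
    """Return (mass of H2O formed, mass of O2 consumed) for 2 H2 + O2 -> 2 H2O."""
    mol_h2 = mass_h2_g / M_H2
    mol_h2o = mol_h2 * (2 / 2)  # 2 mol H2O per 2 mol H2
    mol_o2 = mol_h2 * (1 / 2)   # 1 mol O2 per 2 mol H2
    return mol_h2o * M_H2O, mol_o2 * M_O2


mass_h2 = 4.032  # g, i.e. 2 mol of H2 (hypothetical amount)
mass_h2o, mass_o2 = water_from_hydrogen(mass_h2)
print(f"{mass_h2} g H2 + {mass_o2:.3f} g O2 -> {mass_h2o:.3f} g H2O")
```

The reactant masses sum to the product mass, which is the law of conservation of mass expressed through the stoichiometric ratio.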

Applications in Drug Development and Synthesis

In the pharmaceutical industry, the precise control of stoichiometric ratios is critical for the efficient, safe, and cost-effective synthesis of APIs.[6][7] Accurate stoichiometry ensures the maximum yield of the desired product while minimizing the formation of impurities and byproducts, which can be difficult and costly to remove.[7]

Key applications include:

- API Synthesis: Ensuring the optimal ratio of starting materials and reagents to maximize the yield and purity of the final drug substance.[8][9]
- Quality Control: Verifying the composition and purity of raw materials, intermediates, and final products.[6]
- Formulation Development: Determining the precise amounts of active ingredients and excipients in a final drug product.[10]

Quantitative Data in Pharmaceutical Synthesis

The following tables summarize stoichiometric data for the synthesis of two widely used pharmaceutical drugs, Aspirin and Atorvastatin.

Table 1: Stoichiometric Data for the Synthesis of Aspirin

| Reactant/Product | Chemical Formula | Molar Mass (g/mol) | Stoichiometric Ratio | Moles | Mass (g) |
|---|---|---|---|---|---|
| Salicylic Acid | C₇H₆O₃ | 138.12 | 1 | 1.00 | 138.12 |
| Acetic Anhydride | C₄H₆O₃ | 102.09 | 1 | 1.00 | 102.09 |
| Products | | | | | |
| Aspirin | C₉H₈O₄ | 180.16 | 1 | 1.00 | 180.16 |
| Acetic Acid | C₂H₄O₂ | 60.05 | 1 | 1.00 | 60.05 |

This table illustrates a 1:1 stoichiometric relationship between the reactants, salicylic acid and acetic anhydride, in the synthesis of aspirin.[10]
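Because the reactants combine 1:1, whichever one is present in the smaller molar amount limits the yield. The sketch below uses the molar masses from Table 1; the function name and the weighed amounts (2.00 g and 5.00 g) are hypothetical values chosen for illustration.

```python
# Molar masses in g/mol, taken from Table 1.
MOLAR_MASS = {
    "salicylic_acid": 138.12,
    "acetic_anhydride": 102.09,
    "aspirin": 180.16,
}


def theoretical_yield_aspirin(salicylic_acid_g: float, acetic_anhydride_g: float):
    """Return (limiting reagent, theoretical aspirin mass in g) for the 1:1 reaction."""
    moles = {
        "salicylic_acid": salicylic_acid_g / MOLAR_MASS["salicylic_acid"],
        "acetic_anhydride": acetic_anhydride_g / MOLAR_MASS["acetic_anhydride"],
    }
    # With 1:1 stoichiometry the reactant with fewer moles is limiting.
    limiting = min(moles, key=moles.get)
    return limiting, moles[limiting] * MOLAR_MASS["aspirin"]


# Hypothetical charge: 2.00 g salicylic acid with 5.00 g acetic anhydride.
limiting, yield_g = theoretical_yield_aspirin(2.00, 5.00)
```

Here salicylic acid is limiting and the theoretical yield is about 2.61 g of aspirin; the percent yield of a real run would be the isolated mass divided by this figure.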

Table 2: Stoichiometric Data for a Key Step in Atorvastatin Synthesis (Paal-Knorr Pyrrole Synthesis)

| Reactant/Product | Role | Molar Mass (g/mol) | Stoichiometric Ratio | Moles | Mass (g) |
|---|---|---|---|---|---|
| 1,4-Diketone Intermediate | Reactant | (Varies) | 1 | 1.00 | (Varies) |
| Primary Amine Intermediate | Reactant | (Varies) | 1 | 1.00 | (Varies) |
| Atorvastatin Precursor | Product | (Varies) | 1 | 1.00 | (Varies) |

The Paal-Knorr synthesis of the pyrrole core of Atorvastatin involves a 1:1 condensation of a 1,4-diketone and a primary amine.[11][12] The exact masses will depend on the specific intermediates used in the chosen synthetic route.

Experimental Determination of Stoichiometric Ratios

Several experimental techniques are employed to determine the stoichiometric ratios of chemical reactions. These methods are crucial for characterizing new reactions and for quality control in manufacturing processes.

Gravimetric Analysis

Gravimetric analysis is a quantitative method that involves determining the amount of a substance by weighing.[13] A common application is the determination of the stoichiometry of a precipitation reaction.

Experimental Protocol: Gravimetric Determination of Chloride Ion Stoichiometry

- Sample Preparation: Accurately weigh a sample of a soluble chloride salt and dissolve it in deionized water.
- Precipitation: Add a solution of silver nitrate (AgNO₃) in excess to the chloride solution. This will precipitate the chloride ions as silver chloride (AgCl), which is insoluble. The reaction is: Ag⁺(aq) + Cl⁻(aq) → AgCl(s).
- Digestion: Gently heat the solution containing the precipitate. This process, known as digestion, encourages the formation of larger, more easily filterable crystals.
- Filtration: Carefully filter the precipitate from the solution using a pre-weighed sintered glass crucible.
- Washing: Wash the precipitate with a small amount of dilute nitric acid to remove any co-precipitated impurities, followed by a final wash with a small amount of deionized water.
- Drying: Dry the crucible containing the precipitate in an oven at a specific temperature until a constant weight is achieved.
- Weighing: After cooling in a desiccator, accurately weigh the crucible and the dried precipitate.
- Calculation: From the mass of the AgCl precipitate, the moles of AgCl can be calculated. Based on the 1:1 stoichiometry of the reaction, the moles of chloride ions in the original sample can be determined.
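The final calculation step can be sketched as follows. The molar masses are rounded standard values, and the sample masses (0.1169 g of salt giving 0.2866 g of AgCl) are hypothetical numbers used only to show the arithmetic.

```python
AGCL_MOLAR_MASS = 143.32  # g/mol, rounded
CL_MOLAR_MASS = 35.45     # g/mol, rounded


def chloride_from_agcl(agcl_mass_g: float, sample_mass_g: float):
    """Return (moles of Cl-, mass percent Cl in the sample) from the AgCl mass."""
    agcl_moles = agcl_mass_g / AGCL_MOLAR_MASS
    # Ag+ + Cl- -> AgCl is 1:1, so moles of Cl- equal moles of AgCl.
    chloride_moles = agcl_moles
    chloride_mass_g = chloride_moles * CL_MOLAR_MASS
    return chloride_moles, 100.0 * chloride_mass_g / sample_mass_g


# Hypothetical weighings for illustration only.
chloride_moles, chloride_percent = chloride_from_agcl(0.2866, 0.1169)
```

A result near 60.6% chloride would be consistent with the salt being sodium chloride, whose theoretical chloride content is about 60.7%.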

Titration Methods

Titration is a quantitative chemical analysis method used to determine the concentration of an identified analyte.[14] A solution of known concentration, called the titrant, is added to a solution of the analyte until the reaction between the two is just complete, a point known as the equivalence point.[15]

Experimental Protocol: Acid-Base Titration to Determine the Stoichiometry of a Neutralization Reaction

- Preparation: Accurately measure a known volume of an acid solution with an unknown concentration (the analyte) into an Erlenmeyer flask. Add a few drops of a suitable pH indicator.
- Titrant Preparation: Fill a burette with a standardized solution of a base (the titrant) of known concentration. Record the initial volume.
- Titration: Slowly add the titrant to the analyte while continuously swirling the flask.
- Endpoint Determination: Continue adding the titrant dropwise until the indicator changes color permanently, signaling the endpoint of the titration. The endpoint is a close approximation of the equivalence point.
- Volume Measurement: Record the final volume of the titrant in the burette. The difference between the initial and final volumes gives the volume of titrant used.
- Calculation: Using the known concentration and the volume of the titrant, calculate the moles of the titrant. From the balanced chemical equation for the acid-base reaction, use the stoichiometric ratio to determine the moles of the analyte in the original solution.
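The titration calculation reduces to two conversions: titrant volume to moles, then titrant moles to analyte moles through the coefficients of the balanced equation. The sketch below assumes a hypothetical titration of sulfuric acid with sodium hydroxide (H₂SO₄ + 2NaOH → Na₂SO₄ + 2H₂O); the function name and volumes are illustrative.

```python
def analyte_molarity(
    titrant_molarity: float,
    titrant_volume_ml: float,
    analyte_volume_ml: float,
    analyte_coeff: int = 1,
    titrant_coeff: int = 1,
) -> float:
    """Return the analyte concentration (mol/L) at the equivalence point."""
    titrant_moles = titrant_molarity * titrant_volume_ml / 1000.0
    # Apply the stoichiometric ratio from the balanced equation.
    analyte_moles = titrant_moles * analyte_coeff / titrant_coeff
    return analyte_moles / (analyte_volume_ml / 1000.0)


# Hypothetical run: 25.00 mL of 0.100 M NaOH neutralizes 10.00 mL of H2SO4.
# One mole of H2SO4 reacts with two moles of NaOH.
h2so4_molarity = analyte_molarity(0.100, 25.00, 10.00, analyte_coeff=1, titrant_coeff=2)
```

For a 1:1 reaction such as HCl with NaOH, the default coefficients apply and the function reduces to the familiar M₁V₁ = M₂V₂ relationship.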

Spectrophotometric Methods

Spectrophotometry can be used to determine the stoichiometry of reactions that involve a colored reactant or product. The method of continuous variations (Job's plot) and the mole-ratio method are two common approaches.[16][17]

Experimental Protocol: Method of Continuous Variations (Job's Plot)

- Solution Preparation: Prepare a series of solutions where the total molar concentration of the two reactants (e.g., a metal ion and a ligand) is kept constant, but their mole fractions are varied.
- Absorbance Measurement: Measure the absorbance of each solution at a wavelength where the product (the metal-ligand complex) absorbs strongly, but the reactants do not.
- Data Plotting: Plot the absorbance versus the mole fraction of one of the reactants.
- Stoichiometry Determination: The plot will typically consist of two linear portions that intersect. The mole fraction at the point of intersection corresponds to the stoichiometric ratio of the reactants in the complex.[16] For example, a maximum absorbance at a mole fraction of 0.5 indicates a 1:1 stoichiometry.
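One way to locate the intersection is to fit a straight line to each branch of the plot and solve for the crossing point. The sketch below is a minimal example with synthetic, noise-free absorbance values (hypothetical data) plotted against the mole fraction of the metal; real data would need a judgment about which points belong to each linear region.

```python
def fit_line(points):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic (mole fraction of metal, absorbance) pairs for each linear branch.
rising_branch = [(0.0, 0.000), (0.1, 0.150), (0.2, 0.300)]
falling_branch = [(0.5, 0.375), (0.7, 0.225), (0.9, 0.075)]

m1, b1 = fit_line(rising_branch)
m2, b2 = fit_line(falling_branch)

# The two lines cross where m1*x + b1 == m2*x + b2.
x_metal = (b2 - b1) / (m1 - m2)

# Ligand-to-metal ratio implied by the mole fraction at the intersection.
ligand_per_metal = (1.0 - x_metal) / x_metal
```

For this synthetic data set the lines cross at a metal mole fraction of one third, which corresponds to two ligands per metal ion (an ML₂ complex).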

Visualizing Stoichiometric Relationships and Workflows

Diagrams are powerful tools for visualizing the complex relationships in signaling pathways and experimental workflows where stoichiometry plays a critical role.

Stoichiometry in Cellular Signaling

Cellular signaling pathways rely on precise protein-protein interactions with specific stoichiometries to transmit signals effectively. The Epidermal Growth Factor Receptor (EGFR) signaling pathway is a key regulator of cell proliferation and differentiation, and its dysregulation is often implicated in cancer.[13][18]

Caption: EGFR signaling pathway illustrating 1:1 ligand binding and 2:2 receptor dimerization.

Workflow for Drug Discovery

High-throughput screening (HTS) is a key process in drug discovery for identifying compounds that interact with a specific biological target. The workflow involves a series of steps where stoichiometric considerations are important for assay development and data analysis.

References

- 1. semanticscholar.org [semanticscholar.org]

- 2. m.youtube.com [m.youtube.com]

- 3. researchgate.net [researchgate.net]

- 4. nalam.ca [nalam.ca]

- 5. youtube.com [youtube.com]

- 6. A comprehensive pathway map of epidermal growth factor receptor signaling - PMC [pmc.ncbi.nlm.nih.gov]

- 7. The synthesis of active pharmaceutical ingredients (APIs) using continuous flow chemistry - PMC [pmc.ncbi.nlm.nih.gov]

- 8. tianmingpharm.com [tianmingpharm.com]

- 9. arborpharmchem.com [arborpharmchem.com]

- 10. solubilityofthings.com [solubilityofthings.com]

- 11. Atorvastatin (Lipitor) by MCR - PMC [pmc.ncbi.nlm.nih.gov]

- 12. atlantis-press.com [atlantis-press.com]

- 13. bio-rad-antibodies.com [bio-rad-antibodies.com]

- 14. Protein abundance of AKT and ERK pathway components governs cell type‐specific regulation of proliferation - PMC [pmc.ncbi.nlm.nih.gov]

- 15. fda.gov [fda.gov]

- 16. fda.gov [fda.gov]

- 17. Stoichiometry of chromatin-associated protein complexes revealed by label-free quantitative mass spectrometry-based proteomics - PMC [pmc.ncbi.nlm.nih.gov]

- 18. creative-diagnostics.com [creative-diagnostics.com]

Understanding Odds Ratios in Clinical Studies: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of odds ratios (ORs), a critical statistical measure used in clinical studies to quantify the strength of association between an exposure (such as a drug treatment or a risk factor) and an outcome (like a disease or a side effect). Understanding the calculation, interpretation, and limitations of odds ratios is paramount for accurately interpreting clinical research and making informed decisions in drug development.

Core Concepts: What is an Odds Ratio?

An odds ratio is a measure of association between an exposure and an outcome.[1][2] It represents the odds that an outcome will occur given a particular exposure, compared to the odds of the outcome occurring in the absence of that exposure.[1] Odds ratios are commonly used in case-control studies, but also appear in cross-sectional and cohort studies.[1][3]

An odds ratio is calculated from a 2x2 contingency table, which cross-tabulates the exposure and outcome.[4][5][6][7][8]

Interpreting the Odds Ratio:

The value of the odds ratio indicates the strength and direction of the association:

- OR = 1: The exposure does not affect the odds of the outcome. There is no association.[1][2][9]
- OR > 1: The exposure is associated with higher odds of the outcome. This suggests the exposure may be a risk factor.[1][9]
- OR < 1: The exposure is associated with lower odds of the outcome. This suggests a protective effect.[1][9][10]

Data Presentation and Calculation

Quantitative data from a clinical study examining the association between an exposure and an outcome is typically summarized in a 2x2 table.

The 2x2 Contingency Table

This table is the foundation for calculating the odds ratio.

| | Outcome Present (e.g., Disease) | Outcome Absent (e.g., No Disease) |
|---|---|---|
| Exposed | a | b |
| Not Exposed | c | d |

- a: Number of exposed individuals with the outcome.[1]
- b: Number of exposed individuals without the outcome.[1]
- c: Number of unexposed individuals with the outcome.[1]
- d: Number of unexposed individuals without the outcome.[1]

Calculating the Odds Ratio

The odds ratio is calculated as the ratio of the odds of the outcome in the exposed group to the odds of the outcome in the unexposed group.[4]

- Odds of outcome in the exposed group: a / b[4]
- Odds of outcome in the unexposed group: c / d[4]
- Odds Ratio (OR) = (a / b) / (c / d) = ad / bc [4][5][6]

Example Calculation:

Consider a study investigating the association between smoking and lung cancer.

| | Lung Cancer | No Lung Cancer |
|---|---|---|
| Smokers | 17 | 83 |
| Non-smokers | 1 | 99 |

Using the formula: OR = (17 * 99) / (83 * 1) = 1683 / 83 ≈ 20.28

Interpretation: In this hypothetical study, the odds of developing lung cancer among smokers are over 20 times the odds of developing lung cancer among non-smokers.[4]
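The calculation above is short enough to express directly in code. This is a minimal sketch; the function name is illustrative, and it refuses tables where b or c is zero because the ratio is then undefined.

```python
def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Odds ratio from a 2x2 table: (a / b) / (c / d) = ad / bc."""
    if b == 0 or c == 0:
        raise ValueError("odds ratio is undefined when b or c is zero")
    return (a * d) / (b * c)


# Smoking and lung cancer example: a=17, b=83, c=1, d=99.
or_value = odds_ratio(17, 83, 1, 99)
```

Rounded to two decimals, `or_value` reproduces the 20.28 obtained by hand.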

Statistical Significance and Precision

The odds ratio calculated from a sample is a point estimate. To understand its reliability, we must consider its confidence interval and p-value.

Confidence Intervals

A 95% confidence interval (CI) gives a range of values that is expected to contain the true population odds ratio; if the study were repeated many times, about 95% of intervals constructed this way would cover the true value.[3][11]

- A narrow CI suggests a more precise estimate of the odds ratio.[1]
- A wide CI indicates less precision.[1]

Crucially, if the 95% CI for an odds ratio includes 1.0, the result is not considered statistically significant at the 0.05 level.[1][9][10][12] This means that the data are consistent with there being no association between the exposure and the outcome.
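The guide does not say how the interval is computed. One common approach, assumed here, is the log (Woolf) method: the standard error of ln(OR) is approximated as sqrt(1/a + 1/b + 1/c + 1/d) and the limits are exponentiated back. The sketch below applies it to the smoking example; the function name is illustrative.

```python
import math


def odds_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Return (OR, lower, upper) using the log method; all cells must be > 0."""
    or_value = (a * d) / (b * c)
    # Approximate standard error of ln(OR) (an assumption of this sketch).
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_value) - z * se_log_or)
    upper = math.exp(math.log(or_value) + z * se_log_or)
    return or_value, lower, upper


or_value, lower, upper = odds_ratio_ci(17, 83, 1, 99)

# The result is significant at the 0.05 level only if the interval excludes 1.0.
significant = not (lower <= 1.0 <= upper)
```

The interval here is very wide because one cell contains a single observation, which illustrates how a large point estimate can still be imprecise. With counts this small, the normal approximation is rough and an exact method may be preferable.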

P-value

The p-value tests the null hypothesis that there is no association between the exposure and the outcome (i.e., the true odds ratio is 1.0).[9][13]

- A p-value < 0.05 is typically considered statistically significant, suggesting that the observed association is unlikely to be due to chance.[9][14]
- A p-value ≥ 0.05 indicates that there is not enough evidence to reject the null hypothesis of no association.[14]

The presence of a statistically significant p-value should align with a 95% confidence interval that does not cross 1.0.
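A simple way to see this alignment is a Wald test on the log odds ratio, which uses the same standard error as the log-method confidence interval. The choice of test is an assumption of this sketch; the guide does not name one, and for sparse tables an exact test such as Fisher's is often preferred.

```python
import math


def wald_p_value(a: int, b: int, c: int, d: int) -> float:
    """Two-sided p-value for H0: OR = 1, using a normal approximation on ln(OR)."""
    log_or = math.log((a * d) / (b * c))
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = log_or / se_log_or
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Smoking and lung cancer example from the text.
p_value = wald_p_value(17, 83, 1, 99)
```

Because the test statistic and the 95% interval are built from the same quantities, this p-value falls below 0.05 exactly when the log-method interval excludes 1.0.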

Summary of Quantitative Interpretation

| Odds Ratio (OR) | 95% Confidence Interval (CI) | P-value | Interpretation |
|---|---|---|---|
| > 1 | Does not include 1.0 | < 0.05 | Statistically significant increased odds of the outcome with exposure. |
| < 1 | Does not include 1.0 | < 0.05 | Statistically significant decreased odds of the outcome with exposure (protective effect). |
| Any value | Includes 1.0 | ≥ 0.05 | No statistically significant association between the exposure and the outcome. The observed association could be due to chance.[1][9][12] |
| = 1 | - | > 0.99 | No association between exposure and outcome. |

Methodologies of Key Experimental Protocols

The "experimental protocol" in this context refers to the design of the clinical study. The choice of study design influences how the odds ratio is used and interpreted.

Case-Control Studies

Methodology:

- Identify Cases: Researchers identify individuals who have the outcome of interest (cases).
- Select Controls: A comparable group of individuals who do not have the outcome are selected (controls).
- Ascertain Exposure: Researchers look back in time (retrospectively) to determine the exposure status of individuals in both groups.
- Data Analysis: A 2x2 table is constructed, and the odds ratio is calculated to compare the odds of exposure among cases to the odds of exposure among controls.[15]

Case-control studies are particularly efficient for studying rare diseases.[15] In this design, the odds ratio is the primary measure of association.[15][16]

Cohort Studies

Methodology:

- Select Cohorts: Researchers select a group of individuals who are initially free of the outcome. This group is divided into those who are exposed to a risk factor and those who are not.
- Follow-up: Both cohorts are followed over time.
- Ascertain Outcome: The incidence of the outcome is measured in both the exposed and unexposed groups.
- Data Analysis: A 2x2 table is constructed. While a risk ratio (relative risk) is the preferred measure of association in cohort studies, an odds ratio can also be calculated.

Randomized Controlled Trials (RCTs)

Methodology:

- Participant Recruitment: A sample of participants is recruited.
- Randomization: Participants are randomly assigned to either an intervention group (exposed) or a control group (unexposed).
- Follow-up: Both groups are followed for a predefined period.
- Outcome Assessment: The number of participants experiencing the outcome of interest is recorded in each group.
- Data Analysis: A 2x2 table is created, and the odds ratio can be calculated. As with cohort studies, the risk ratio is often the more direct measure of effect.

Visualizations

Logical Workflow for Calculating and Interpreting an Odds Ratio

Caption: Logical workflow for odds ratio analysis.

Signaling Pathway Association Example

Odds ratios are frequently used in genetic association studies to determine if a particular gene variant (exposure) is associated with a disease (outcome). This can provide clues about the involvement of certain signaling pathways.

Consider a hypothetical study investigating the association of a variant in Gene X (a component of the "Growth Factor Signaling Pathway") with a specific type of cancer.

Caption: Association of a gene variant with a clinical outcome.

Common Misinterpretations and Caveats

Odds Ratio vs. Relative Risk (Risk Ratio)

A frequent error is interpreting the odds ratio as a relative risk (RR).[17] While the two are often used interchangeably, they are mathematically distinct.

- Relative Risk (RR): The ratio of the probability of an event in the exposed group to the probability in the unexposed group. RR = [a / (a + b)] / [c / (c + d)].
- Odds Ratio (OR): The ratio of the odds of an event in the exposed group to the odds in the unexposed group. OR = (a / b) / (c / d).

The odds ratio always lies further from 1 than the relative risk: it overestimates the RR when the OR is greater than 1 and underestimates it when the OR is less than 1. This exaggeration becomes more pronounced as the prevalence of the outcome increases.[18]

The Rare Disease Assumption: When the outcome of interest is rare (generally considered to have a prevalence of <10%), the odds ratio provides a good approximation of the relative risk.[2][19]
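The effect of outcome prevalence can be checked numerically. In the sketch below, both hypothetical tables have a relative risk of exactly 2, but the outcome is rare in the first and common in the second; the counts and function name are invented for illustration.

```python
def risk_and_odds_ratio(a: int, b: int, c: int, d: int):
    """Return (relative risk, odds ratio) for a 2x2 table."""
    relative_risk = (a / (a + b)) / (c / (c + d))
    odds_ratio = (a * d) / (b * c)
    return relative_risk, odds_ratio


# Rare outcome: 2% risk in the exposed group vs. 1% in the unexposed group.
rr_rare, or_rare = risk_and_odds_ratio(2, 98, 1, 99)

# Common outcome: 60% risk in the exposed group vs. 30% in the unexposed group.
rr_common, or_common = risk_and_odds_ratio(60, 40, 30, 70)
```

With the rare outcome the odds ratio (about 2.02) is nearly identical to the relative risk, whereas with the common outcome it rises to 3.5 even though the relative risk is still 2.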

Magnitude and Clinical Significance

A statistically significant odds ratio does not automatically imply clinical significance. A large odds ratio with a very wide confidence interval may be less meaningful than a smaller, more precise odds ratio. The practical importance of the finding must always be considered in the context of the disease, the intervention, and patient populations.[12]

Reporting Standards

The Consolidated Standards of Reporting Trials (CONSORT) statement provides guidelines for reporting clinical trials.[20][21] When reporting odds ratios, it is recommended to include:

- The odds ratio value.
- The 95% confidence interval.[22]
- The corresponding p-value.
- Both relative and absolute measures of association where possible.[23][24]

This comprehensive reporting allows for a more complete and transparent interpretation of the study's findings.

References

- 1. Explaining Odds Ratios - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Odds ratio - Wikipedia [en.wikipedia.org]

- 3. Odds ratios and 95% confidence intervals | rBiostatistics.com [rbiostatistics.com]

- 4. Odds Ratio - StatPearls - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 5. biochemia-medica.com [biochemia-medica.com]

- 6. researchgate.net [researchgate.net]

- 7. openepi.com [openepi.com]

- 8. m.youtube.com [m.youtube.com]

- 9. statisticsbyjim.com [statisticsbyjim.com]

- 10. 2minutemedicine.com [2minutemedicine.com]

- 11. m.youtube.com [m.youtube.com]

- 12. fiveable.me [fiveable.me]

- 13. stats.stackexchange.com [stats.stackexchange.com]

- 14. testingtreatments.org [testingtreatments.org]

- 15. radiopaedia.org [radiopaedia.org]

- 16. applications.emro.who.int [applications.emro.who.int]

- 17. m.youtube.com [m.youtube.com]

- 18. feinberg.northwestern.edu [feinberg.northwestern.edu]

- 19. When can odds ratios mislead? - PMC [pmc.ncbi.nlm.nih.gov]

- 20. Reporting Guidelines: The Consolidated Standards of Reporting Trials (CONSORT) Framework - PMC [pmc.ncbi.nlm.nih.gov]

- 21. rama.mahidol.ac.th [rama.mahidol.ac.th]

- 22. The Complete Guide: How to Report Odds Ratios [statology.org]

- 23. Foundational Statistical Principles in Medical Research: A Tutorial on Odds Ratios, Relative Risk, Absolute Risk, and Number Needed to Treat - PMC [pmc.ncbi.nlm.nih.gov]

- 24. ascopubs.org [ascopubs.org]

Unlocking Cellular Secrets: A Technical Guide to Isotopic Ratio Analysis in Drug Development

For Researchers, Scientists, and Drug Development Professionals

In the intricate world of drug discovery and development, understanding the precise mechanisms of action, metabolic fates, and pathway dynamics of novel therapeutic agents is paramount. Isotopic ratio analysis has emerged as a powerful tool, offering detailed insights into the biological processes that govern a drug's efficacy and safety. This technical guide delves into the core principles of key isotopic ratio analysis techniques, providing detailed experimental protocols and comparative data to help researchers apply these methods in drug development.

Core Principles of Isotopic Ratio Analysis

Isotopic ratio analysis is founded on the principle of measuring the relative abundance of isotopes in a sample. Isotopes are atoms of the same element that possess an equal number of protons but differ in their number of neutrons, resulting in different atomic masses. This subtle mass difference is the basis for their separation and quantification.

The isotopic composition of a sample is typically expressed in delta (δ) notation, which represents the deviation of the sample's isotope ratio from that of an international standard in parts per thousand (‰ or "per mil"). This standardized notation allows for the comparison of data across different laboratories and experiments. The delta value is calculated using the following formula:

δ (‰) = [(R_sample / R_standard) - 1] * 1000

Where R_sample is the ratio of the heavy to light isotope in the sample and R_standard is the corresponding ratio in the international standard.
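The delta formula and its inverse can be sketched as below. The reference ratio `R_VPDB` is a commonly cited approximate ¹³C/¹²C value for the VPDB standard and is treated here as an assumption; the sample ratio is hypothetical. Laboratories should use the reference value specified by their own calibration.

```python
# Approximate 13C/12C ratio of the VPDB standard (assumed value for illustration).
R_VPDB = 0.011180


def delta_permil(r_sample: float, r_standard: float) -> float:
    """Delta value in per mil: [(R_sample / R_standard) - 1] * 1000."""
    return (r_sample / r_standard - 1.0) * 1000.0


def ratio_from_delta(delta: float, r_standard: float) -> float:
    """Invert the delta formula to recover the isotope ratio."""
    return r_standard * (delta / 1000.0 + 1.0)


# Hypothetical sample ratio, slightly depleted in 13C relative to the standard.
d13c = delta_permil(0.010900, R_VPDB)
recovered = ratio_from_delta(d13c, R_VPDB)
```

A negative delta value, as in this example (about -25‰), means the sample contains proportionally less of the heavy isotope than the standard.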

Three primary techniques dominate the landscape of isotopic ratio analysis in drug development and life sciences:

- Isotope Ratio Mass Spectrometry (IRMS): A highly precise technique for measuring the relative abundance of stable isotopes (e.g., ¹³C/¹²C, ¹⁵N/¹⁴N).
- Accelerator Mass Spectrometry (AMS): An ultra-sensitive method for quantifying rare, long-lived radioisotopes, most notably ¹⁴C, at exceptionally low levels.[1][2]
- Nuclear Magnetic Resonance (NMR) Spectroscopy: A powerful tool for determining the position-specific isotopic composition within a molecule, providing detailed insights into metabolic pathways.

Comparative Analysis of Key Techniques

The choice of analytical technique depends on the specific research question, the nature of the isotopic label, and the required sensitivity and precision. The following table summarizes the key performance characteristics of IRMS, AMS, and NMR.

| Feature | Isotope Ratio Mass Spectrometry (IRMS) | Accelerator Mass Spectrometry (AMS) | Nuclear Magnetic Resonance (NMR) Spectroscopy |
|---|---|---|---|
| Principle | Measures the ratio of stable isotopes by separating ions based on their mass-to-charge ratio. | Counts individual rare radioisotope atoms after accelerating them to high energies.[1] | Measures the nuclear magnetic resonance of isotopes to determine their chemical environment and abundance. |
| Isotopes Measured | Stable isotopes (e.g., ²H, ¹³C, ¹⁵N, ¹⁸O, ³⁴S). | Long-lived radioisotopes (primarily ¹⁴C, also ³H, ²⁶Al, ³⁶Cl, ¹²⁹I). | NMR-active stable isotopes (e.g., ¹H, ¹³C, ¹⁵N, ³¹P).[3] |
| Sensitivity | High (ppm to ppb range). | Ultra-high (attomole to zeptomole range), up to a million times more sensitive than decay counting.[1][4] | Lower compared to MS-based methods. |
| Precision | Very high (typically <0.2‰).[5] | High, with measurements usually achieving higher precision than radiometric dating methods.[6] | Good, with differences between IRMS and NMR for intramolecular ¹³C distribution being statistically insignificant (<0.3‰).[7] |
| Sample Size | Milligram to microgram range.[8] | Microgram to nanogram range.[9] | Milligram range. |
| Analysis Time | Relatively fast (minutes per sample for automated systems). | Can be longer due to sample preparation (graphitization), but the run time per sample is a few hours.[6] | Can be time-consuming, especially for complex mixtures or low-abundance metabolites. |
| Key Applications in Drug Development | Bulk stable isotope analysis, metabolic tracing, food authenticity.[10][11] | Microdosing studies, absolute bioavailability, ADME studies, metabolite profiling at very low concentrations.[12][13] | Metabolic flux analysis, pathway elucidation, position-specific isotope analysis.[14][15][16] |

Experimental Protocols

Detailed and standardized experimental protocols are crucial for obtaining accurate and reproducible results. The following sections provide step-by-step methodologies for the three key isotopic ratio analysis techniques.

Elemental Analysis - Isotope Ratio Mass Spectrometry (EA-IRMS) for Bulk Stable Isotope Analysis

This protocol outlines the general procedure for determining the bulk ¹³C and ¹⁵N isotopic composition of a biological sample.

Methodology:

- Sample Preparation:
  - Dry the biological sample to a constant weight (e.g., freeze-drying or oven-drying at 60°C).
  - Homogenize the dried sample to a fine powder using a ball mill or mortar and pestle. This ensures a representative subsample is taken for analysis.
  - For samples containing carbonates, an acid fumigation step (e.g., with 12M HCl) is required to remove the inorganic carbon.
  - Accurately weigh 0.5-1.5 mg of the prepared sample into a tin capsule.
- Combustion and Gas Purification:
  - The tin capsule containing the sample is dropped into a high-temperature (typically >1000°C) combustion furnace of the elemental analyzer.
  - The sample is flash-combusted in the presence of a pulse of pure oxygen.
  - The resulting gases (CO₂, N₂, H₂O, SO₂) are carried by a helium carrier gas through a reduction furnace (containing copper wires) to reduce nitrogen oxides to N₂ and remove excess oxygen.
  - Water is removed by a chemical trap (e.g., magnesium perchlorate).
- Gas Chromatography and Introduction to IRMS:
  - The purified CO₂ and N₂ gases are separated by a gas chromatography column.
  - The separated gases are introduced into the ion source of the IRMS via a continuous flow interface.
- Mass Analysis:
  - In the ion source, the gas molecules are ionized by electron impact.
  - The resulting ions are accelerated and passed through a magnetic field, which separates them based on their mass-to-charge ratio.
  - For CO₂, ion beams corresponding to masses 44 (¹²C¹⁶O₂), 45 (¹³C¹⁶O₂ and ¹²C¹⁷O¹⁶O), and 46 (¹²C¹⁸O¹⁶O) are simultaneously collected in separate Faraday cup detectors.
  - For N₂, ion beams for masses 28 (¹⁴N¹⁴N), 29 (¹⁴N¹⁵N), and 30 (¹⁵N¹⁵N) are collected.
- Data Analysis:
  - The instrument software calculates the isotope ratios from the measured ion beam intensities.
  - The raw data is corrected for instrumental fractionation and calibrated against international standards (e.g., Vienna Pee Dee Belemnite (VPDB) for carbon, atmospheric N₂ for nitrogen) to obtain the final δ¹³C and δ¹⁵N values.

Accelerator Mass Spectrometry (AMS) for ¹⁴C-Labeled Compounds in Drug Metabolism Studies

This protocol describes the general workflow for quantifying a ¹⁴C-labeled drug and its metabolites in biological matrices.

Methodology:

- Sample Collection and Preparation:
  - Following administration of a ¹⁴C-labeled drug, collect biological samples (e.g., plasma, urine, tissue).
  - If necessary, perform sample preparation to isolate the analyte of interest or to separate the parent drug from its metabolites (e.g., liquid chromatography).
  - The prepared sample, containing the ¹⁴C-labeled compound, is placed in a quartz tube with an excess of copper(II) oxide.
- Combustion and CO₂ Purification:
  - The quartz tube is sealed under vacuum and heated to ~900°C to combust all organic material to CO₂.
  - The resulting CO₂ is cryogenically purified to remove water and other non-condensable gases.
- Graphitization:
  - The purified CO₂ is converted to solid graphite, which is the required form for the AMS ion source.
  - This is typically achieved by reducing the CO₂ with hydrogen gas at high temperature (~600°C) in the presence of a metal catalyst (e.g., iron or cobalt powder).
  - The resulting graphite is pressed into an aluminum target holder.
- AMS Analysis:
  - The graphite target is placed in the ion source of the AMS instrument.
  - A beam of cesium ions is directed at the target, sputtering negative carbon ions.
  - The negative ions are accelerated to high energies (mega-electron volts) in a tandem Van de Graaff accelerator.
  - In the high-voltage terminal, the ions pass through a "stripper" (a thin foil or gas), which removes several electrons, converting them into positive ions and breaking up any molecular isobars (e.g., ¹³CH⁻).
  - The positive ions are further accelerated and then separated by a series of magnets and electrostatic analyzers based on their mass, energy, and charge.
  - The rare ¹⁴C ions are counted individually in a detector, while the abundant stable isotopes (¹²C and ¹³C) are measured as a current in a Faraday cup.
- Data Analysis:
  - The ratio of ¹⁴C to total carbon is calculated.
  - This ratio is then used to determine the concentration of the ¹⁴C-labeled drug or metabolite in the original biological sample.

NMR-Based Metabolic Flux Analysis Using Stable Isotope Tracers

This protocol provides a general framework for tracing the metabolic fate of a stable isotope-labeled substrate (e.g., ¹³C-glucose) in cell culture.

Methodology:

- Cell Culture and Isotope Labeling:
  - Culture cells in a defined medium.
  - Replace the standard medium with a medium containing a known concentration of the stable isotope-labeled substrate (e.g., [U-¹³C]-glucose, where all six carbon atoms are ¹³C).
  - Incubate the cells for a specific period to allow for the uptake and metabolism of the labeled substrate. The incubation time will depend on the metabolic pathway of interest and the rate of flux.
- Metabolite Extraction:
  - Rapidly quench metabolism to halt enzymatic activity. This is typically done by aspirating the medium and adding a cold solvent, such as 80% methanol pre-chilled to -80°C.
  - Scrape the cells and collect the cell-solvent mixture.
  - Lyse the cells (e.g., by sonication or freeze-thaw cycles).
  - Centrifuge the lysate to pellet cell debris.
  - Collect the supernatant, which contains the intracellular metabolites.
- Sample Preparation for NMR:
  - Lyophilize or evaporate the supernatant to dryness.
  - Reconstitute the dried metabolite extract in a deuterated solvent (e.g., D₂O) containing a known concentration of an internal standard (e.g., DSS or TSP) for chemical shift referencing and quantification.
  - Transfer the sample to an NMR tube.
- NMR Data Acquisition:
  - Acquire one-dimensional (1D) and/or two-dimensional (2D) NMR spectra.
  - 1D ¹H NMR provides an overview of the metabolite profile.
  - 2D heteronuclear correlation spectra, such as ¹H-¹³C HSQC (Heteronuclear Single Quantum Coherence), are crucial for resolving overlapping signals and identifying which protons are attached to ¹³C-labeled carbons.
  - Other 2D experiments like ¹H-¹³C HSQC-TOCSY can provide information about the connectivity of ¹³C atoms within a molecule.
- Data Analysis and Flux Interpretation:
  - Process the NMR spectra (e.g., Fourier transformation, phasing, baseline correction).
  - Identify metabolites by comparing the chemical shifts and coupling patterns to spectral databases.
  - Quantify the relative abundance of different isotopomers (molecules with the same chemical formula but different isotopic compositions) for each metabolite. The pattern of ¹³C incorporation into downstream metabolites reveals the activity of different metabolic pathways.
  - Metabolic flux analysis software can be used to model the data and calculate the rates of metabolic reactions.

Visualizing Cellular Processes: Workflows and Pathways

Understanding the complex interplay of molecules within a cell is critical for effective drug development. Isotopic ratio analysis provides the data to map these interactions. The following diagrams, generated using the DOT language, illustrate key experimental workflows and a prominent signaling pathway relevant to drug discovery.

Experimental Workflow for Isotope Ratio Mass Spectrometry

Workflow for Accelerator Mass Spectrometry of ¹⁴C-Labeled Compounds

References

- 1. Accelerator mass spectrometry in pharmaceutical research and development--a new ultrasensitive analytical method for isotope measurement - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. Parallel Accelerator and Molecular Mass Spectrometry Measurement of Carbon-14-Labeled Analytes - PMC [pmc.ncbi.nlm.nih.gov]

- 3. NMR-Based Stable Isotope Tracing of Cancer Metabolism | Springer Nature Experiments [experiments.springernature.com]

- 4. Accelerator Mass Spectrometry in Pharmaceutical Research and Deve...: Ingenta Connect [ingentaconnect.com]

- 5. researchgate.net [researchgate.net]

- 6. Accelerator Mass Spectrometry, C14 Dating, What is AMS? [radiocarbon.com]

- 7. forensic-isotopes.org [forensic-isotopes.org]

- 8. Solid Samples | Environmental Isotope Laboratory | University of Waterloo [uwaterloo.ca]

- 9. researchgate.net [researchgate.net]

- 10. metsol.com [metsol.com]

- 11. Forensic application of irms | PDF [slideshare.net]

- 12. openmedscience.com [openmedscience.com]

- 13. researchgate.net [researchgate.net]

- 14. NMR Based Metabolomics - PMC [pmc.ncbi.nlm.nih.gov]

- 15. NMR-Based Metabolic Flux Analysis - Creative Proteomics MFA [creative-proteomics.com]

- 16. Stable Isotope-Resolved Metabolomics by NMR | Springer Nature Experiments [experiments.springernature.com]

The Redfield Ratio in Oceanography: A Core Technical Guide

Authored for Researchers, Scientists, and Drug Development Professionals

Abstract

The Redfield ratio, a foundational concept in oceanography, describes the remarkably consistent atomic ratio of carbon (C), nitrogen (N), and phosphorus (P) in marine phytoplankton and, consequently, in deep ocean water masses. This technical guide explores the Redfield ratio in depth: its historical context, its biogeochemical significance, and the experimental methodologies used to determine it. It also covers the observed variations of the ratio across oceanic provinces and phytoplankton species, presenting quantitative data in structured tables for comparative analysis. Detailed experimental protocols for measuring key elemental and nutrient concentrations are provided to facilitate reproducible research. Finally, a conceptual diagram illustrates the central role of the Redfield ratio in the cycling of essential nutrients within marine ecosystems.

Introduction

In 1934, American oceanographer Alfred C. Redfield first described a consistent atomic ratio of essential elements within marine biomass.[1][2] Through meticulous analysis of phytoplankton composition and dissolved nutrient concentrations in the Atlantic, Indian, and Pacific Oceans, he established the canonical Redfield ratio of C:N:P = 106:16:1.[1][3] This stoichiometry reflects the fundamental building blocks of life in the sea and suggests a profound link between the biogeochemistry of the oceans and the physiological requirements of phytoplankton, the primary producers at the base of the marine food web.

The Redfield ratio is a cornerstone of marine biogeochemistry, providing a framework for understanding nutrient limitation, carbon sequestration, and the overall functioning of ocean ecosystems.[1] It posits that the elemental composition of phytoplankton governs the relative concentrations of dissolved inorganic nutrients in the deep ocean through photosynthesis in the sunlit surface waters and remineralization at depth.

While the canonical 106:16:1 ratio serves as a valuable benchmark, significant deviations have been observed.[1][2] These variations are influenced by a multitude of factors, including phytoplankton species composition, nutrient availability, geographic location, and physical oceanographic conditions.[4][5] Understanding these variations is critical for refining global biogeochemical models and for assessing the impact of climate change on marine productivity and carbon cycling.

This guide provides a technical overview of the Redfield ratio, with a focus on the quantitative data that defines its variations and the experimental protocols required to measure its components.

Quantitative Data on Elemental Ratios

The elemental composition of marine organic matter is not static. The following tables summarize the canonical Redfield ratio and its observed variations in different oceanic regions and among various phytoplankton taxa.

Table 1: The Canonical and Extended Redfield Ratios

| Ratio Type | C | N | P | Si | Fe | O₂ |
|---|---|---|---|---|---|---|
| Canonical Redfield ratio[1][3] | 106 | 16 | 1 | - | - | - |
| Redfield-Brzezinski ratio (for diatoms)[1] | 106 | 16 | 1 | 15 | - | - |
| Extended Redfield ratio (with iron)[1] | 106 | 16 | 1 | - | 0.1-0.001 | - |
| Oxygen to carbon ratio[1] | 106 | - | - | - | - | 138 |
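The O₂:C value of 138:106 in Table 1 is usually rationalized with an idealized remineralization reaction. The formula unit for organic matter used below is the conventional textbook idealization (carbohydrate, ammonia, and phosphoric acid units in Redfield proportions) and is an assumption for illustration, not a measured composition:

```latex
\[
(\mathrm{CH_2O})_{106}(\mathrm{NH_3})_{16}(\mathrm{H_3PO_4}) + 138\,\mathrm{O_2}
\;\longrightarrow\;
106\,\mathrm{CO_2} + 16\,\mathrm{HNO_3} + \mathrm{H_3PO_4} + 122\,\mathrm{H_2O}
\]
```

Of the 138 O₂, 106 oxidize the carbon and the remaining 32 oxidize the 16 nitrogen atoms from ammonia to nitrate (2 O₂ per N). Read in reverse, the same equation describes the uptake of CO₂, nitrate, and phosphate during photosynthesis.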

Table 2: Regional Variations in Particulate Organic Matter C:N:P Ratios

| Oceanic Region | C:N:P Ratio | Reference |
|---|---|---|
| Global Median (post-1970s data) | 163:22:1 | [2] |
| Oligotrophic Subtropical Gyres | ~195:28:1 | [4] |
| Eutrophic Polar Waters | ~78:13:1 | [4] |
| Southern Ocean (Polar) | ~12.5:1 (N:P) | [6] |
| Southern Ocean (Sub-Antarctic) | ~20:1 (N:P) | [6] |
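To make the regional values in Table 2 easier to compare, each C:N:P triple can be broken into pairwise ratios and expressed as a percent deviation from the canonical 106:16:1. The sketch below uses the three full triples from the table; the function names are illustrative only.

```python
CANONICAL = (106.0, 16.0, 1.0)  # canonical Redfield C:N:P


def pairwise_ratios(c, n, p):
    """Split a C:N:P triple into its three pairwise molar ratios."""
    return {"C:N": c / n, "C:P": c / p, "N:P": n / p}


def percent_deviation(observed, reference=CANONICAL):
    """Percent difference of each pairwise ratio from the reference triple."""
    obs = pairwise_ratios(*observed)
    ref = pairwise_ratios(*reference)
    return {key: 100.0 * (obs[key] - ref[key]) / ref[key] for key in obs}


# Approximate C:N:P values taken from Table 2.
regions = {
    "Global median": (163, 22, 1),
    "Oligotrophic subtropical gyres": (195, 28, 1),
    "Eutrophic polar waters": (78, 13, 1),
}

deviations = {name: percent_deviation(cnp) for name, cnp in regions.items()}
for name, dev in deviations.items():
    print(name, {key: round(value, 1) for key, value in dev.items()})
```

In these numbers the C:P and N:P ratios shift far more than C:N, which stays within roughly 12% of the canonical 6.6 in all three regions.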

Table 3: Variations in N:P Ratios Among Phytoplankton

| Condition | N:P Ratio Range | Reference |
|---|---|---|
| Nitrogen or Phosphorus Limitation | 6:1 to 60:1 | [1] |
| Nutrient-Replete Laboratory Cultures | 5:1 to 19:1 | [7] |

Experimental Protocols

Accurate determination of the Redfield ratio relies on precise measurements of the elemental composition of particulate organic matter (primarily phytoplankton) and the concentrations of dissolved inorganic nutrients in seawater.

Determination of Particulate Organic Carbon (POC) and Particulate Nitrogen (PN)

The analysis of POC and PN in marine particulate matter is typically performed using a CHNS/O elemental analyzer.[8][9][10][11][12]

Methodology:

- Sample Collection: Seawater samples are filtered through pre-combusted glass fiber filters (e.g., Whatman GF/F) to collect particulate matter.[13][14] The volume of water filtered is recorded.
- Sample Preparation: The filters are dried to remove water. To remove inorganic carbon (carbonates), the filters are exposed to acid fumes (e.g., hydrochloric acid) in a desiccator.[13]
- Combustion: A small punch of the filter is placed in a tin capsule and introduced into a high-temperature combustion furnace (typically around 900-1000°C) of the elemental analyzer.[8][9]
- Gas Separation and Detection: The combustion process converts carbon to carbon dioxide (CO₂) and nitrogen to nitrogen gas (N₂). These gases are separated by gas chromatography and quantified using a thermal conductivity detector (TCD).[8][9][12]
- Quantification: The instrument is calibrated using a standard of known C and N content (e.g., acetanilide).[8][13] The amounts of C and N in the sample are then calculated based on the detector response.
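Once the analyzer reports carbon and nitrogen masses for a filter punch, converting them to seawater concentrations and a molar C:N ratio is simple arithmetic. The sketch below assumes the analyzer output is in micrograms, that a filter blank is subtracted, and that the punch is a known fraction of the filter's loaded area; the function name and all sample numbers are hypothetical.

```python
ATOMIC_MASS_C = 12.011  # g/mol
ATOMIC_MASS_N = 14.007  # g/mol


def filter_to_concentration(mass_ug, blank_ug, atomic_mass, punch_fraction, volume_l):
    """Convert an element mass on a filter punch to umol per litre of seawater."""
    if not 0 < punch_fraction <= 1:
        raise ValueError("punch_fraction must be in (0, 1]")
    if volume_l <= 0:
        raise ValueError("volume_l must be positive")
    # Blank-correct the punch, then scale up to the whole filter.
    whole_filter_ug = (mass_ug - blank_ug) / punch_fraction
    # ug divided by g/mol gives umol; divide by the filtered volume.
    return whole_filter_ug / atomic_mass / volume_l


# Hypothetical run: half of the filter analyzed, 2.0 L of seawater filtered.
poc = filter_to_concentration(125.11, 5.0, ATOMIC_MASS_C, punch_fraction=0.5, volume_l=2.0)
pn = filter_to_concentration(22.0105, 1.0, ATOMIC_MASS_N, punch_fraction=0.5, volume_l=2.0)
molar_c_to_n = poc / pn
print(round(poc, 3), round(pn, 3), round(molar_c_to_n, 3))
```

The ratio must be formed from molar quantities, not masses: a mass ratio would understate C:N by the factor 12.011/14.007.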

Determination of Dissolved Inorganic Nutrients

3.2.1. Nitrate (NO₃⁻) Analysis: Cadmium Reduction Method

This is a widely used colorimetric method for the determination of nitrate in seawater.[1][15][16][17][18][19]

Methodology:

- Reduction: The seawater sample is passed through a column containing copper-coated cadmium filings.[1][17] The cadmium reduces nitrate to nitrite (NO₂⁻).
- Diazotization: The nitrite then reacts with sulfanilamide in an acidic solution to form a diazonium compound.[1][17]
- Coupling: N-(1-Naphthyl)-ethylenediamine dihydrochloride is added, which couples with the diazonium compound to form a colored azo dye.[1][17]
- Spectrophotometry: The intensity of the pink/red color is proportional to the original nitrate concentration (plus any initial nitrite) and is measured using a spectrophotometer at a specific wavelength (typically around 540-550 nm).[19]
- Correction: A separate analysis is performed for nitrite without the cadmium reduction step, and this value is subtracted from the combined nitrate+nitrite measurement to determine the nitrate concentration.
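The correction step amounts to a subtraction of two calibrated readings. The following sketch assumes a linear calibration (absorbance = slope × concentration + intercept) shared by both measurements and an optional column reduction efficiency; the function names, the calibration slope, and the absorbance values are all hypothetical.

```python
def concentration_from_absorbance(absorbance, slope, intercept=0.0):
    """Invert a linear calibration A = slope * c + intercept (c in umol/L)."""
    if slope <= 0:
        raise ValueError("slope must be positive")
    return (absorbance - intercept) / slope


def nitrate_by_difference(a_reduced, a_unreduced, slope, intercept=0.0,
                          reduction_efficiency=1.0):
    """Nitrate = (nitrate + nitrite after Cd reduction) - (nitrite without reduction).

    reduction_efficiency is the assumed fraction of nitrate converted to
    nitrite on the column; 1.0 means complete reduction.
    """
    total = concentration_from_absorbance(a_reduced, slope, intercept)
    nitrite = concentration_from_absorbance(a_unreduced, slope, intercept)
    return (total - nitrite) / reduction_efficiency, nitrite


# Hypothetical calibration slope: 0.05 absorbance units per umol/L.
nitrate, nitrite = nitrate_by_difference(a_reduced=0.525, a_unreduced=0.025, slope=0.05)
print(round(nitrate, 3), round(nitrite, 3))
```

In practice the column efficiency would be checked with nitrate standards rather than assumed; the parameter is included only to show where such a correction would enter.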

3.2.2. Phosphate (PO₄³⁻) Analysis: Molybdenum Blue Method

This is a standard colorimetric method for the determination of soluble reactive phosphorus in seawater.[7][19][20][21][22][23]

Methodology:

- Complex Formation: Acidified ammonium molybdate is added to the seawater sample, which reacts with orthophosphate to form a phosphomolybdate complex.[19][20]
- Reduction: Ascorbic acid is then used to reduce the phosphomolybdate complex to an intensely colored molybdenum blue complex.[19][20] The reaction is often catalyzed by antimony potassium tartrate.[20]
- Spectrophotometry: The absorbance of the molybdenum blue color is measured with a spectrophotometer at a wavelength of approximately 880 nm. The intensity of the color is directly proportional to the phosphate concentration.
- Automation: For high-throughput analysis, these colorimetric methods are often automated using continuous flow analyzers or flow injection analysis systems.[24][25]
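Because absorbance is directly proportional to phosphate concentration, a sample is quantified against a standard curve. The sketch below fits a straight line to a set of standards by ordinary least squares and inverts it for an unknown; the standard concentrations, absorbances, and the helper name `fit_line` are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical phosphate standards (umol/L) and their absorbances near 880 nm.
standards = [0.0, 0.5, 1.0, 2.0, 3.0]
absorbances = [0.002, 0.012, 0.022, 0.042, 0.062]

slope, intercept = fit_line(standards, absorbances)

# Invert the calibration for a sample with a measured absorbance of 0.032.
sample_phosphate = (0.032 - intercept) / slope
print(round(slope, 4), round(intercept, 4), round(sample_phosphate, 3))
```

Dividing a nitrate concentration obtained by the cadmium reduction method by this phosphate value gives the dissolved N:P ratio that is compared against the canonical 16:1.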

Visualization of the Redfield Ratio in Marine Biogeochemical Cycling

The following diagram illustrates the central role of phytoplankton in linking the pools of dissolved inorganic nutrients to the composition of organic matter, as described by the Redfield ratio.

Conclusion

The Redfield ratio remains a fundamental tenet in oceanography, providing a powerful framework for understanding the intricate coupling between marine life and the chemical environment of the world's oceans. While the canonical 106:16:1 stoichiometry is a useful approximation, it is now well established that significant and systematic variations exist. These deviations, driven by a combination of biological and physical factors, highlight the dynamic and heterogeneous nature of marine biogeochemical cycles.

For researchers, scientists, and professionals in fields such as drug development, where marine-derived compounds are of interest, a thorough understanding of the elemental stoichiometry of marine primary producers is crucial. It provides insights into the nutritional requirements and potential biochemical composition of these organisms. The continued application of precise and standardized experimental protocols, such as those outlined in this guide, is essential for advancing our knowledge of the factors that control the elemental composition of life in the sea and for predicting how these might change in the future.

References

- 1. 2024.sci-hub.se [2024.sci-hub.se]

- 2. Redfield ratio - Wikipedia [en.wikipedia.org]

- 3. mmab.ca [mmab.ca]

- 4. tos.org [tos.org]

- 5. Geologic controls on phytoplankton elemental composition - PMC [pmc.ncbi.nlm.nih.gov]

- 6. ftp.soest.hawaii.edu [ftp.soest.hawaii.edu]

- 7. dgtresearch.com [dgtresearch.com]

- 8. documents.thermofisher.com [documents.thermofisher.com]

- 9. CHNS ANALYSIS [www-odp.tamu.edu]

- 10. justagriculture.in [justagriculture.in]

- 11. contractlaboratory.com [contractlaboratory.com]

- 12. rsc.org [rsc.org]

- 13. Chapter 15 - Determination of Particulate Organic and Particulate Nitrogen [nodc.noaa.gov]

- 14. Frontiers | The Macromolecular Basis of Phytoplankton C:N:P Under Nitrogen Starvation [frontiersin.org]

- 15. cefns.nau.edu [cefns.nau.edu]

- 16. Determination of nitrate in sea water by cadmium-copper reduction to nitrite | Journal of the Marine Biological Association of the United Kingdom | Cambridge Core [cambridge.org]

- 17. Chapter 9 - The Determination of Nitrate in Sea Water [nodc.noaa.gov]

- 18. chemetrics.b-cdn.net [chemetrics.b-cdn.net]