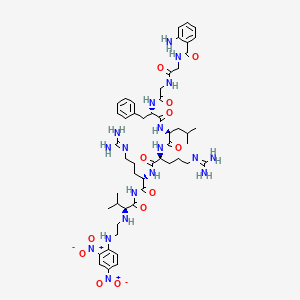

QF-Erp7

Description

The Transformer architecture, introduced in 2017, revolutionized natural language processing (NLP) by replacing recurrent and convolutional layers with self-attention mechanisms. This enabled parallelized training and superior performance on tasks such as translation and parsing. Subsequent models such as BERT, RoBERTa, and BART built on this foundation, refining pretraining objectives, data efficiency, and task adaptability.
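
As a rough illustration of the self-attention mechanism mentioned above, the following is a minimal pure-Python sketch of scaled dot-product attention. The function names and the toy inputs are assumptions made for this example; it omits the learned projections, multiple heads, and masking that a real Transformer layer would include.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query (hypothetical helper for illustration).

    queries, keys: lists of vectors with the same dimension d_k.
    values: list of vectors, one per key.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy input: a zero query attends equally to both keys.
result = scaled_dot_product_attention(
    queries=[[0.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
)
print(result)
```

Each query is processed independently of the others, which is why this computation can be parallelized across positions, unlike a recurrent layer.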

Properties

| Property | Value |
|---|---|
| CAS number | 132472-84-5 |
| Molecular formula | C51H74N18O12 |
| Molecular weight | 1131.2 g/mol |
| IUPAC name | 2-amino-N-[2-[[2-[[(2S)-1-[[(2S)-1-[[(2S)-5-(diaminomethylideneamino)-1-[[(2S)-5-(diaminomethylideneamino)-1-[[(2S)-2-[2-(2,4-dinitroanilino)ethylamino]-3-methylbutanoyl]amino]-1-oxopentan-2-yl]amino]-1-oxopentan-2-yl]amino]-4-methyl-1-oxopentan-2-yl]amino]-1-oxo-3-phenylpropan-2-yl]amino]-2-oxoethyl]amino]-2-oxoethyl]benzamide |
| InChI | `InChI=1S/C51H74N18O12/c1-29(2)24-38(66-48(76)39(25-31-12-6-5-7-13-31)63-42(71)28-61-41(70)27-62-44(72)33-14-8-9-15-34(33)52)47(75)65-36(16-10-20-59-50(53)54)45(73)64-37(17-11-21-60-51(55)56)46(74)67-49(77)43(30(3)4)58-23-22-57-35-19-18-32(68(78)79)26-40(35)69(80)81/h5-9,12-15,18-19,26,29-30,36-39,43,57-58H,10-11,16-17,20-25,27-28,52H2,1-4H3,(H,61,70)(H,62,72)(H,63,71)(H,64,73)(H,65,75)(H,66,76)(H4,53,54,59)(H4,55,56,60)(H,67,74,77)/t36-,37-,38-,39-,43-/m0/s1` |
| InChI Key | `JDYFMQPAPMPKCG-WWFQFRPWSA-N` |
| SMILES | `CC(C)CC(C(=O)NC(CCCN=C(N)N)C(=O)NC(CCCN=C(N)N)C(=O)NC(=O)C(C(C)C)NCCNC1=C(C=C(C=C1)[N+](=O)[O-])[N+](=O)[O-])NC(=O)C(CC2=CC=CC=C2)NC(=O)CNC(=O)CNC(=O)C3=CC=CC=C3N` |
| Isomeric SMILES | `CC(C)C[C@@H](C(=O)N[C@@H](CCCN=C(N)N)C(=O)N[C@@H](CCCN=C(N)N)C(=O)NC(=O)[C@H](C(C)C)NCCNC1=C(C=C(C=C1)[N+](=O)[O-])[N+](=O)[O-])NC(=O)[C@H](CC2=CC=CC=C2)NC(=O)CNC(=O)CNC(=O)C3=CC=CC=C3N` |
| Canonical SMILES | `CC(C)CC(C(=O)NC(CCCN=C(N)N)C(=O)NC(CCCN=C(N)N)C(=O)NC(=O)C(C(C)C)NCCNC1=C(C=C(C=C1)[N+](=O)[O-])[N+](=O)[O-])NC(=O)C(CC2=CC=CC=C2)NC(=O)CNC(=O)CNC(=O)C3=CC=CC=C3N` |
| Appearance | Solid powder |
| Other CAS numbers | 132472-84-5 |
| Purity | >98% (or refer to the Certificate of Analysis) |
| Shelf life | >3 years if stored properly |
| Solubility | Soluble in DMSO |
| Storage | Store dry and dark at 0-4 °C for the short term (days to weeks) or at -20 °C for the long term (months to years). |
| Synonyms | 2-aminobenzoyl-glycyl-glycyl-phenylalanyl-leucyl-arginyl-arginyl-valyl-N-(2,4-dinitrophenyl)ethylenediamine; Abz-G-G-F-L-R-R-V-EDDn; Abz-Gly-Gly-Phe-Leu-Arg-Arg-Val-EDDn; QF-ERP7 |
| Product origin | United States |
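
The listed molecular weight can be cross-checked against the molecular formula with a few lines of Python. This is a minimal sketch: the atomic weights below are rounded standard values chosen for this example, and the parser is a hypothetical helper that only handles simple formulas without parentheses or charges.

```python
import re

# Rounded standard atomic weights in g/mol (an assumption of this sketch).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}


def molecular_weight(formula):
    """Sum atomic weights for a flat formula such as 'C51H74N18O12'."""
    total = 0.0
    # Each match is an element symbol followed by an optional count.
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total


mw = molecular_weight("C51H74N18O12")
print(f"{mw:.2f} g/mol")
```

With these rounded weights the result agrees with the listed 1131.2 g/mol to within about 0.1 g/mol; small differences of this size depend on which atomic-weight table is used.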

Comparison with Similar Compounds

Comparison with Similar Models

The following table compares key Transformer-based models using metrics and methodologies from the evidence:

Critical Analysis of Research Findings

- Efficiency vs. Performance: The Transformer reduced training time by 75% compared to prior models but required extensive data. BART and T5 later emphasized scalability, with T5 leveraging a 750 GB corpus for multitask learning.

- Bidirectional Context: BERT’s bidirectional approach outperformed unidirectional models like GPT but was initially undertrained, a flaw corrected by RoBERTa through extended training cycles.

- Task Generalization: BART demonstrated versatility by excelling in both generation (summarization) and comprehension (GLUE), whereas BERT and RoBERTa focused primarily on comprehension.

Practical Implications

- Industry Adoption: BERT and RoBERTa dominate tasks like sentiment analysis and named entity recognition, while BART is preferred for summarization and dialogue systems.

- Resource Considerations: Training T5 or RoBERTa demands significant computational resources (e.g., hundreds of GPUs), making them less accessible for small-scale projects.

Retrosynthesis Analysis

AI-Powered Synthesis Planning: Our tool employs the Template_relevance Pistachio, Template_relevance Bkms_metabolic, Template_relevance Pistachio_ringbreaker, Template_relevance Reaxys, and Template_relevance Reaxys_biocatalysis models, leveraging a vast database of chemical reactions to predict feasible synthetic routes.

One-Step Synthesis Focus: Specifically designed for one-step synthesis, it provides concise and direct routes for your target compounds, streamlining the synthesis process.

Accurate Predictions: Utilizing the extensive PISTACHIO, BKMS_METABOLIC, PISTACHIO_RINGBREAKER, REAXYS, and REAXYS_BIOCATALYSIS databases, our tool offers high-accuracy predictions, reflecting the latest in chemical research and data.

Strategy Settings

| Setting | Value |
|---|---|
| Precursor scoring | Relevance Heuristic |
| Min. plausibility | 0.01 |
| Model | Template_relevance |
| Template Set | Pistachio/Bkms_metabolic/Pistachio_ringbreaker/Reaxys/Reaxys_biocatalysis |
| Top-N result to add to graph | 6 |
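
To make the roles of the plausibility threshold and the top-N limit concrete, here is a minimal sketch of how settings like these might be applied to a list of candidate precursors. It is not the tool's actual implementation: the `StrategySettings` class, the `select_precursors` function, and the candidate data are all hypothetical, and only the two numeric values are taken from the settings above.

```python
from dataclasses import dataclass


@dataclass
class StrategySettings:
    # Values taken from the settings listed above.
    min_plausibility: float = 0.01
    top_n: int = 6


def select_precursors(candidates, settings):
    """Filter and rank hypothetical candidates.

    candidates: list of (name, plausibility, relevance_score) tuples.
    Returns at most settings.top_n candidates, highest relevance first.
    """
    # Drop candidates below the plausibility threshold.
    plausible = [c for c in candidates if c[1] >= settings.min_plausibility]
    # Rank the survivors by relevance score, best first.
    ranked = sorted(plausible, key=lambda c: c[2], reverse=True)
    return ranked[: settings.top_n]


# Invented example data, for illustration only.
candidates = [
    ("route_a", 0.90, 0.75),
    ("route_b", 0.005, 0.99),  # below the plausibility threshold
    ("route_c", 0.40, 0.60),
    ("route_d", 0.02, 0.10),
]
for name, plausibility, relevance in select_precursors(candidates, StrategySettings()):
    print(name, plausibility, relevance)
```

In this sketch the threshold is applied before ranking, so a highly ranked but implausible candidate never reaches the result list.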

Feasible Synthetic Routes


Disclaimer and Information on In Vitro Research Products

Please note that all articles and product information presented on BenchChem are intended for informational purposes only. The products available for purchase on BenchChem are designed specifically for in vitro studies, which are conducted outside living organisms. In vitro studies, a term derived from the Latin for "in glass", involve experiments performed in controlled laboratory settings using cells or tissues. It is important to note that these products are not classified as medicines or drugs, and they have not received FDA approval for the prevention, treatment, or cure of any medical condition, ailment, or disease. We must emphasize that any form of bodily introduction of these products into humans or animals is strictly prohibited by law. It is essential to adhere to these guidelines to ensure compliance with legal and ethical standards in research and experimentation.